Just got a SuperMicro AS-2125HS-C TNR system here at work with dual AMD Epyc 9224 (24c/48t) processors and 24 Samsung PM9A3 7.68TB NVMe SSDs.

I'm seeing the oddest thing when I try to measure the performance of the disk array.

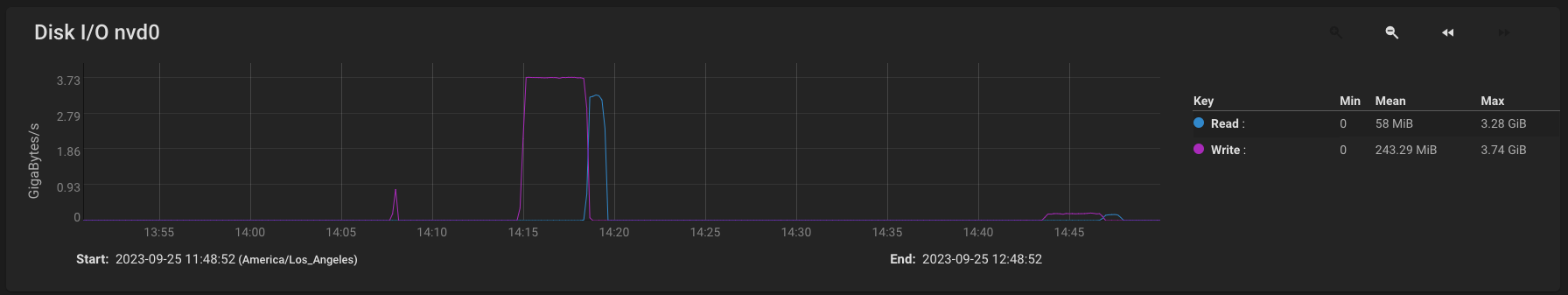

If I run a disk I/O test against one drive (the first blip around 14:15), I get roughly 3.7GB/s. The line around 14:45 is the same test run against a 24-disk striped array, and the disk throughput drops to about 1/24 of one drive alone. When I tried a striped array of two disks, the speed from each drive dropped to half. A 4-disk striped array dropped the speeds to 1/4, and so on.
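To put numbers on that pattern, here is a quick back-of-the-envelope sketch. It assumes the graph shows per-drive throughput and that the 1/N drop holds exactly; the 3.7 figure is my rough reading of the graph, and the function names are made up for illustration. If those assumptions hold, the total across the whole stripe stays at about the single-drive figure no matter how many disks are in it.

```python
# Rough arithmetic only: assumes each drive in an N-disk stripe
# runs at exactly 1/N of the single-drive rate seen on the graph.
SINGLE_DRIVE_GBPS = 3.7  # approximate single-drive reading from the graph


def per_drive_gbps(n_disks, single=SINGLE_DRIVE_GBPS):
    """Per-drive throughput under the observed 1/N pattern."""
    return single / n_disks


def aggregate_gbps(n_disks, single=SINGLE_DRIVE_GBPS):
    """Total throughput across all drives in the stripe."""
    return n_disks * per_drive_gbps(n_disks, single)


if __name__ == "__main__":
    # Stripe widths mentioned in the post: 1, 2, 4, and 24 disks.
    for n in (1, 2, 4, 24):
        print(
            f"{n:2d} disks: {per_drive_gbps(n):.2f} GB/s per drive, "
            f"{aggregate_gbps(n):.2f} GB/s total"
        )
```

In other words, adding disks does not appear to add any throughput at all in this test, which is the part I can't explain.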

This is a stock, out-of-the-box TrueNAS Core install. I'm not sure whether I need to make any modifications. Is there a good primer for SSD arrays with TrueNAS? Does anyone have any idea what I'm looking at? (I sure don't!)

Any help, even WAGs, would be appreciated!

Thanks!
