FreeNASUser5129

Dabbler

- Joined: Feb 18, 2012
- Messages: 15

Hi Friends,

I am running TrueNAS Core 12.0-U8.

I recently needed to replace one of the hard disks in my zpool. After replacing it and resilvering, the pool status shows Unhealthy.

I ran

Code:

zpool status -x

I saw that it is showing ZFS-8000-8A as the error, and referenced this documentation page.

Based on that page I ran

Code:

zpool status -xv

with this result:

Code:

root@freenas:~ # zpool status -xv

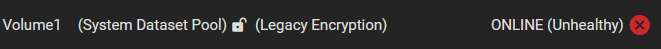

pool: Volume1

state: ONLINE

status: One or more devices has experienced an error resulting in data

corruption. Applications may be affected.

action: Restore the file in question if possible. Otherwise restore the

entire pool from backup.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-8A

scan: scrub repaired 0B in 06:57:34 with 15 errors on Sat Apr 9 14:07:52 2022

config:

NAME STATE READ WRITE CKSUM

Volume1 ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

gptid/7a341d28-0344-11e5-8c94-002590dbbf1d.eli ONLINE 0 0 47

gptid/84901eb6-067c-11e3-8226-d43d7e93e546.eli ONLINE 0 0 47

gptid/855049fe-067c-11e3-8226-d43d7e93e546.eli ONLINE 0 0 47

gptid/6d5b0978-aefb-11ec-a75a-002590dbbf1d.eli ONLINE 0 0 47

gptid/b52424a6-a670-11e5-a798-002590dbbf1d.eli ONLINE 0 0 47

gptid/e5c9026a-56f7-11e5-8bb4-002590dbbf1d.eli ONLINE 0 0 47

errors: Permanent errors have been detected in the following files:

/var/db/system/syslog-92e6ac8b6342418e99afb49a8441c54c/log/debug.log

/var/db/system/syslog-92e6ac8b6342418e99afb49a8441c54c/log/console.log

/var/db/system/syslog-92e6ac8b6342418e99afb49a8441c54c/log/messages

/var/db/system/syslog-92e6ac8b6342418e99afb49a8441c54c/log/daemon.log

/var/db/system/syslog-92e6ac8b6342418e99afb49a8441c54c/log/middlewared.log

/var/db/system/rrd-92e6ac8b6342418e99afb49a8441c54c/localhost/disktemp-ada1/temperature.rrd

/var/db/system/rrd-92e6ac8b6342418e99afb49a8441c54c/localhost/disktemp-ada3/temperature.rrd
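In case it helps anyone reading along, here is a minimal sketch for pulling just the affected file paths out of that output. The function name `list_damaged_files` is my own invention, not part of ZFS or TrueNAS, and it assumes the file list is the last section of the output, as it is for my single pool above.

```shell
# Hypothetical helper (not a ZFS or TrueNAS command): print the file paths
# that follow the "Permanent errors" line in `zpool status -v` output.
# Reads from the files given as arguments, or from stdin if none are given.
# Assumption: the file list is the final section of the input, so every
# non-blank line after the marker is treated as a path.
list_damaged_files() {
    awk '
        /Permanent errors have been detected/ { found = 1; next }
        found && NF {
            sub(/^[ \t]+/, "")   # strip the leading indentation
            print
        }
    ' "$@"
}
```

It would be used as `zpool status -v Volume1 | list_damaged_files`. For the output above it should print the seven paths, all of which sit under /var/db/system.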

I have done several pool scrubs, and I have run each of the 6 disks through Manual LONG S.M.A.R.T. tests. Everything completes successfully.

The action of 'destroying the pool and re-creating from a backup' seems risky, and I'm not confident I could carry it out successfully. I'm hoping someone can provide some guidance on what I should do to resolve the unhealthy status.

Thanks very much.
