So it's memtest86 not injecting memory errors correctly, or AMD has broken memory error injection implementation? That's really good news.

I suspect this is because of the CPU. For memory error injection to fully work, you require memory, motherboard (BIOS) and CPU to all support this. I've never seen it work on Ryzen, although the motherboard (BIOS) and memory should be ok. Also I don't think you should see this as a "broken feature". It probably was left out intentionally by AMD, as it could potentially cause security issues if I remember well and it isn't required for the normal functioning of the computer.

I am happy to report that ECC error reporting works on at least two mobos.

Setups tested so far

* AMD Ryzen 9 3950X & ASUS Prime X570-P

* AMD Ryzen 9 3950X & ASRock Rack X470D4U

For me the X470D4U was the most important so I don't think I will continue checking the other boards.

The method I used was to take the inner wires of some electrical cable and stick them into the memory slot holding an 8GB ECC UDIMM. As far as I understood, FreeNAS does not support ECC reporting, so I tried using Proxmox:

root@pve:~# pveversion

pve-manager/6.1-8/806edfe1 (running kernel: 5.4.24-1-pve)

I saw the report of corrected errors. Of the two boards, only the X470D4U has IPMI as far as I could tell, but the IPMI log is not showing any ECC errors :( which is a major oversight from the manufacturer if you ask me. Let's hope they can fix that in an update.

Although I'm very happy to hear that Diversity managed to see ECC errors reported on the ASRock Rack X470D4U with a Ryzen 9 3950X (Zen 2), I'm also puzzled about what this means for my own failed attempts to achieve the same...

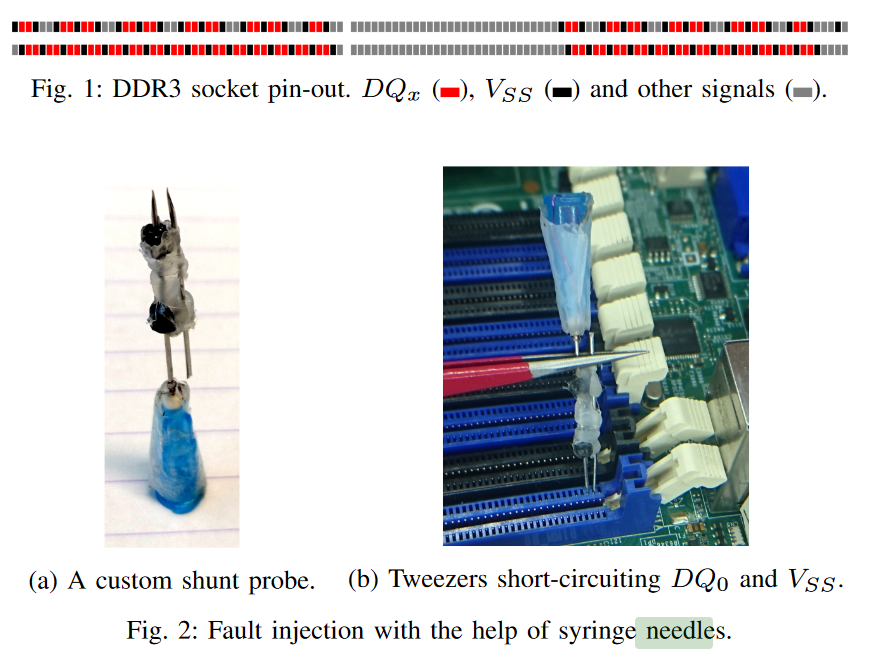

Causing memory errors by shorting pins of memory modules

Firstly I did some research on this "Triggering memory errors using 'needles' or wires"-approach, which Diversity has used. I didn't look into this method yet, as it seemed too risky and I didn't want to damage or degrade any of my hardware.

I found a good (but complicated) description in the following paper:

Error injection with a shunt probe. To reduce noise and cross-talk between high-speed signals, data pins of the DDR DIMM (DQx) are physically placed next to a ground (VSS) signal. As the ground plane (VSS) has a very low impedance compared to the data signal and because the signal driver is (pseudo) open drain, short-circuiting the VSS and DQx signals will pull DQx from its high voltage level to “0”. Depending on the encoding of the high voltage, this short-circuiting results in a 1-to-0 or 0-to-1 bit flip on a given DQx line. Figure 1 displays the locations of the important signals and shows that a DQx signal is always adjacent to a VSS signal. Therefore, to inject a single correctable bit error, while the system exercises the memory by writing and reading all ones, we have to short-circuit a DQx signal with VSS. We can achieve the short-circuiting effect with the help of a custom-built shunt probe using syringe needles (Figure 2a). We insert the probe in the holes of the DIMM socket as shown in Figure 2b. For clarity, we omit the memory module from the picture. We then use tweezers to control when the error is injected by short-circuiting the two needles and thus the targeted DQx and nearby VSS signal. This method, while simple (and cheap), is effective in the case of a memory controller that computes ECCs in a single memory transaction (ECC word size is 64 bits) and can be used instead of expensive ad-hoc equipment [30], [31]. On some systems (e.g., configuration AMD-1) data is retrieved in two memory transactions and then interleaved. Because of the low temporal accuracy of the shunt probe method, an error inserted on memory line DQk (0 ≤ k < 64) that appears on data bit 2*k will also “reflect” on data bit 2*k+1 inside the 128 bit ECC word. In this case the syndrome corresponds to two bit errors and contradicts Proposition 1.
To ensure single bit errors, once the interleaved mechanism is understood, the exercising data can be constructed such that the reflected positions contain only bits that are encoded to low voltage, essentially masking the reflections.

So in short, you're connecting a data pin to a ground pin, so that the current on the data pin "flows away" into the ground pin and this "flips a bit". When using the correct pins and not accidentally shorting anything else, this should actually be "reasonably safe" to do I think... (please correct me if I'm wrong :) )

Why is this causing single-bit errors and not multi-bit errors? I THINK because every "clock tick" data is pulled from each data-pin of the memory module. So if you change only the "result" of 1 pin, you get a maximum 1 bit flipped per "clock tick", which ECC can then correct. (not sure though)
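To make the "one flipped bit can be corrected, two cannot" idea a bit more concrete, here is a toy sketch. It is NOT the code a Ryzen memory controller uses (I don't know which one it uses). It is a scaled-down extended Hamming code with 4 data bits and 4 check bits, while an ECC DIMM carries 64 data bits plus 8 check bits. The function names `encode` and `decode` are just my own.

```python
def encode(nibble):
    """Encode 4 data bits into an 8-bit extended Hamming codeword.

    Index 0 holds the overall parity bit; indices 1..7 are the classic
    Hamming positions (parity at 1, 2, 4 and data at 3, 5, 6, 7).
    """
    d = [(nibble >> i) & 1 for i in range(4)]
    code = [0] * 8
    code[3], code[5], code[6], code[7] = d
    code[1] = code[3] ^ code[5] ^ code[7]
    code[2] = code[3] ^ code[6] ^ code[7]
    code[4] = code[5] ^ code[6] ^ code[7]
    code[0] = sum(code[1:]) % 2  # makes the total number of ones even
    return code


def decode(code):
    """Return (status, data); status is 'ok', 'corrected' or 'uncorrectable'."""
    code = list(code)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos  # XOR of the positions of all set bits
    parity_odd = sum(code) % 2 == 1

    if syndrome == 0 and not parity_odd:
        status = "ok"
    elif parity_odd:
        # Exactly one bit flipped; the syndrome is its position
        # (0 means the overall parity bit itself was hit).
        code[syndrome] ^= 1
        status = "corrected"
    else:
        # Non-zero syndrome but even parity: two bits flipped.
        return "uncorrectable", None

    data = [code[3], code[5], code[6], code[7]]
    return status, sum(bit << i for i, bit in enumerate(data))
```

Flipping one bit of `encode(0b1011)` still decodes to `0b1011` with status `corrected`, while flipping two bits is only detected. That matches the corrected / uncorrected distinction that shows up in the error reports.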

This paper is already a bit older and was using DDR3. The 'AMD-1' configuration they are talking about (where interleaving is making things complicated) is an 'AMD Opteron 6376 (Bulldozer (15h))'.
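The "reflection" the paper describes for that AMD-1 configuration can be written down as a small sketch. The mapping DQk -> data bits 2*k and 2*k+1 is taken from the quote above. Everything else is my own assumption: the function names, and in particular that a high voltage encodes a 1, so that a short to ground can only turn a 1 into a 0 (the paper says this depends on the encoding).

```python
ALL_ONES = (1 << 128) - 1

# Exercising pattern with a 1 on every even bit and a 0 on every odd bit,
# so the "reflected" position 2*k+1 is already low (assumption: high = 1).
MASKED = sum(1 << (2 * i) for i in range(64))


def reflected_bits(k):
    """Data bits inside the 128-bit ECC word touched by a short on DQk."""
    if not 0 <= k < 64:
        raise ValueError("k must be in 0..63")
    return (2 * k, 2 * k + 1)


def flipped_bits(k, word):
    """Bits that actually change when DQk is pulled low while reading `word`.

    Only bits that are currently 1 can flip, because the short can only
    pull the line down (under the high = 1 assumption).
    """
    return [bit for bit in reflected_bits(k) if (word >> bit) & 1]
```

With the all-ones pattern, `flipped_bits(5, ALL_ONES)` gives two flipped bits (10 and 11), which is the double-bit case the paper warns about. With the masked pattern only bit 10 flips, so it stays a single-bit error.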

As far as I understand, the extra complexity of "interleaving", that happens on the Opteron system, is only applicable on Ryzen, when using Dual Rank memory. As Diversity was using a single 8GB module, I suppose he only has single rank memory, so wasn't confronted with the "interleaving-complexity". However, if I would try this with my 16GB modules, I would be confronted with this extra complexity, because ?all? 16GB modules are dual rank...

I concluded this from this article (but I could be misinterpreting things!):

https://www.reddit.com/r/Amd/comments/6nzjeb/optimising_ryzen_cpu_memory_clocks_for_better/

RankInterleaving: auto (left on default, untested; should only be toggled with Dual Rank Memory* )

As the paper was for DDR3, you of course need to find the pin layout of DDR4 before you can apply it to Ryzen. The following datasheet has a pretty clear pin layout of an unregistered ECC DDR4 module:

On pages 6, 7 and 8 you can see the description per pin, and on page 17 you can see a picture of where those pin numbers are on the memory module. I suppose all VSS pins are ground pins and DQ+number pins are data pins. So if we follow the example of the paper and short DQ0 with a VSS, this corresponds to shorting pin 4 (VSS) with pin 5 (DQ0). But I guess shorting pin 2 (VSS) with pin 3 (DQ4) could work equally well.
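Finding candidate pin pairs is easy to do mechanically once you have typed over the pin table from the datasheet. Below is a minimal sketch. The `PINS` table only contains the four pins I mentioned above (the rest has to come from the datasheet), and it assumes that consecutive pin numbers sit physically next to each other on the same side of the module, which should be checked against the picture on page 17. The function name is my own.

```python
import re

# Only the four pins named in this post; fill in the rest from the datasheet.
PINS = {2: "VSS", 3: "DQ4", 4: "VSS", 5: "DQ0"}


def ground_neighbours(pins):
    """Map each data signal (DQ + number) to its directly adjacent VSS pins.

    Assumes pin n is physically next to pins n-1 and n+1.
    """
    result = {}
    for number, signal in sorted(pins.items()):
        # fullmatch so that strobe signals such as DQS... are not mistaken
        # for data pins
        if re.fullmatch(r"DQ\d+", signal):
            result[signal] = [
                n for n in (number - 1, number + 1) if pins.get(n) == "VSS"
            ]
    return result
```

For the four pins above this gives DQ4 next to pins 2 and 4, and DQ0 next to pin 4, which matches the two pairs I described.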

This should help us "understand" a bit better what Diversity has done and how to "safely" reproduce it.

The results from Diversity

I was in contact with Diversity and have some more details on his testing (all pictures in this "chapter" are from Diversity himself). Diversity used the following video:

https://www.youtube.com/watch?v=_npcmCxH2Ig. Instead of needles and tweezers, he used a thin wire, as in the picture below.

He was able to trigger "ECC errors" in Memtest86 and "Corrected errors" in Linux (Proxmox).
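For anyone who wants to check the Linux side without digging through logs: the kernel's EDAC subsystem exposes counters per memory controller under `/sys/devices/system/edac/mc/`. The sketch below just reads `ce_count` (corrected) and `ue_count` (uncorrected) for each controller. I'm assuming the EDAC driver for the platform is loaded. If it isn't, the directory is empty or missing and the function simply returns nothing. The function name is my own.

```python
from pathlib import Path


def read_edac_counts(root="/sys/devices/system/edac/mc"):
    """Return {controller: {'corrected': n, 'uncorrected': n}} from EDAC sysfs."""
    counts = {}
    for mc in sorted(Path(root).glob("mc[0-9]*")):
        try:
            corrected = int((mc / "ce_count").read_text())
            uncorrected = int((mc / "ue_count").read_text())
        except (OSError, ValueError):
            # Controller directory without readable counters: skip it.
            continue
        counts[mc.name] = {"corrected": corrected, "uncorrected": uncorrected}
    return counts


if __name__ == "__main__":
    for name, c in read_edac_counts().items():
        print(f"{name}: corrected={c['corrected']} uncorrected={c['uncorrected']}")
```

Running it before and after a test and comparing the numbers should show whether a corrected error was counted.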

My testing / experiences

As you know, I also tried triggering reporting of corrected memory errors. I tried this by overclocking / undervolting the memory to the point where it is on the edge of stability. This edge is very "thin", can be hard to reach and can result in the following scenarios in my understanding:

1) Not unstable enough, so no errors at all

2) Only just unstable enough, so that single-bit errors occur only occasionally, and only when stressing the memory enough. These will then be corrected by ECC and will not cause faults or crashes.

3) A little more unstable, so that single-bit errors occur a bit more often and less stress is required on the memory to achieve this. But also (uncorrected) multi-bit errors can occur sometimes, which could cause faults / crashes.

4) Even a little bit more unstable, so that mostly multi-bit errors occur when stressing the memory and single bit errors might be rare. This also makes the system more prone to faults and crashes.

5) Even more unstable, so the multi-bit errors occur even when hardly stressing the memory at all. This makes the system very unstable and probably will not be able to boot into OS all the time.

6) Too unstable, so that it doesn't boot at all.

Both scenario 2) and 3) are "good enough" for testing reporting of corrected memory errors. Perhaps even scenario 4), if you're lucky...
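To show how I would map one test run onto these scenarios, here is a rough sketch. It is only my own reading of the list above: the function names and the rule "a scenario is a candidate if the observed counts don't contradict it" are assumptions, and in practice an uncorrected error may crash the system before it gets counted at all.

```python
def candidate_scenarios(booted, corrected, uncorrected):
    """Return the scenario numbers (1..6) consistent with one test run."""
    if not booted:
        return [5, 6]   # never got into the OS this time
    if corrected == 0 and uncorrected == 0:
        return [1]      # no errors seen (or not stressed long enough)
    if uncorrected == 0:
        return [2]      # only corrected single-bit errors
    if corrected > 0:
        return [3, 4]   # mix of corrected and uncorrected errors
    return [4, 5]       # only uncorrected errors seen


def good_for_reporting_test(booted, corrected):
    """A run is useful here as soon as one corrected error was observed."""
    return booted and corrected > 0
```

So a run with 2 corrected and 0 uncorrected errors would land in scenario 2), and any run with at least one corrected error is "good enough" for checking the reporting.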

During all my testing I tried 100+ possible memory settings, using all kinds of frequencies, timings and voltages, of which 10-30 were potentially in scenario 2) or 3). I "PARTLY" kept track of all testing in the below (incomplete) Excel:

This convinced me that I should have at least once been in scenario 2) or 3), where I should have seen corrected errors (but didn't). That is why I concluded that it didn't work and I contacted Asrock Rack and AMD to work on this.

Conclusions / Questions / Concerns

Now what does all of this mean? Does this mean that I never reached scenario 2) or 3)? Does it mean scenario 2) and 3) are almost impossible to reach using the methods I tried? Or does it mean that Diversity perhaps triggered a different kind of memory error? I'm not sure and I hope someone can clarify...

I know there is error correction and reporting happening on many layers in a modern computer. As far as I know, there are these:

1) Inside the memory module itself (only when you have ECC modules). The memory module then has an extra chip to store checksums. I think this works similarly to RAID5 for HDDs, so that a memory error is detected and corrected in the module itself, even before it leaves the memory module.

2) On the way from the memory module to the memory controller on the CPU (databus). Error detecting / correcting / reporting for these kinds of errors are handled by the memory controller in the CPU, so ECC memory isn't even required to make this work.

3) Inside the CPU, data is also transferred between the L1/L2/L3 caches and the cores. Error detecting / correcting / reporting is possible there as well, I think.

All of these might look confusingly similar when reported to the OS, but I do think they are often reported in a slightly different manner. I've seen reports where the CPU cache (L1/L2/L3) was clearly mentioned when reporting a corrected error for example, but I'm not sure what the exact difference between reports of 1) and 2) would be.

In Proxmox screenshots I do read things like "Unified Memory Controller..." and "Cache level: L3/GEN...", but again, I'm not entirely sure if these mean that the errors are in 2) or 3) instead of 1)...

Diversity is draining the current from a data-pin "outside" of the memory module, but I still see 2 possibilities of what's happening:

1) The drain on the data-pin is pulling the current away also inside the memory module itself, where the memory module detects the error, corrects and reports it.

2) The drain on the data-pin only happens after the memory module has done its checks and the error is only detected on the databus and corrected / reported there (so not by the ECC functionality of the memory module).

To know which scenario is happening, we could:

- try to find someone with knowledge on exactly how each type of error is reported and who can exactly identify what is being reported

- perhaps using a non-ECC single-rank module also reports the same kind of ECC errors, which would prove it is happening on the databus.

- perhaps someone with more (I don't have any actually) electrical engineering knowledge can also say something more meaningful than myself?

Diversity did mention that his IPMI log was still empty after getting those errors. So there the motherboard / IPMI is certainly missing some required functionality.