I badly need help. I've been struggling for a few weeks trying to figure out what went wrong.

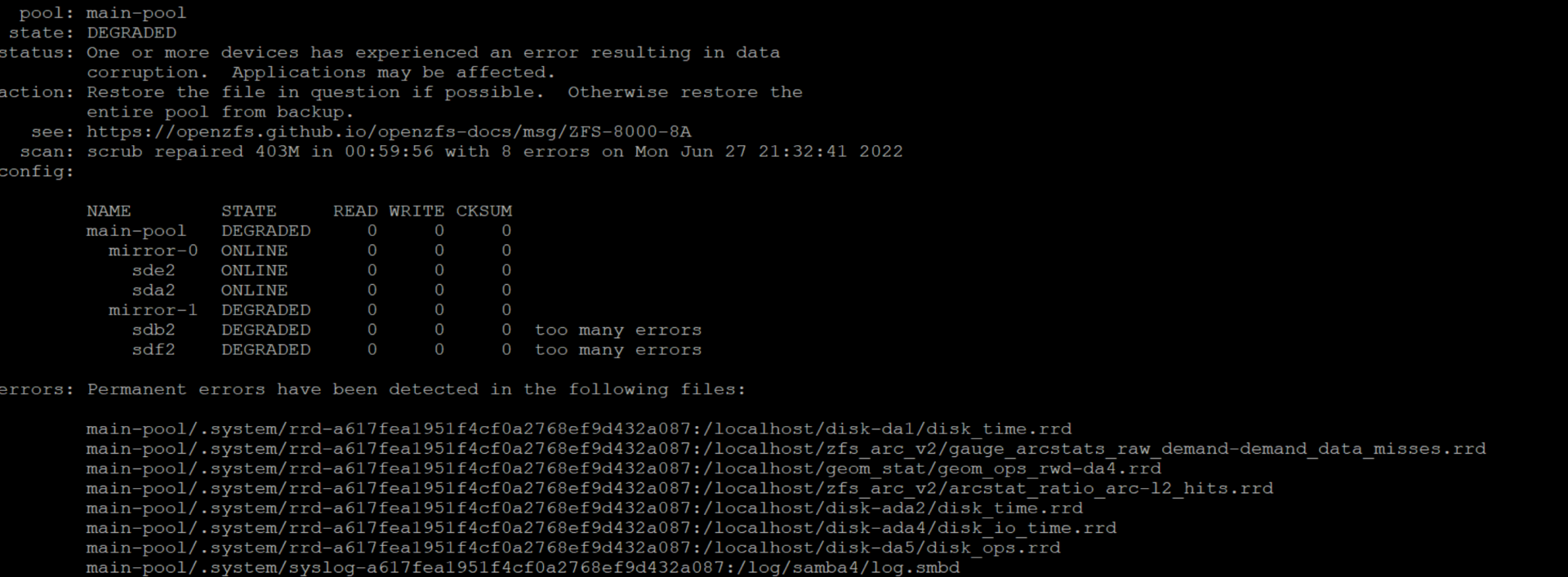

When I try to import the pool, I get the following message and the system just hangs:

```
WARNING: Pool 'main-pool' has encountered an uncorrectable I/O failure and has been suspended.
```

After some research I found that I can use `zpool import -f main-pool -o readonly=on` to get a look at the pool. In the GUI I can see that the snapshots are still intact, but I get the following results when I try `zpool status -v`:

Contents of `/proc/spl/kstat/zfs/dbgmsg`:

```
timestamp message
1657532534 spa.c:8356:spa_async_request(): spa=$import async request task=2048
1657532534 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): LOADED
1657532534 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): UNLOADING
1657532534 spa.c:6096:spa_import(): spa_import: importing main-pool
1657532534 spa_misc.c:418:spa_load_note(): spa_load(main-pool, config trusted): LOADING
1657532534 vdev.c:152:vdev_dbgmsg(): disk vdev '/dev/sde2': best uberblock found for spa main-pool. txg 2797077
1657532534 spa_misc.c:418:spa_load_note(): spa_load(main-pool, config untrusted): using uberblock with txg=2797077
1657532534 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 812649440180795162: path changed from '/dev/gptid/b710b131-b6c6-4d51-8396-033aceac77e1' to '/dev/sde2'
1657532534 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 15431760524353817270: path changed from '/dev/gptid/ad246f60-dfa8-47e2-a8dd-499fa7e26fbe' to '/dev/sda2'
1657532534 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 408973825519044983: path changed from '/dev/ada5p2' to '/dev/sdb2'
1657532534 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 5947065650119764947: path changed from '/dev/gptid/96e51a41-d652-4fa3-8875-b610340cd31c' to '/dev/sdf2'
1657532534 spa_misc.c:418:spa_load_note(): spa_load(main-pool, config trusted): spa_load_verify found 0 metadata errors and 6 data errors
1657532534 spa.c:8356:spa_async_request(): spa=main-pool async request task=2048
1657532534 spa_misc.c:418:spa_load_note(): spa_load(main-pool, config trusted): LOADED
1657532534 spa.c:8356:spa_async_request(): spa=main-pool async request task=32
1657532951 spa_misc.c:418:spa_load_note(): spa_load(main-pool, config trusted): UNLOADING
1657533074 spa.c:6240:spa_tryimport(): spa_tryimport: importing tank
1657533074 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): LOADING
1657533074 vdev.c:152:vdev_dbgmsg(): disk vdev '/dev/disk/by-partuuid/168e1285-00e1-11ed-8158-3cfdfe6bc5f0': best uberblock found for spa $import. txg 918
1657533074 spa_misc.c:418:spa_load_note(): spa_load($import, config untrusted): using uberblock with txg=918
1657533074 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 11779842602659169114: path changed from '/dev/gptid/169657af-00e1-11ed-8158-3cfdfe6bc5f0' to '/dev/disk/by-partuuid/169657af-0
1657533074 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 5281776993533731353: path changed from '/dev/gptid/168e1285-00e1-11ed-8158-3cfdfe6bc5f0' to '/dev/disk/by-partuuid/168e1285-00
1657533074 spa.c:8356:spa_async_request(): spa=$import async request task=2048
1657533074 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): LOADED
1657533074 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): UNLOADING
1657533074 spa.c:6240:spa_tryimport(): spa_tryimport: importing main-pool
1657533074 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): LOADING
1657533074 vdev.c:152:vdev_dbgmsg(): disk vdev '/dev/disk/by-partuuid/b710b131-b6c6-4d51-8396-033aceac77e1': best uberblock found for spa $import. txg 2797077
1657533074 spa_misc.c:418:spa_load_note(): spa_load($import, config untrusted): using uberblock with txg=2797077
1657533074 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 812649440180795162: path changed from '/dev/gptid/b710b131-b6c6-4d51-8396-033aceac77e1' to '/dev/disk/by-partuuid/b710b131-b6c
1657533074 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 15431760524353817270: path changed from '/dev/gptid/ad246f60-dfa8-47e2-a8dd-499fa7e26fbe' to '/dev/disk/by-partuuid/ad246f60-d
1657533074 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 408973825519044983: path changed from '/dev/ada5p2' to '/dev/disk/by-partuuid/0fe8ae3b-ed23-4fbc-a98d-24f5a6a389ce'
1657533074 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 5947065650119764947: path changed from '/dev/gptid/96e51a41-d652-4fa3-8875-b610340cd31c' to '/dev/disk/by-partuuid/96e51a41-d6
1657533074 spa.c:8356:spa_async_request(): spa=$import async request task=2048
1657533074 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): LOADED
1657533074 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): UNLOADING
1657533081 spa.c:6240:spa_tryimport(): spa_tryimport: importing tank
1657533081 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): LOADING
1657533081 vdev.c:152:vdev_dbgmsg(): disk vdev '/dev/disk/by-partuuid/169657af-00e1-11ed-8158-3cfdfe6bc5f0': best uberblock found for spa $import. txg 918
1657533081 spa_misc.c:418:spa_load_note(): spa_load($import, config untrusted): using uberblock with txg=918
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 11779842602659169114: path changed from '/dev/gptid/169657af-00e1-11ed-8158-3cfdfe6bc5f0' to '/dev/disk/by-partuuid/169657af-0
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 5281776993533731353: path changed from '/dev/gptid/168e1285-00e1-11ed-8158-3cfdfe6bc5f0' to '/dev/disk/by-partuuid/168e1285-00
1657533081 spa.c:8356:spa_async_request(): spa=$import async request task=2048
1657533081 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): LOADED
1657533081 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): UNLOADING
1657533081 spa.c:6240:spa_tryimport(): spa_tryimport: importing main-pool
1657533081 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): LOADING
1657533081 vdev.c:152:vdev_dbgmsg(): disk vdev '/dev/disk/by-partuuid/b710b131-b6c6-4d51-8396-033aceac77e1': best uberblock found for spa $import. txg 2797077
1657533081 spa_misc.c:418:spa_load_note(): spa_load($import, config untrusted): using uberblock with txg=2797077
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 812649440180795162: path changed from '/dev/gptid/b710b131-b6c6-4d51-8396-033aceac77e1' to '/dev/disk/by-partuuid/b710b131-b6c
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 15431760524353817270: path changed from '/dev/gptid/ad246f60-dfa8-47e2-a8dd-499fa7e26fbe' to '/dev/disk/by-partuuid/ad246f60-d
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 408973825519044983: path changed from '/dev/ada5p2' to '/dev/disk/by-partuuid/0fe8ae3b-ed23-4fbc-a98d-24f5a6a389ce'
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 5947065650119764947: path changed from '/dev/gptid/96e51a41-d652-4fa3-8875-b610340cd31c' to '/dev/disk/by-partuuid/96e51a41-d6
1657533081 spa.c:8356:spa_async_request(): spa=$import async request task=2048
1657533081 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): LOADED
1657533081 spa_misc.c:418:spa_load_note(): spa_load($import, config trusted): UNLOADING
1657533081 spa.c:6096:spa_import(): spa_import: importing main-pool
1657533081 spa_misc.c:418:spa_load_note(): spa_load(main-pool, config trusted): LOADING
1657533081 vdev.c:152:vdev_dbgmsg(): disk vdev '/dev/disk/by-partuuid/b710b131-b6c6-4d51-8396-033aceac77e1': best uberblock found for spa main-pool. txg 2797077
1657533081 spa_misc.c:418:spa_load_note(): spa_load(main-pool, config untrusted): using uberblock with txg=2797077
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 812649440180795162: path changed from '/dev/gptid/b710b131-b6c6-4d51-8396-033aceac77e1' to '/dev/disk/by-partuuid/b710b131-b6c
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 15431760524353817270: path changed from '/dev/gptid/ad246f60-dfa8-47e2-a8dd-499fa7e26fbe' to '/dev/disk/by-partuuid/ad246f60-d
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 408973825519044983: path changed from '/dev/ada5p2' to '/dev/disk/by-partuuid/0fe8ae3b-ed23-4fbc-a98d-24f5a6a389ce'
1657533081 vdev.c:2375:vdev_copy_path_impl(): vdev_copy_path: vdev 5947065650119764947: path changed from '/dev/gptid/96e51a41-d652-4fa3-8875-b610340cd31c' to '/dev/disk/by-partuuid/96e51a41-d6
1657533081 spa_misc.c:418:spa_load_note(): spa_load(main-pool, config trusted): read 20 log space maps (20 total blocks - blksz = 131072 bytes) in 1 ms
1657533081 mmp.c:240:mmp_thread_start(): MMP thread started pool 'main-pool' gethrtime 970889996610
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 0, ms_id 13, smp_length 72968, unflushed_allocs 1564672, unflushed_frees 421888, freed 0, def099042816, max size error 17098960896, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 1, ms_id 5, smp_length 171008, unflushed_allocs 0, unflushed_frees 86016, freed 0, defer 0 + 84, max size error 13497503744, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 0, ms_id 14, smp_length 172560, unflushed_allocs 1388544, unflushed_frees 405504, freed 0, de6831516672, max size error 16831438848, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 1, ms_id 13, smp_length 257008, unflushed_allocs 1323008, unflushed_frees 53248, freed 0, def281754624, max size error 10281738240, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 0, ms_id 15, smp_length 192496, unflushed_allocs 36864, unflushed_frees 118784, freed 0, defe70699264, max size error 16770674688, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 0, ms_id 108, smp_length 379680, unflushed_allocs 90112, unflushed_frees 122880, freed 0, def3560823808, max size error 13560766464, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 1, ms_id 16, smp_length 105552, unflushed_allocs 24576, unflushed_frees 135168, freed 0, defe14406912, max size error 17114345472, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 0, ms_id 109, smp_length 127152, unflushed_allocs 159744, unflushed_frees 36864, freed 0, def939684352, max size error 11939667968, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 1, ms_id 67, smp_length 89648, unflushed_allocs 1208320, unflushed_frees 98304, freed 0, defe03343616, max size error 17103282176, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 0, ms_id 113, smp_length 385056, unflushed_allocs 413696, unflushed_frees 106496, freed 0, de3756493824, max size error 13756428288, old_weight 840000000000001, new_weight 840000000000001
1657533081 metaslab.c:2436:metaslab_load_impl(): metaslab_load: txg 2797078, spa main-pool, vdev_id 1, ms_id 68, smp_length 105672, unflushed_allocs 1269760, unflushed_frees 143360, freed 0, de6615645184, max size error 16615571456, old_weight 840000000000001, new_weight 840000000000001
```
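A dump like this is easier to scan when it is split back into entries. Below is a minimal Python sketch (a hypothetical helper, not a ZFS tool) that assumes each entry has the `<timestamp> <file>:<line>:<function>(): <message>` layout seen above. It also copes with a paste whose line breaks were lost, and it pulls out the `spa_load` state changes (LOADING/LOADED/UNLOADING) and the `spa_load_verify` error counts.

```python
import re
from dataclasses import dataclass

# Assumed entry layout: "<unix time> <file.c>:<line>:<function>(): <message>".
# A message runs until the next entry header or the end of the text, so the
# parser also works on a dump whose line breaks were lost when pasted.
ENTRY_RE = re.compile(
    r"(\d{10}) ([\w.]+):(\d+):(\w+)\(\): (.*?)"
    r"(?=\s+\d{10} [\w.]+:\d+:\w+\(\): |\s*$)",
    re.DOTALL,
)
# "spa_load(<pool>, config ...): LOADING|LOADED|UNLOADING"
STATE_RE = re.compile(r"spa_load\(([^,]+), [^)]*\): (LOADING|LOADED|UNLOADING)$")
# "spa_load(<pool>, ...): spa_load_verify found N metadata errors and M data errors"
VERIFY_RE = re.compile(
    r"spa_load\(([^,]+), [^)]*\): spa_load_verify found "
    r"(\d+) metadata errors and (\d+) data errors"
)


@dataclass
class Entry:
    timestamp: int
    source: str    # "file.c:line"
    function: str
    message: str


def parse_dbgmsg(text: str) -> list[Entry]:
    """Split a dbgmsg dump into entries, collapsing whitespace inside messages."""
    return [
        Entry(int(m[1]), f"{m[2]}:{m[3]}", m[4], " ".join(m[5].split()))
        for m in ENTRY_RE.finditer(text)
    ]


def load_states(entries: list[Entry]) -> list[tuple[int, str, str]]:
    """(timestamp, pool, state) for every spa_load state-change note."""
    out = []
    for e in entries:
        m = STATE_RE.search(e.message)
        if m:
            out.append((e.timestamp, m[1], m[2]))
    return out


def verify_errors(entries: list[Entry]) -> list[tuple[str, int, int]]:
    """(pool, metadata_errors, data_errors) from spa_load_verify notes."""
    out = []
    for e in entries:
        m = VERIFY_RE.search(e.message)
        if m:
            out.append((m[1], int(m[2]), int(m[3])))
    return out
```

On a live system it could be fed with `parse_dbgmsg(open("/proc/spl/kstat/zfs/dbgmsg").read())`. Keeping the state changes separate from the verify results makes it easier to see which import attempts of `main-pool` reached LOADED and which stopped partway.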

Output of `iostat -h 3` while `zpool import -f main-pool` hangs:

```
avg-cpu:  %user   %nice %system %iowait  %steal   %idle
           0.0%    0.0%    0.0%    6.2%    0.0%   93.7%

      tps    kB_read/s    kB_wrtn/s    kB_dscd/s    kB_read    kB_wrtn    kB_dscd Device
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k loop0
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k nvme0n1
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k nvme1n1
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k sda
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k sdb
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k sdc
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k sdd
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k sde
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k sdf
     0.00         0.0k         0.0k         0.0k       0.0k       0.0k       0.0k sdg
```
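To check from a saved capture whether any disk is actually being read while the import hangs, a small Python sketch can extract the per-device `tps` column. This is a hypothetical helper, not part of sysstat or ZFS. It assumes the `iostat -h` layout above: a header row starting with `tps`, 8-column device rows with the device name last, and `tps` printed as a plain number.

```python
def parse_iostat_tps(text: str) -> dict[str, float]:
    """Map device name -> tps from `iostat -h` device tables.

    Assumes the layout shown above. When the capture holds several
    reports (e.g. from `iostat -h 3`), later samples overwrite earlier
    ones, so the result reflects the most recent report.
    """
    tps: dict[str, float] = {}
    in_table = False
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "avg-cpu:":
            in_table = False              # each report starts with the CPU section
        elif fields[0] == "tps":
            in_table = True               # device table header row
        elif in_table and len(fields) == 8:
            tps[fields[-1]] = float(fields[0])
    return tps


def idle_devices(tps: dict[str, float]) -> list[str]:
    """Devices that completed no transfers in the sampled interval."""
    return sorted(dev for dev, rate in tps.items() if rate == 0.0)
```

In the capture above, every listed device reports 0.00 tps, so `idle_devices` would return all of them.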

I have tried various methods suggested on forums, but nothing has worked.

Should I just accept that there is no way to recover the pool, or can it still be salvaged?