This is a follow-up to my original post this past Saturday. I am starting this new thread because I have learned a lot of new information over the past few days and have made some progress but have hit a wall.

My hardware setup:

Dell R720xd

128GB ECC memory

SAS9200-8e-hp JBOD controller

SAS9207-8i JBOD controller

My pool setup:

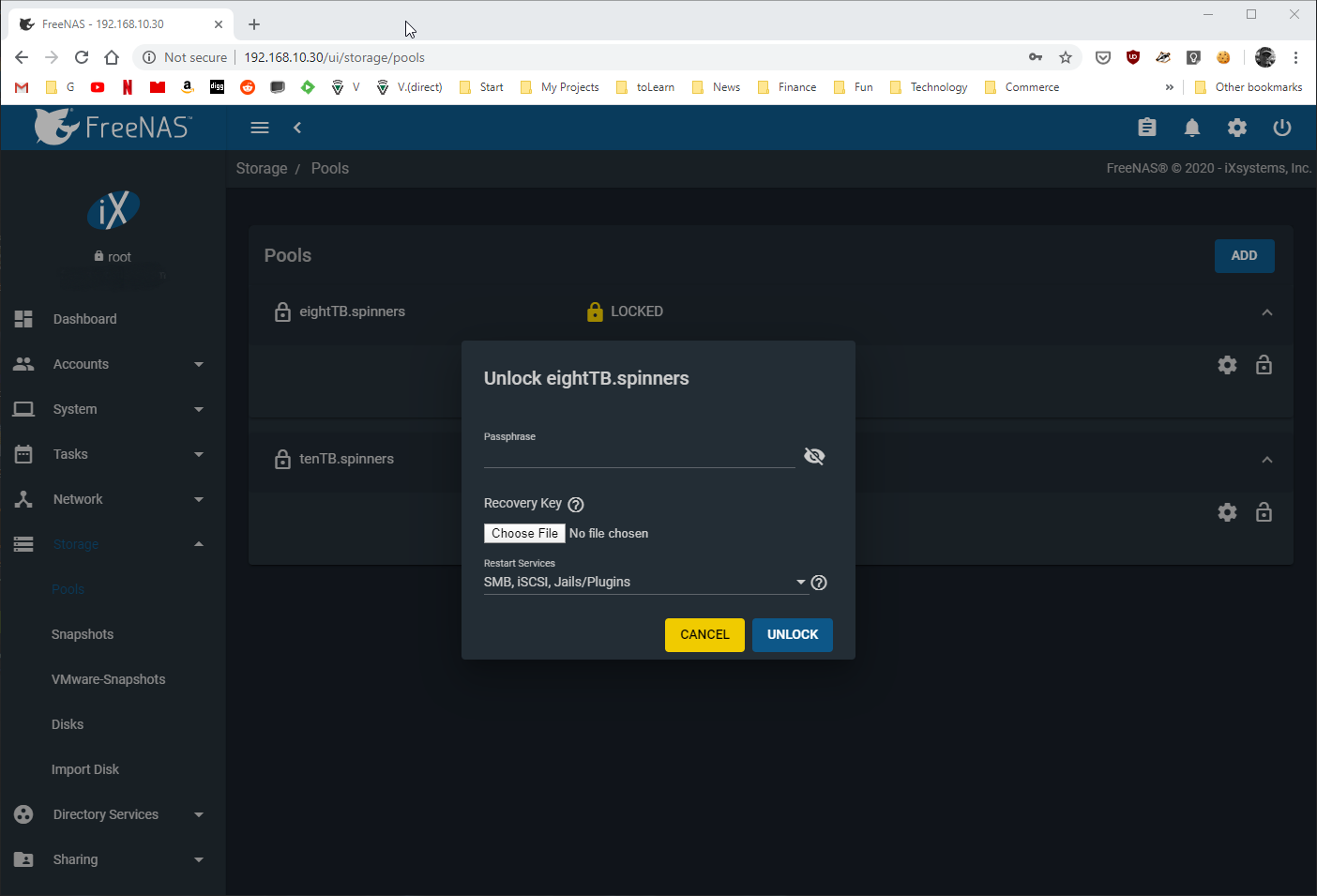

tenTB.spinners (12 10TB drives)... 2x 6-drive raid-z2 striped together | encrypted + password

eightTB.spinners (3 8TB drives)... 1x 3-drive raid-z | encrypted + password

fourTB.spinners... a work in progress when things went wrong | NOT encrypted

What happened:

I extended tenTB.spinners a few weeks ago and had plenty of space, so I thought I would convert the seven drives of fourTB.spinners to a 3x mirrored vdev setup to see if there would be a performance improvement. I should mention that I was having a little difficulty: although all seven drives reported the same size in the webGUI, running geom disk list showed that one was actually 4000785948160 bytes while the others were 4000787030016 bytes. There were a lot of webGUI mouse clicks on this pool, first trying to start with the smaller drive and then adding one of the others (it would not allow this). It would allow 3x 2-drive mirrors striped together with the smaller drive as a spare. I didn't think a rebuild would work, so I think this is when I shut down the machine, pulled a drive, and then started things up (honestly my memory is a little cloudy here, but I DID reboot the machine).
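To put the size mismatch from geom disk list in perspective, here is a small sketch of the arithmetic. The two byte counts are the ones quoted above; the 512-byte sector size and the function name are assumptions for illustration only.

```python
# Byte counts as reported by `geom disk list` in this post.
SMALL_DRIVE = 4000785948160
OTHER_DRIVES = 4000787030016

# Assumption: 512-byte logical sectors; adjust if the drives report 4096.
SECTOR_SIZE = 512


def size_gap(small: int, large: int, sector_size: int = SECTOR_SIZE) -> dict:
    """Return the difference between two drive sizes in several units."""
    diff = large - small
    return {
        "bytes": diff,
        "sectors": diff // sector_size,
        "mib": round(diff / 2**20, 2),
    }


gap = size_gap(SMALL_DRIVE, OTHER_DRIVES)
print(gap)
```

The gap works out to roughly 1 MiB, which is small enough to disappear in the rounded sizes the webGUI shows.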

After I was back up I pretty quickly noticed that tenTB.spinners & eightTB.spinners were locked. This was expected, and I clicked the lock button like I have done many times before so I could enter my password. Unfortunately, this time it threw the following error:

Code:

Error: concurrent.futures.process._RemoteTraceback:

Traceback (most recent call last):

File "/usr/local/lib/python3.7/concurrent/futures/process.py", line 239, in _process_worker

r = call_item.fn(*call_item.args, **call_item.kwargs)

File "/usr/local/lib/python3.7/site-packages/middlewared/worker.py", line 95, in main_worker

res = loop.run_until_complete(coro)

File "/usr/local/lib/python3.7/asyncio/base_events.py", line 579, in run_until_complete

return future.result()

File "/usr/local/lib/python3.7/site-packages/middlewared/worker.py", line 51, in _run

return await self._call(name, serviceobj, methodobj, params=args, job=job)

File "/usr/local/lib/python3.7/site-packages/middlewared/worker.py", line 43, in _call

return methodobj(*params)

File "/usr/local/lib/python3.7/site-packages/middlewared/worker.py", line 43, in _call

return methodobj(*params)

File "/usr/local/lib/python3.7/site-packages/middlewared/schema.py", line 964, in nf

return f(*args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/zfs.py", line 382, in import_pool

zfs.import_pool(found, found.name, options, any_host=any_host)

File "libzfs.pyx", line 369, in libzfs.ZFS.__exit__

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/zfs.py", line 380, in import_pool

raise CallError(f'Pool {name_or_guid} not found.', errno.ENOENT)

middlewared.service_exception.CallError: [ENOENT] Pool 49485231544439643 not found.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/pool.py", line 1656, in unlock

'cachefile': ZPOOL_CACHE_FILE,

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1127, in call

app=app, pipes=pipes, job_on_progress_cb=job_on_progress_cb, io_thread=True,

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1074, in _call

return await self._call_worker(name, *args)

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1094, in _call_worker

return await self.run_in_proc(main_worker, name, args, job)

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1029, in run_in_proc

return await self.run_in_executor(self.__procpool, method, *args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1003, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

middlewared.service_exception.CallError: [ENOENT] Pool 49485231544439643 not found.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/lib/python3.7/site-packages/middlewared/job.py", line 349, in run

await self.future

File "/usr/local/lib/python3.7/site-packages/middlewared/job.py", line 386, in __run_body

rv = await self.method(*([self] + args))

File "/usr/local/lib/python3.7/site-packages/middlewared/schema.py", line 960, in nf

return await f(*args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/pool.py", line 1668, in unlock

raise CallError(msg)

middlewared.service_exception.CallError: [EFAULT] Pool could not be imported: 3 devices failed to decrypt.

I should mention that tenTB.spinners & eightTB.spinners were still showing up in the webGUI. I did not accidentally wipe the drives.

After trying to decrypt several times I posted under another thread with a similar problem. I want to thank the community (especially @PhiloEpisteme) but after trying those suggestions I was still stuck and started doing some additional online research & testing.

The geli attach command:

Whenever I tried this I would get a "Cannot read metadata" error:

Code:

root@freenas[~/downloads]# geli attach -k pool_eightTB.spinners_encryption.key -j pool_eightTB.spinners_encryption.pw /dev/da0
geli: Cannot read metadata from /dev/da0: Invalid argument.

At this point I decided to build a fresh 3-drive raid-z pool using three drives from the old/unused fourTB.spinners pool. After a couple of attempts I WAS able to decrypt the drives individually:

Code:

root@freenas[~/downloads]# ls -al /dev/da*
crw-r----- 1 root operator 0x9e Feb 13 23:02 /dev/da0
crw-r----- 1 root operator 0x9f Feb 13 23:02 /dev/da0p1
crw-r----- 1 root operator 0xa0 Feb 13 23:02 /dev/da0p2
crw-r----- 1 root operator 0xa1 Feb 13 23:02 /dev/da1
crw-r----- 1 root operator 0xb0 Feb 13 23:02 /dev/da1p1
crw-r----- 1 root operator 0xb1 Feb 13 23:02 /dev/da1p2
crw-r----- 1 root operator 0xa2 Feb 13 23:02 /dev/da2
crw-r----- 1 root operator 0xb2 Feb 13 23:02 /dev/da2p1
crw-r----- 1 root operator 0xb3 Feb 13 23:02 /dev/da2p2
crw-r----- 1 root operator 0xa3 Feb 13 23:02 /dev/da3
crw-r----- 1 root operator 0xb4 Feb 13 23:02 /dev/da3p1
crw-r----- 1 root operator 0xb5 Feb 13 23:02 /dev/da3p2
crw-r----- 1 root operator 0xad Feb 13 23:02 /dev/da4
crw-r----- 1 root operator 0xb6 Feb 13 23:02 /dev/da4p1
crw-r----- 1 root operator 0xb7 Feb 13 23:02 /dev/da4p2

Code:

root@freenas[~/downloads]# geli attach -k pool_fourTBs_encryption.key -j pool_fourTBs_encryption.pw /dev/da0
geli: Cannot read metadata from /dev/da0: Invalid argument.
root@freenas[~/downloads]# geli attach -k pool_fourTBs_encryption.key -j pool_fourTBs_encryption.pw /dev/da0p1
geli: Cannot read metadata from /dev/da0p1: Invalid argument.
root@freenas[~/downloads]# geli attach -k pool_fourTBs_encryption.key -j pool_fourTBs_encryption.pw /dev/da0p2
root@freenas[~/downloads]# geli attach -k pool_fourTBs_encryption.key -j pool_fourTBs_encryption.pw /dev/da1p2
root@freenas[~/downloads]# geli attach -k pool_fourTBs_encryption.key -j pool_fourTBs_encryption.pw /dev/da2p2

* The pool_fourTBs_encryption.pw is a single-line text file with the password.

* After decrypting the drives I was able to import the pool in the webGUI by Pool -> Add -> Import -> "No" to decrypt (select unencrypted) -> select pool in dropdown.

* Note that geli will not decrypt with /dev/da0 or /dev/da0p1 but it WILL decrypt /dev/da0p2.

*** The key is the "p2" at the end ***
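Since the attach only works against the p2 partition of each disk, the per-disk commands can be generated rather than typed by hand. This is a minimal sketch that only builds and prints the command lines (it does not run geli); the -k and -j flags and the file names are the ones used above, while the function name and the disk list are illustrative assumptions.

```python
import shlex


def geli_attach_commands(disks, keyfile, passfile, partition="p2"):
    """Build one `geli attach` command line per disk, targeting the data partition."""
    commands = []
    for disk in disks:
        provider = f"/dev/{disk}{partition}"
        argv = ["geli", "attach", "-k", keyfile, "-j", passfile, provider]
        commands.append(shlex.join(argv))
    return commands


# Assumption: da0..da2 are the pool members, as in the test pool above.
cmds = geli_attach_commands(
    ["da0", "da1", "da2"],
    "pool_fourTBs_encryption.key",
    "pool_fourTBs_encryption.pw",
)
for cmd in cmds:
    print(cmd)
```

Printing the commands first makes it easy to double-check the device names before anything touches the drives.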

Now I am pretty sure my problem can be summarized with one command:

In this case /dev/da0, /dev/da1 & /dev/da2 are the three 8TB drives that make up eightTB.spinners:

Code:

root@freenas[~]# ls -al /dev/da*
crw-r----- 1 root operator 0x9e Feb 13 15:29 /dev/da0
crw-r----- 1 root operator 0x9f Feb 13 15:29 /dev/da1
crw-r----- 1 root operator 0xa0 Feb 13 15:29 /dev/da2
crw-r----- 1 root operator 0xa1 Feb 13 15:29 /dev/da3
crw-r----- 1 root operator 0xa3 Feb 13 15:29 /dev/da3p1
crw-r----- 1 root operator 0xa4 Feb 13 15:29 /dev/da3p2
crw-r----- 1 root operator 0xa2 Feb 13 15:29 /dev/da4
crw-r----- 1 root operator 0xae Feb 13 15:29 /dev/da4p1
crw-r----- 1 root operator 0xaf Feb 13 15:29 /dev/da4p2

Where are my *p1 & *p2 partitions??

The 3 drives that make up eightTB.spinners and the 12 drives that make up tenTB.spinners are missing these partitions. What the heck!!
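With 15+ drives it is easy to miss one when eyeballing the listing, so here is a rough sketch that groups the device nodes by disk and reports which disks expose no pN partitions. The node list is copied from the ls output above; the helper names and the daN/daNpM naming pattern are assumptions for illustration.

```python
import re

# Device nodes taken from the `ls -al /dev/da*` listing in this post.
DEVICE_NODES = [
    "/dev/da0", "/dev/da1", "/dev/da2",
    "/dev/da3", "/dev/da3p1", "/dev/da3p2",
    "/dev/da4", "/dev/da4p1", "/dev/da4p2",
]

# Matches whole disks (/dev/da3) and their partitions (/dev/da3p2).
NODE_RE = re.compile(r"^/dev/(da\d+)(p\d+)?$")


def partitions_by_disk(nodes):
    """Map each whole disk (e.g. 'da3') to the list of its partition suffixes."""
    disks = {}
    for node in nodes:
        match = NODE_RE.match(node)
        if not match:
            continue  # ignore anything that is not a daN or daNpM node
        disk, part = match.groups()
        parts = disks.setdefault(disk, [])  # list the disk even with no partitions
        if part:
            parts.append(part)
    return disks


def disks_without_partitions(nodes):
    """Return disks that expose no pN partition nodes, in lexicographic order."""
    return sorted(d for d, parts in partitions_by_disk(nodes).items() if not parts)


missing = disks_without_partitions(DEVICE_NODES)
print(missing)
```

On this listing it flags da0, da1 and da2, which are the three eightTB.spinners drives.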

I searched/learned a little about GPT partitions and there is a command to recover lost partitions: gpart recover

Unfortunately I either don't know how to use it correctly or it will not work for me:

Code:

root@freenas[~]# gpart recover /dev/da0
gpart: arg0 'da0': Invalid argument
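For background: a GPT keeps its primary header in LBA 1 and a backup header in the last LBA of the disk, and each header starts with the ASCII signature "EFI PART". Below is a minimal read-only sketch of checking for both. It is demonstrated on a small synthetic image file, not on one of the real drives, and the function names, the 512-byte sector size, and passing the media size in by hand (for example from geom disk list) are all assumptions for illustration.

```python
import os
import tempfile

GPT_SIGNATURE = b"EFI PART"


def has_gpt_header(path, lba, sector_size=512):
    """Return True if the sector at `lba` starts with the GPT signature."""
    # Unbuffered, whole-sector read: raw disk devices generally expect
    # sector-aligned I/O. The file is opened read-only.
    with open(path, "rb", buffering=0) as dev:
        dev.seek(lba * sector_size)
        return dev.read(sector_size).startswith(GPT_SIGNATURE)


def check_gpt(path, total_bytes, sector_size=512):
    """Look for the primary (LBA 1) and backup (last LBA) GPT headers."""
    last_lba = total_bytes // sector_size - 1
    return {
        "primary": has_gpt_header(path, 1, sector_size),
        "backup": has_gpt_header(path, last_lba, sector_size),
    }


# Demo on a synthetic 64-sector image where only the backup header survives.
SECTORS = 64
image = bytearray(SECTORS * 512)
backup_offset = (SECTORS - 1) * 512
image[backup_offset:backup_offset + len(GPT_SIGNATURE)] = GPT_SIGNATURE

with tempfile.NamedTemporaryFile(delete=False) as img:
    img.write(image)
    image_path = img.name

result = check_gpt(image_path, SECTORS * 512)
os.remove(image_path)
print(result)
```

If only the backup header turned up on a real drive, that would suggest the start of the disk was overwritten while the end survived. The check itself changes nothing on disk.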

So this is where I am right now. There are some risky ideas on FreeBSD forums using the dd command but I thought I would reach out and see if anybody had any other ideas.

I will post some additional commands I ran on the subject system in a follow-up post.
