ArsenalNAS

Cadet

- Joined

- Apr 21, 2022

- Messages

- 3

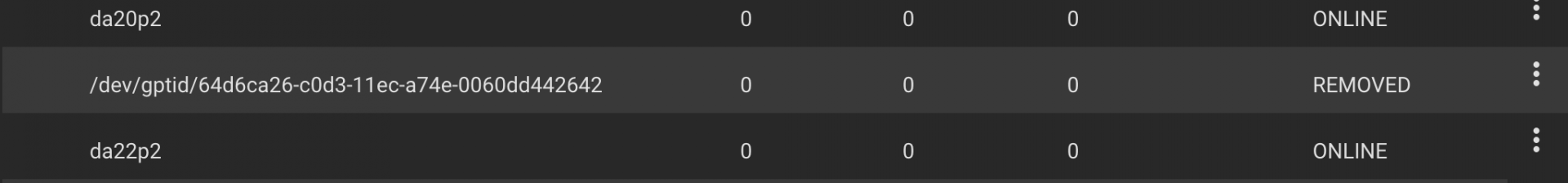

I am new to this forum. I am running TrueNAS CORE 12.0 U6 with 22 drives (2 groups of 11). I have replaced drives previously, but I am now running into a problem with rebuilding this time around. Pool usage is high (~95%) and I now have 2 troubled drives in the pool. Both questionable drives are in the same group. When I offline a drive and replace it with a new one, the process looks standard at first. I see the new drive show up in the replacement pulldown. I start the replacement process (resilver), and shortly thereafter the system pauses and then continues. If I refresh after that pause, the replacement disk is now listed as "REMOVED", with its gptid string shown.

I have not found a way to get the system to make another attempt at using the replacement disk. Once the UI has been refreshed, the system has labeled that disk as REMOVED and will not proceed. The drive has been marked out. If I put in another drive, I can start the process again. However, I have done that, and the second replacement disk failed in the same way.

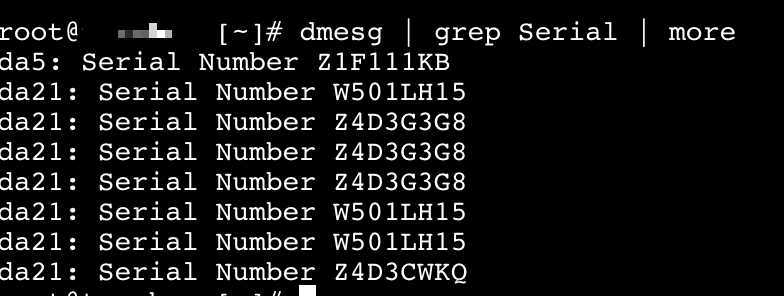

When I check the console and the disk serial history for da21, I can see the different disks that failed during the replacement attempts.

I would appreciate any insight into how best to resolve this issue.
