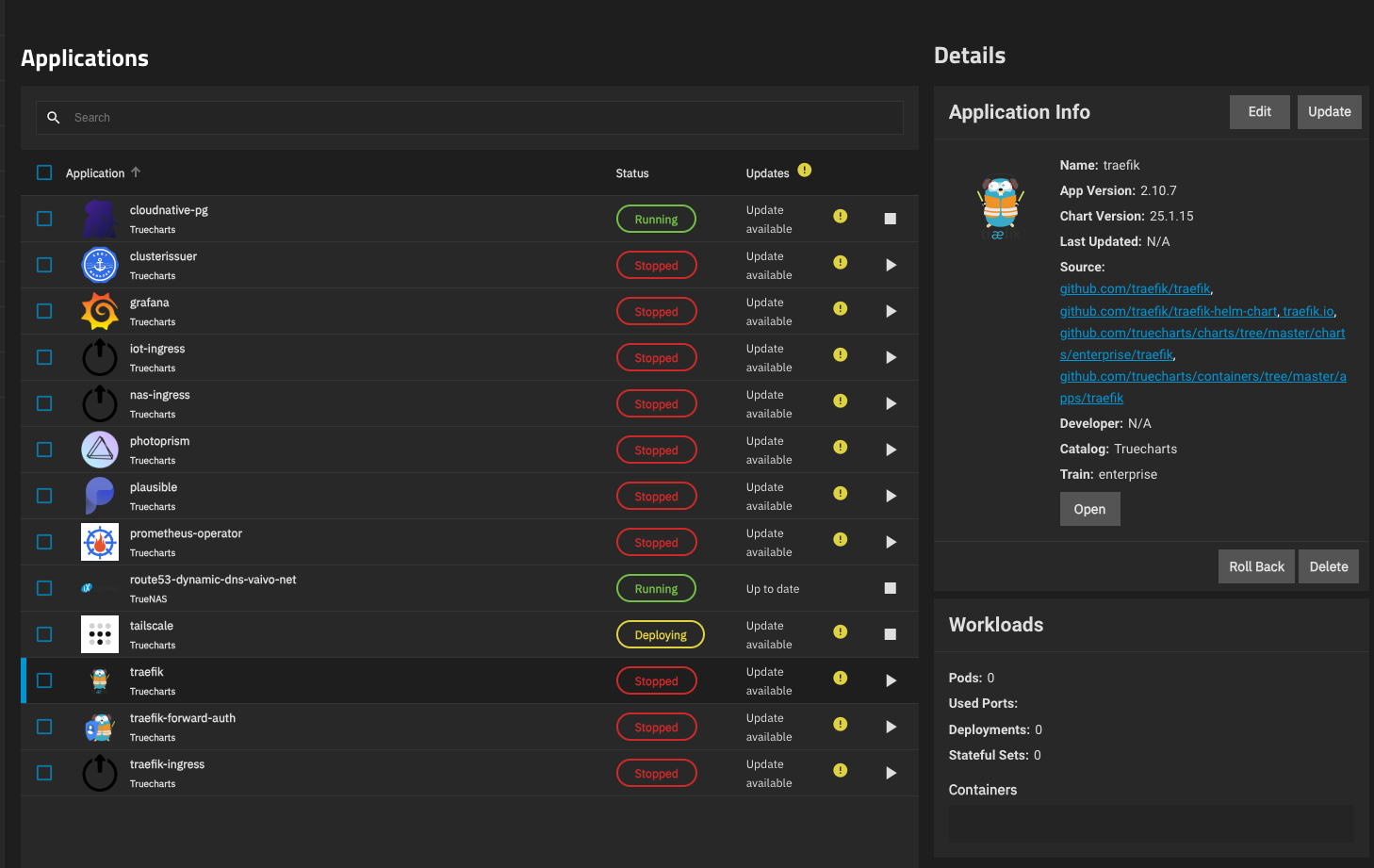

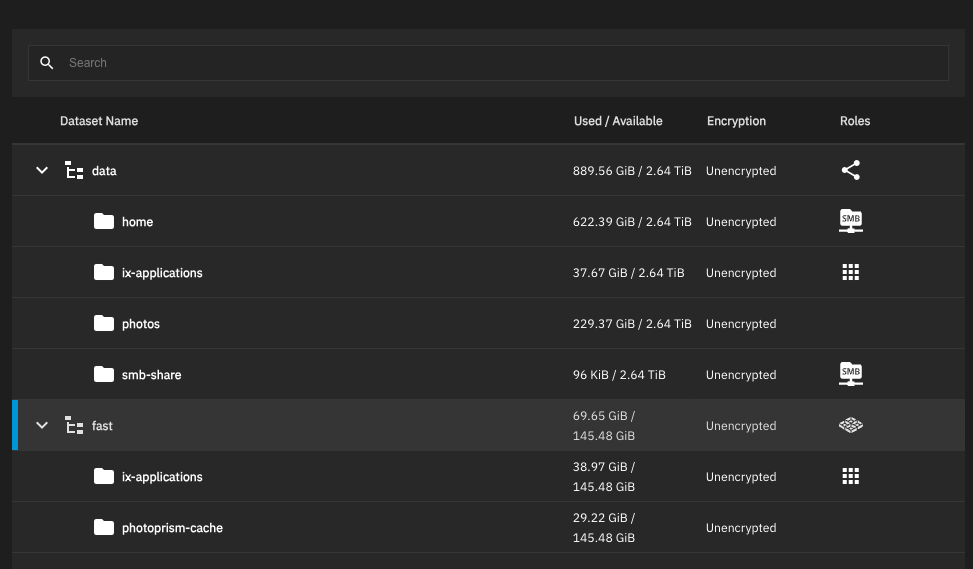

I created a new pool and wanted to migrate my applications to it. I went through the standard process: I changed the pool and checked the "migrate applications" checkbox.

The new pool was created, and I see the apps in the apps list, but they're not running. I checked Kubernetes: all the namespaces are there, but they don't contain the manifests. There are no deployments and no pods.

Code:

    root@nas[/mnt/fast/ix-applications/k3s/agent]# k3s kubectl get ns
    NAME                               STATUS   AGE
    default                            Active   28m
    kube-system                        Active   28m
    kube-public                        Active   28m
    kube-node-lease                    Active   28m
    openebs                            Active   28m
    ix-nas-ingress                     Active   28m
    ix-photoprism                      Active   28m
    ix-prometheus-operator             Active   28m
    ix-cloudnative-pg                  Active   28m
    ix-plausible                       Active   28m
    ix-tailscale                       Active   28m
    ix-route53-dynamic-dns-vaivo-net   Active   28m
    ix-traefik-forward-auth            Active   28m
    ix-clusterissuer                   Active   28m
    ix-grafana                         Active   28m
    ix-traefik-ingress                 Active   28m
    ix-traefik                         Active   28m
    ix-iot-ingress                     Active   28m
    root@nas[/mnt/fast/ix-applications/k3s/agent]# k3s kubectl -n kube-system get pods
    NAME                                   READY   STATUS    RESTARTS      AGE
    csi-nfs-node-x65hr                     3/3     Running   6 (13m ago)   28m
    csi-smb-node-lwf2f                     3/3     Running   6 (13m ago)   28m
    snapshot-controller-546868dfb4-88l2f   1/1     Running   0             15m
    snapshot-controller-546868dfb4-xw9tx   1/1     Running   0             15m
    csi-smb-controller-7fbbb8fb6f-q5f7z    3/3     Running   0             15m
    csi-nfs-controller-7b74694749-pgq84    4/4     Running   0             15m
    intel-gpu-plugin-86gg9                 1/1     Running   0             12m
    openebs-zfs-node-9nzrm                 2/2     Running   0             12m
    openebs-zfs-controller-0               5/5     Running   0             12m
    coredns-59b4f5bbd5-t8jw2               1/1     Running   0             15m
    root@nas[/mnt/fast/ix-applications/k3s/agent]# k3s kubectl -n ix-traefik get all
    No resources found in ix-traefik namespace.
    root@nas[/mnt/fast/ix-applications/k3s/agent]# k3s kubectl -n ix-photoprism get pvc
    No resources found in ix-photoprism namespace.
    root@nas[/mnt/fast/ix-applications/k3s/agent]# k3s kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                                     STORAGECLASS                  REASON   AGE
    pvc-f429f3a6-bbbc-485e-a862-6b23003ea2a1   100Gi      RWO            Retain           Available   ix-photoprism/photoprism-mariadb-data     ix-storage-class-photoprism            29m
    pvc-3611bb0f-d109-4728-b082-fc8f65c5dce8   256Gi      RWO            Retain           Available   ix-photoprism/photoprism-photoprismtemp   ix-storage-class-photoprism            29m
    pvc-814e2113-e051-4407-af4c-8f9a690bb565   256Gi      RWO            Retain           Available   ix-photoprism/photoprism-storage          ix-storage-class-photoprism            29m
    pvc-e9fc01d8-275a-4577-9aff-6e3751164d29   256Gi      RWO            Retain           Available   ix-photoprism/photoprism-import           ix-storage-class-photoprism            29m
    pvc-a8ed48ba-4543-4426-9e2b-3334cb64800b   256Gi      RWO            Retain           Available   ix-photoprism/photoprism-originals        ix-storage-class-photoprism            29m
    pvc-ca539be9-082b-4d62-9bc7-80b03fc58d23   100Gi      RWO            Retain           Available   ix-plausible/plausible-clickhouse-data    ix-storage-class-plausible             29m
    pvc-fe65693a-548a-4bec-a2ce-cab002573a2b   10Gi       RWO            Retain           Available   ix-plausible/plausible-cnpg-main-1-wal    ix-storage-class-plausible             29m
    pvc-50be389c-2e74-4ec9-a33c-d9ea841f7e90   10Gi       RWO            Retain           Available   ix-plausible/plausible-cnpg-main-1        ix-storage-class-plausible             29m
    pvc-f761419a-b0f0-42ae-804b-43bd951c9f08   4Gi        RWO            Retain           Available   ix-grafana/grafana-data                   ix-storage-class-grafana               29m
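
To repeat the per-namespace check for every app at once, here is a minimal sketch that loops over all `ix-*` namespaces and prints how many resources `get all` returns in each. The function names and the `KUBECTL` variable are made up for illustration; the only real commands it relies on are `k3s kubectl get ns` and `k3s kubectl -n <namespace> get all`, as used above.

```shell
#!/bin/sh
# Sketch: count the resources `get all` reports in each ix-* namespace.
# KUBECTL is a hypothetical override; the default matches the commands above.
KUBECTL="${KUBECTL:-k3s kubectl}"

# Print the names of all app namespaces (those starting with "ix-").
list_app_namespaces() {
    $KUBECTL get ns --no-headers -o custom-columns=NAME:.metadata.name | grep '^ix-'
}

# Print the number of resources `get all` returns for namespace $1.
# "No resources found" goes to stderr, so an empty namespace counts as 0.
count_resources() {
    $KUBECTL -n "$1" get all --no-headers 2>/dev/null | wc -l | tr -d ' '
}

# Print one "<namespace> <count>" line per app namespace.
report() {
    for ns in $(list_app_namespaces); do
        printf '%s %s\n' "$ns" "$(count_resources "$ns")"
    done
}
```

After sourcing the file, calling `report` prints one line per namespace. A count of 0 for every `ix-*` namespace would match what I see by hand: the namespaces exist, but nothing is deployed in them.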

Fortunately, switching back to the old pool `data` still works, so I didn't lose my entire setup, but I want to migrate to the pool `fast`. I tried replicating the dataset myself before migrating, but then zfs can't mount it for k3s. I tried migrating, unsetting the pool, creating an additional replication task, and then starting again, with no luck either.

Code:

    root@nas[/mnt/fast/ix-applications/k3s/agent]# service k3s status
    ● k3s.service - Lightweight Kubernetes
         Loaded: loaded (/lib/systemd/system/k3s.service; disabled; preset: disabled)
         Active: active (running) since Tue 2024-02-06 12:54:57 CET; 16min ago
           Docs: https://k3s.io
        Process: 12483 ExecStartPre=/sbin/modprobe br_netfilter (code=exited, status=0/SUCCESS)
        Process: 12484 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)
       Main PID: 12485 (k3s-server)
          Tasks: 207
         Memory: 860.4M
            CPU: 3min 12.924s
         CGroup: /system.slice/k3s.service
                 ├─12485 "/usr/local/bin/k3s server"
                 ├─12584 "containerd "
                 ├─12810 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id 71a08d8da2fa825ed1fd03b733bef5>
                 ├─12861 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id cb12137cf14d20ae984a755a880358>
                 ├─13472 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id 74ef7710b896c803aa61e151f577d6>
                 ├─13618 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id f97d941f8cd1b9bd13ac416e48bf9e>
                 ├─13719 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id a9578f5b1d2e2a050cf4cfa953467b>
                 ├─13766 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id 28415c03a0810d5a98316442033fdc>
                 ├─13884 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id a6a123137928086f9be3b0daf0d87c>
                 ├─14127 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id 71fa368acf7cadc92c9dc9e93e0f59>
                 ├─14150 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id 81f9a02e7ca520c8728a097c2938c3>
                 ├─14336 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id 246e99ca1575aa24394d17ea36d1c1>
                 ├─14402 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id ce8d1adeaaa278eb05d624cccd38c1>
                 ├─15055 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id 91b057fd1c7c7eb18b3c0360711fc1>
                 └─15385 /mnt/fast/ix-applications/k3s/data/57b473e21324ddd4e4d6824d4c3de8330433a7e6046df9f46dd0fd77184ca16d/bin/containerd-shim-runc-v2 -namespace k8s.io -id 8848bed8513c6c046a7cb9599d18ce>

    Feb 06 13:07:04 nas k3s[12485]: {"level":"warn","ts":"2024-02-06T13:07:04.711+0100","logger":"etcd-client","caller":"v3@v3.5.7-k3s1/retry_interceptor.go:62","msg":"retrying of unary invoker failed","ta>
    Feb 06 13:07:35 nas k3s[12485]: {"level":"warn","ts":"2024-02-06T13:07:35.997+0100","logger":"etcd-client","caller":"v3@v3.5.7-k3s1/retry_interceptor.go:62","msg":"retrying of unary invoker failed","ta>
    Feb 06 13:08:17 nas k3s[12485]: {"level":"warn","ts":"2024-02-06T13:08:17.880+0100","logger":"etcd-client","caller":"v3@v3.5.7-k3s1/retry_interceptor.go:62","msg":"retrying of unary invoker failed","ta>
    Feb 06 13:08:57 nas k3s[12485]: {"level":"warn","ts":"2024-02-06T13:08:57.334+0100","logger":"etcd-client","caller":"v3@v3.5.7-k3s1/retry_interceptor.go:62","msg":"retrying of unary invoker failed","ta>
    Feb 06 13:09:33 nas k3s[12485]: {"level":"warn","ts":"2024-02-06T13:09:33.934+0100","logger":"etcd-client","caller":"v3@v3.5.7-k3s1/retry_interceptor.go:62","msg":"retrying of unary invoker failed","ta>
    Feb 06 13:09:53 nas k3s[12485]: time="2024-02-06T13:09:53+01:00" level=info msg="COMPACT compactRev=3588 targetCompactRev=4259 currentRev=5259"
    Feb 06 13:09:53 nas k3s[12485]: time="2024-02-06T13:09:53+01:00" level=info msg="COMPACT deleted 671 rows from 671 revisions in 25.945728ms - compacted to 4259/5259"
    Feb 06 13:10:12 nas k3s[12485]: {"level":"warn","ts":"2024-02-06T13:10:12.839+0100","logger":"etcd-client","caller":"v3@v3.5.7-k3s1/retry_interceptor.go:62","msg":"retrying of unary invoker failed","ta>
    Feb 06 13:10:43 nas k3s[12485]: {"level":"warn","ts":"2024-02-06T13:10:43.396+0100","logger":"etcd-client","caller":"v3@v3.5.7-k3s1/retry_interceptor.go:62","msg":"retrying of unary invoker failed","ta>
    Feb 06 13:11:19 nas k3s[12485]: {"level":"warn","ts":"2024-02-06T13:11:19.564+0100","logger":"etcd-client","caller":"v3@v3.5.7-k3s1/retry_interceptor.go:62","msg":"retrying of unary invoker failed","ta>

I'd appreciate any ideas, because I can't see what could be going on.