DAXQ | Contributor | Joined: Sep 5, 2014 | Messages: 106

Morning all. I have a FreeNAS build on an older IBM server:

- OS Version: FreeNAS-11.3-U1
- Model: System x3650 M3 -[7945AC1]-
- Memory: 24 GiB
- Drives: 6 × HGST Travelstar 7K1000 2.5-inch 1TB 7200 RPM SATA III (32MB cache)
- Layout: RAIDZ3 pool of 5 disks, plus 1 spare

---

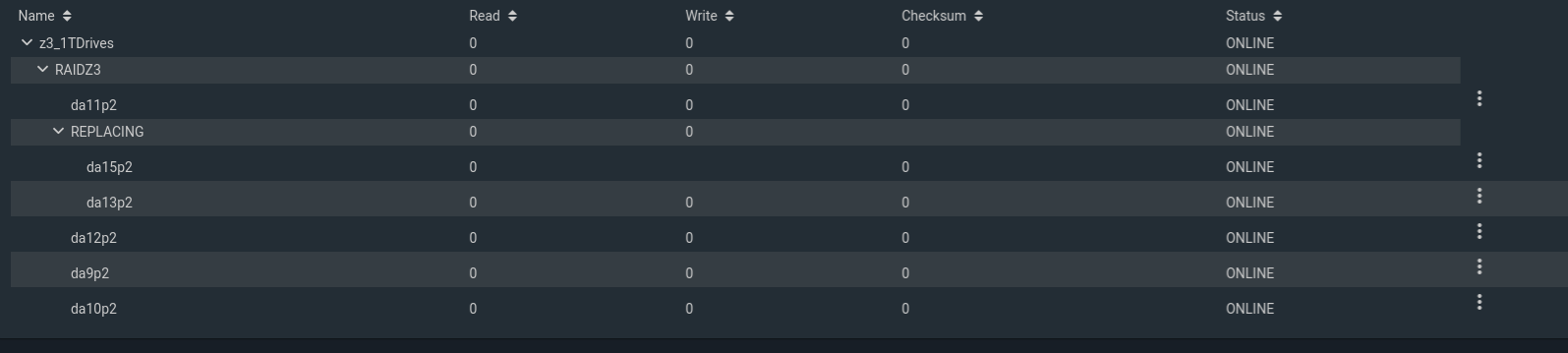

One of the disks in the pool started giving me errors (failed SMART, currently unreadable sectors, etc.), so I decided to replace it. Since my 6th drive was already in the server and online (but not in use), I used it to replace the failing disk: I went to Storage > Pools > gear icon > Status, clicked the three vertical dots for the failing disk, and selected Replace. In the dialog I selected my 6th disk from the drop-down and clicked Replace.

The system started the replacement. That was 7 days ago.

Output of `zpool status -x`:

```
  pool: z3_1TDrives
 state: ONLINE
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Thu Dec 24 08:08:36 2020
        2.02T scanned at 669M/s, 243G issued at 78.5M/s, 2.70T total
        47.4G resilvered, 8.77% done, 0 days 09:08:55 to go
config:

        NAME                                              STATE     READ WRITE CKSUM
        z3_1TDrives                                       ONLINE       0     0     0
          raidz3-0                                        ONLINE       0     0     0
            gptid/804a0592-62f6-11ea-9204-5cf3fcba544c    ONLINE       0     0     0
            replacing-1                                   ONLINE       0     0    12
              gptid/806a11e8-62f6-11ea-9204-5cf3fcba544c  ONLINE       0   330     0
              gptid/10d452b4-43ba-11eb-8926-5cf3fcba544c  ONLINE       0     0     0
            gptid/81f193c9-62f6-11ea-9204-5cf3fcba544c    ONLINE       0     0     0
            gptid/818034fa-62f6-11ea-9204-5cf3fcba544c    ONLINE       0     0     0
            gptid/8267798a-62f6-11ea-9204-5cf3fcba544c    ONLINE       0     0     0

errors: No known data errors
```
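As a rough sanity check on the progress figures in that output, here is the arithmetic under two assumptions: that zpool reports binary units (1T = 1024G, 1G = 1024M) and that it estimates time remaining as the bytes still to be issued divided by the current issue rate.

$$
\frac{243\,\mathrm{G}}{2.70\,\mathrm{T}} = \frac{243}{2.70 \times 1024} = \frac{243}{2764.8} \approx 8.8\%
$$

$$
t_{\text{remaining}} \approx \frac{(2764.8 - 243)\,\mathrm{G} \times 1024\,\mathrm{M/G}}{78.5\,\mathrm{M/s}} = \frac{2\,582\,323\,\mathrm{M}}{78.5\,\mathrm{M/s}} \approx 32\,896\,\mathrm{s} \approx 9.14\,\mathrm{h}
$$

Both agree with the reported 8.77% and 09:08:55 to within the rounding of the displayed values.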

It just keeps looping: it reaches a different percentage each time, the amount resilvered goes up and then drops back down, and it never finishes.
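One way to document the looping from the shell rather than by watching the GUI is to save the output of `zpool status z3_1TDrives` every so often and compare the "resilvered" figure between samples. The sketch below is hypothetical: the function names `parse_progress` and `find_restarts` are made up for illustration, and it assumes the progress line keeps the `47.4G resilvered, 8.77% done` format from the output in this post and that sizes use 1024-based units. It flags every sample where the resilvered amount went down, which would suggest the resilver started over.

```python
import re
from typing import List, Optional, Tuple

# Assumption: zpool prints sizes with 1024-based unit suffixes.
UNITS = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4, "P": 1024**5}

# Matches a progress line such as "47.4G resilvered, 8.77% done, ..."
PROGRESS_RE = re.compile(r"([\d.]+)([KMGTP]?) resilvered, ([\d.]+)% done")


def to_bytes(value: str, unit: str) -> float:
    """Convert a zpool size such as ('47.4', 'G') to bytes."""
    return float(value) * UNITS[unit]


def parse_progress(status_text: str) -> Optional[Tuple[float, float]]:
    """Return (resilvered_bytes, percent_done) from zpool status text, or None."""
    match = PROGRESS_RE.search(status_text)
    if match is None:
        return None
    return to_bytes(match.group(1), match.group(2)), float(match.group(3))


def find_restarts(samples: List[str]) -> List[int]:
    """Return indices of samples whose resilvered amount dropped versus the previous sample."""
    restarts = []
    previous = None
    for index, text in enumerate(samples):
        parsed = parse_progress(text)
        if parsed is None:
            # Sample without a resilver progress line; skip it.
            continue
        resilvered, _percent = parsed
        if previous is not None and resilvered < previous:
            restarts.append(index)
        previous = resilvered
    return restarts
```

For example, `parse_progress("47.4G resilvered, 8.77% done, 0 days 09:08:55 to go")` returns the resilvered amount in bytes (about 47.4 GiB) together with `8.77`.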

I have a second pool of smaller drives in the same server, so I am currently copying my data to it, expecting that I'll have to destroy this pool and rebuild it without the bad drive (if that's my only option). Does anyone have an idea what may have happened, whether I did something incorrectly, or whether there is a better way to resolve this without completely rebuilding the pool?

Thanks in advance for any advice.
