Since finding out I should be using ZVOL-based iSCSI LUNs for my VMs instead of file extents, I've been running into issues making the switch.

For one, the FN server spontaneously reboots during heavy I/O. It may be a hardware issue on my end, but I'm curious whether anyone else has run into the same problem.

The second is more confusing. Why doesn't the RRD graph report iSCSI traffic when the LUN is on a ZVOL, when it does for file-extent-based LUNs?

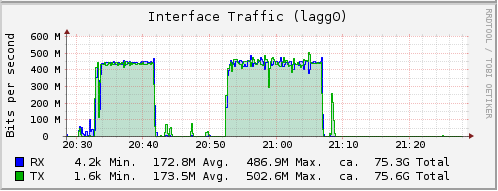

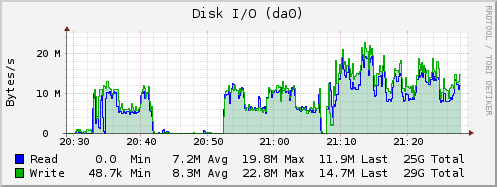

Attached are the two graphs from my migration from a file extent LUN to a ZVOL. You can see from the disk graph that the drives are still being written to, but the network graph shows ZERO TRAFFIC.

Network traffic is network traffic, no? Why should it matter whether I'm accessing a block device or a file?
