thirdgen89gta

Dabbler

- Joined

- May 5, 2014

- Messages

- 32

EDIT: I apologize, I accidentally posted this in the wrong sub-forum. If any mods want to move it to the General Questions forum, I am okay with that.

Oddball question. I have been searching for about 30 minutes, reading through various threads on the internet, and I haven't seen this question come up before. If it has been asked, it tends to get buried in the L2ARC and SLOG threads.

Here is my server as an example.

I have two pools in my FreeNAS server.

Pool 1, named "Loki", is 6x 256GB SSDs in RAIDZ1. It's used for smaller, personal files. I store my Time Machine backups on it, along with personal files (taxes, pictures, boring stuff). I also store all of the software installers I've accumulated over the years there. It also hosts my jails and the Plex Media Server databases. Aside from the Plex database and jail stuff, it's really not accessed all that often. It's usually write once, read rarely. I only used RAIDZ1 to get more storage out of the more expensive SSDs. I have a complete backup of all the important stuff from this pool in Google Drive, and it's also on other machines thanks to Google Drive Backup & Sync.

Pool 2, named "Odin", is 6x 8TB drives in RAIDZ2. This is where I store movies, TV series, etc. I store the movies as full-quality rips; I don't transcode. This means the average Blu-ray rip takes up about 20GB, and the 4K stuff averages about 50GB per movie. TV shows tend to be about 6-10GB per episode for a one-hour show.

As my server has only 32GB of memory, I was thinking that because Loki is made purely of SSDs, I may not need ARC on it. Has anyone else looked at how effective ARC is when it's caching from an SSD-based pool? Given an SSD's random read speed compared to a spinning drive, I'm wondering if the ARC would be better used if I tell ZFS not to cache the SSD pool's data in ARC and instead let it dedicate the ARC to the platter-based pool.

So what I'd like to know is whether other users have disabled ARC on an SSD pool so it can devote more of the ARC to the slower spinning-disk pools.
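For anyone who wants to try the same thing, the knob I'm aware of is the per-dataset `primarycache` property, which accepts `all`, `metadata`, or `none`. This is only a sketch: it assumes the pool root datasets are named `Loki` and `Odin` as above and that the child datasets inherit the setting. I have not measured the effect, so treat it as an experiment and compare ARC stats before and after.

```shell
# Show the current ARC (primarycache) and L2ARC (secondarycache) policy
# for the root dataset of each pool.
zfs get primarycache,secondarycache Loki Odin

# Keep only metadata from the SSD pool in ARC; file data is then read
# from the SSDs on every access instead of being cached in RAM.
zfs set primarycache=metadata Loki

# Go back to the inherited/default behaviour if it turns out to hurt.
zfs inherit primarycache Loki
```

Setting `primarycache=none` would stop caching metadata as well, which is the more drastic version of the same experiment.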

__________

As a side note, I decided to go experimenting last week and added both a SLOG and an L2ARC to the platter pool. As expected, when I set sync=always the write performance went down the toilet. It wasn't horrible, but the SSD I had available just wasn't good enough to handle SLOG duties. While it's still attached to the pool, I have sync=standard set right now, and when copying files it's really not using the SLOG anymore. I'll probably remove it from the pool this week.
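Roughly what checking and undoing the SLOG experiment looks like from the shell. This is a sketch, not a procedure: the pool name `Odin` and the log device name `da5` are taken from the pool listing in this post, and on FreeNAS it may be preferable to remove the device through the GUI instead.

```shell
# Check which sync policy the pool's root dataset is using.
zfs get sync Odin

# Watch per-vdev activity every 5 seconds while copying a file (Ctrl-C to stop).
# The log device's row shows whether the SLOG is receiving any writes.
zpool iostat -v Odin 5

# Detach the log device from the pool.
zpool remove Odin da5
```

With `sync=standard`, only writes that the application requests as synchronous go through the log device, which would fit with plain file copies not touching it.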

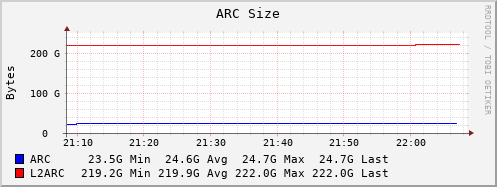

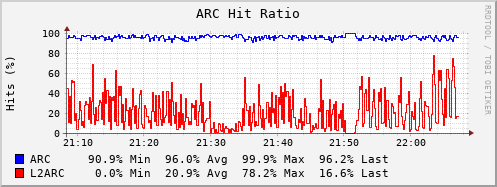

For my second experiment I added a 256GB SSD as an L2ARC to the 6x 8TB RAIDZ2 pool. I expected that my ARC would go to crap because I only have 32GB of RAM. Instead, I'm finding that my ARC hit rate has barely dropped, and the L2ARC is actually getting some hits, though so far its hit rate is terrible. I thought that adding the L2ARC would cause the ARC hit rate to drop dramatically, since the ARC has to store the tables (headers) for the L2ARC, but that's not what is happening. Maybe the L2ARC hit rate will go up as it learns, and maybe as it learns it will impact the ARC and cause more ARC misses. It's no big deal to remove the L2ARC from the pool, so it doesn't hurt to experiment with it.

I pulled some stats for people to look over if they are interested. Tell me how wrong I am, or that maybe I'm reading it right and the L2ARC is just not hurting my ARC that much because of the way I use the NAS.
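A rough sanity check on the "L2ARC tables eating the ARC" worry, using figures copied from the ZFS stats report in this post (ARC current size, L2ARC header size, L2ARC hits and total accesses). The function name `l2arc_check` is made up for this example; it only does arithmetic and does not query ZFS.

```shell
#!/bin/sh
# Figures copied by hand from the ZFS stats report in this post.
arc_size_mb=25305.80   # ARC Size: Current Size (arcsize)
l2_hdr_mb=144.84       # L2 ARC Size: Header Size
l2_hits=2619342        # L2 ARC Breakdown: Hit Ratio count
l2_access=35359754     # L2 ARC Breakdown: Access Total

# Hypothetical helper: prints how much of the ARC the L2ARC headers
# occupy, and the L2ARC hit ratio, both as percentages.
l2arc_check() {
    awk -v arc="$arc_size_mb" -v hdr="$l2_hdr_mb" \
        -v hits="$l2_hits" -v total="$l2_access" 'BEGIN {
        printf "L2ARC headers as share of ARC: %.2f%%\n", 100 * hdr / arc
        printf "L2ARC hit ratio: %.2f%%\n", 100 * hits / total
    }'
}

l2arc_check
```

With these numbers the headers come out at about 0.57% of the current ARC, which would fit with the ARC hit rate barely moving. The hit ratio comes out at 7.41%, while the report shows 7.40%, which looks like truncation rather than rounding.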

___________


Code:

    NAME                                             SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG   CAP  DEDUP  HEALTH  ALTROOT
    Loki                                            1.38T   868G   548G        -         -    6%   61%  1.00x  ONLINE  /mnt
      raidz1                                        1.38T   868G   548G        -         -    6%   61%
        gptid/cbdb1b59-ea49-11e8-827f-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/cc3a233b-ea49-11e8-827f-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/cc90bda8-ea49-11e8-827f-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/ccf18666-ea49-11e8-827f-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/cd539d8c-ea49-11e8-827f-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/cdad167f-ea49-11e8-827f-408d5cb9e8ed      -      -      -        -         -     -     -
    Odin                                            43.5T  27.5T  16.0T        -         -    0%   63%  1.00x  ONLINE  /mnt
      raidz2                                        43.5T  27.5T  16.0T        -         -    0%   63%
        gptid/ce154254-811b-11e8-8fe5-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/cec15941-811b-11e8-8fe5-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/cf690916-811b-11e8-8fe5-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/d0128439-811b-11e8-8fe5-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/d1527ef9-811b-11e8-8fe5-408d5cb9e8ed      -      -      -        -         -     -     -
        gptid/d1fece30-811b-11e8-8fe5-408d5cb9e8ed      -      -      -        -         -     -     -
    log                                                 -      -      -        -         -     -
      da5                                            238G      0   238G        -         -    0%    0%
    cache                                               -      -      -        -         -     -
      da14                                           233G   219G  13.5G        -         -    0%   94%

_______

ZFS Stats.

Code:

    ZFS Subsystem Report                            Mon Dec 3 21:54:39 2018
    ------------------------------------------------------------------------
    ARC Misc:
      Deleted:                                          13857380
      Recycle Misses:                                   0
      Mutex Misses:                                     12720
      Evict Skips:                                      12720

    ARC Size:
      Current Size (arcsize):                   86.63%  25305.80M
      Target Size (Adaptive, c):                86.62%  25303.89M
      Min Size (Hard Limit, c_min):             13.13%  3837.03M
      Max Size (High Water, c_max):             ~7:1    29209.87M

    ARC Size Breakdown:
      Recently Used Cache Size (p):             48.02%  12153.49M
      Frequently Used Cache Size (arcsize-p):   51.97%  13152.30M

    ARC Hash Breakdown:
      Elements Max:                                     2406551
      Elements Current:                         96.77%  2328900
      Collisions:                                       14013052
      Chain Max:                                        0
      Chains:                                           450605

    ARC Eviction Statistics:
      Evicts Total:                                     1006527600128
      Evicts Eligible for L2:                   95.05%  956788835840
      Evicts Ineligible for L2:                 4.94%   49738764288
      Evicts Cached to L2:                              915848913920

    ARC Efficiency
      Cache Access Total:                               1864707625
      Cache Hit Ratio:                          97.15%  1811642854
      Cache Miss Ratio:                         2.84%   53064771
      Actual Hit Ratio:                         97.08%  1810307057
      Data Demand Efficiency:                   99.28%
      Data Prefetch Efficiency:                 21.26%

      CACHE HITS BY CACHE LIST:
        Most Recently Used (mru):               2.98%   54062632
        Most Frequently Used (mfu):             96.94%  1756244425
        MRU Ghost (mru_ghost):                  0.03%   709024
        MFU Ghost (mfu_ghost):                  0.03%   652236

      CACHE HITS BY DATA TYPE:
        Demand Data:                            11.97%  216947014
        Prefetch Data:                          0.14%   2714010
        Demand Metadata:                        87.85%  1591608776
        Prefetch Metadata:                      0.02%   373054

      CACHE MISSES BY DATA TYPE:
        Demand Data:                            2.94%   1562902
        Prefetch Data:                          18.94%  10051593
        Demand Metadata:                        76.73%  40719826
        Prefetch Metadata:                      1.37%   730450
    ------------------------------------------------------------------------
    L2 ARC Summary:
      Low Memory Aborts:                                0
      R/W Clashes:                                      0
      Free on Write:                                    36954

    L2 ARC Size:
      Current Size: (Adaptive)                          225946.18M
      Header Size:                              0.06%   144.84M

    L2 ARC Evicts:
      Lock Retries:                                     93
      Upon Reading:                                     0

    L2 ARC Read/Write Activity:
      Bytes Written:                                    563159.28M
      Bytes Read:                                       319988.39M

    L2 ARC Breakdown:
      Access Total:                                     35359754
      Hit Ratio:                                7.40%   2619342
      Miss Ratio:                               92.59%  32740412
      Feeds:                                            718345

      WRITES:
        Sent Total:                             100.00% 124466
    ------------------------------------------------------------------------
