Emile.Belcourt (Dabbler, joined Feb 16, 2021, 23 messages)

Hello all,

I've had this issue twice now in the past month and I think I'm going to pre-emptively cycle around some disks as quite a few of my disks are from the same build date, but back to the issue. I first get an alarm that a disk is causing degraded IO performance:

This error (and the one on the previous occasion) preceded an irrecoverable error five minutes later:

In this situation, all service activity continues fine and I prepare to replace the disk, crack out a new one and get it in its sled. I aim to go through the recommended procedure which is to OFFLINE the disk ready for replacement, eject the disk, put in new one, go to replace in the pool for the failed disk, it's replaced and resilvering starts no problem.

However, I press OFFLINE and it just hangs with the twirly gig with no response from the console (directly), via SSH or via the webdmin. I cannot get any response from anything relying on middlewared (which is practically everything) and no zpool status information.

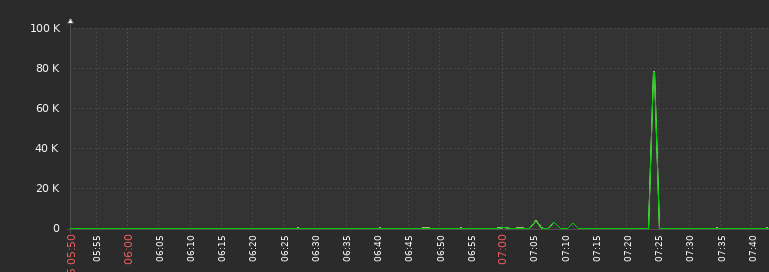

I then notice I start getting Zabbix monitoring outage failures for my VMWare Cluster where VMs are no longer responding to heartbeat messages, I can initiate an RDP session but cannot complete the connection. Then I notice the ESXi disk monitoring showing *Zero* activity meaning the iSCSI link had stopped responding, it was active but nothing was going in or out. The latency briefly spike to ~80,000ms and then nothing:

The fluctuation at 07:00 is when the storage server stated it failed to read SMART values from the failing disk:

The first time this issue happened, I went to the storage server and all but one of the disk activity lights were solid and I could hear every disk running at full pelt but then the disk that had failed was dark. So I broke procedure and yanked the disk, all activity returned to normal, the storage server unlocked itself and all services returned to normal.

This time, I was not in the office and I just had to wait it out and after half an hour i got:

Attached are excerpts of the log data at the time of attempting to OFFLINE the faulting disk:

To summarise all of the above:

Why is OFFLINE-ing a failed/degraded disking causing the iSCSI service to stop responding and subsequently cause issues to our VMWare server? This time, it corrupted a VM and thankfully no changes had been made since the 4am backup so I was able to restore it without any service disruption but it's still highly concerning. It could be I'm asking the wrong question and OFFLINE-ing the disk was not the cause, only part of the symptomatic issues with the disk array going unhealthy, but it's too coincidental that on two occasions a disk degrades, faults, I OFFLINE it and then iSCSI (and the TrueNAS as well) goes unresponsive and causes issues for the virtual host.

Hopefully I've managed to provide enough information to help with analysis! :)

Many thanks,

Emile

I've had this issue twice in the past month. Quite a few of my disks share the same build date, so I think I'm going to pre-emptively cycle some of them out, but back to the issue. First, I get an alert that a disk is causing degraded I/O performance:

Device /dev/gptid/ff61160b-72c0-11eb-8b6f-0cc47a63b90c is causing slow I/O on pool Warm_HDD_Pool

This alert (as on the previous occasion) was followed five minutes later by an unrecoverable-error alert:

Pool Warm_HDD_Pool state is ONLINE: One or more devices has experienced an unrecoverable error. An attempt was made to correct the error. Applications are unaffected

At this point all services are still running fine, so I prepare to replace the disk: I crack out a new one and get it into its sled. I intend to follow the recommended procedure: OFFLINE the disk ready for replacement, eject it, insert the new one, then use Replace on the failed disk in the pool, after which it is replaced and resilvering starts without issue.
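The offline-then-replace flow described above corresponds roughly to the zpool subcommands below. This is a minimal dry-run sketch that only builds the command lines and never runs them; the device names are hypothetical placeholders, and on TrueNAS the web UI (which also takes care of partitioning the new disk) rather than raw zpool is the supported path, so treat this as illustrative only.

```python
from typing import List


def replacement_plan(pool: str, old_dev: str, new_dev: str) -> List[List[str]]:
    """Return (but do not run) the zpool commands for an offline-then-replace swap."""
    return [
        # 1. Take the failing member out of service before pulling it.
        ["zpool", "offline", pool, old_dev],
        # 2. (Physically swap the disk in its sled here; no command.)
        # 3. Replace the old member with the new disk; this starts the resilver.
        ["zpool", "replace", pool, old_dev, new_dev],
        # 4. Check resilver progress.
        ["zpool", "status", pool],
    ]


if __name__ == "__main__":
    # Hypothetical device names: substitute the real gptid/... labels.
    for cmd in replacement_plan("Warm_HDD_Pool", "gptid/OLD-MEMBER", "gptid/NEW-MEMBER"):
        print(" ".join(cmd))
```

If a disk ends up detached instead of replaced, `zpool attach pool existing_device new_device` is the command that turns a single-disk vdev back into a mirror.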

However, I press OFFLINE and it just hangs with the twirly gig with no response from the console (directly), via SSH or via the webdmin. I cannot get any response from anything relying on middlewared (which is practically everything) and no zpool status information.

I then start getting Zabbix outage alerts for my VMware cluster: VMs are no longer responding to heartbeat messages, and I can initiate an RDP session but cannot complete the connection. Then I notice the ESXi disk monitoring showing *zero* activity, meaning the iSCSI link had stopped responding: it was still active, but nothing was going in or out. Latency briefly spiked to ~80,000 ms and then dropped to nothing:

The fluctuation at 07:00 is when the storage server reported that it had failed to read SMART values from the failing disk:

Device: /dev/da3, failed to read SMART values.

The first time this happened, I went to the storage server: all but one of the disk activity lights were solid and I could hear every disk running at full pelt, but the light for the failed disk was dark. So I broke procedure and yanked the disk; activity returned to normal, the storage server unlocked itself and all services recovered.

This time I was not in the office, so I just had to wait it out. After half an hour I got:

- smartd is not running.

- Pool Warm_HDD_Pool state is DEGRADED: One or more devices has experienced an unrecoverable error. An attempt was made to correct the error. Applications are unaffected.

  The following devices are not healthy:

  - Disk SEAGATE ST4000NM0023 Z1Z8GHLL0000C536E5P6 is OFFLINE

Services had returned to normal, but the UI still showed the disk as ONLINE. At that point I left it until I was in the office so I could prepare the replacement disk. I mistakenly used Detach instead of Replace, but either way I was able to get the vdev back into a mirror without any problems and it started resilvering.

Attached are excerpts of the logs from when I attempted to OFFLINE the faulting disk:

- daemon.log: this one starts by creating a case file very early on, but later messages show iSCSI errors.

- messages: this log is the most damning. Very early on it shows da3 (the disk attached to the mpr0 HBA, i.e. the LSI 3008) reporting hardware write faults and retrying the same write:

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): WRITE(10). CDB: 2a 00 47 63 40 28 00 00 20 00

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): CAM status: SCSI Status Error

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): SCSI status: Check Condition

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): SCSI sense: HARDWARE FAILURE asc:3,0 (Peripheral device write fault)

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): Info: 0x47634028

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): Field Replaceable Unit: 8

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): Command Specific Info: 0x7004f00

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): Actual Retry Count: 23

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): Descriptor 0x80: 00 00 00 00 00 00 00 00 00 00 00 00 00 00

May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): Retrying command (per sense data)

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): WRITE(10). CDB: 2a 00 47 63 40 28 00 00 20 00

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): CAM status: SCSI Status Error

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): SCSI status: Check Condition

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): SCSI sense: HARDWARE FAILURE asc:3,0 (Peripheral device write fault)

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): Info: 0x47634028

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): Field Replaceable Unit: 8

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): Command Specific Info: 0x7004f00

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): Actual Retry Count: 23

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): Descriptor 0x80: 00 00 00 00 00 00 00 00 00 00 00 00 00 00

May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): Retrying command (per sense data)

- middlewared.log: nothing special, apart from web UI/socket errors because everything was locking up.
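The CAM lines in the messages excerpt can be read mechanically. Below is a minimal Python sketch, assuming the FreeBSD syslog layout shown above, that groups WRITE(10) attempts by device and CDB and decodes the CDB (opcode 0x2A, 4-byte big-endian LBA in bytes 2 to 5, 2-byte transfer length in bytes 7 to 8). The regex and function names are hypothetical helpers, not part of any TrueNAS tool. On this excerpt it shows the same 32-block write on da3 being retried about 3 seconds apart, with the decoded LBA matching the `Info:` value.

```python
from __future__ import annotations

import re
from collections import defaultdict
from datetime import datetime

# Excerpt from the messages log above (two attempts at the same write).
LOG = """\
May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): WRITE(10). CDB: 2a 00 47 63 40 28 00 00 20 00
May 25 06:41:58 stor-01.domain.com (da3:mpr0:0:11:0): Info: 0x47634028
May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): WRITE(10). CDB: 2a 00 47 63 40 28 00 00 20 00
May 25 06:42:01 stor-01.domain.com (da3:mpr0:0:11:0): Info: 0x47634028
"""

# "<timestamp> <host> (<periph>:<sim>:<bus>:<target>:<lun>): <message>"
LINE_RE = re.compile(
    r"^(?P<ts>\w{3} +\d+ \d\d:\d\d:\d\d) \S+ "
    r"\((?P<periph>[a-z]+\d+):(?P<sim>[a-z]+\d+):\d+:\d+:\d+\): (?P<msg>.*)$"
)


def decode_write10(cdb_hex: str) -> tuple[int, int]:
    """Return (LBA, transfer length in blocks) from a WRITE(10) CDB."""
    cdb = bytes.fromhex(cdb_hex)
    if len(cdb) != 10 or cdb[0] != 0x2A:
        raise ValueError(f"not a WRITE(10) CDB: {cdb_hex}")
    lba = int.from_bytes(cdb[2:6], "big")
    blocks = int.from_bytes(cdb[7:9], "big")
    return lba, blocks


def write_attempts(log: str) -> dict[tuple[str, str], list[datetime]]:
    """Group WRITE(10) attempts by (device, CDB); repeated entries are retries."""
    attempts: dict[tuple[str, str], list[datetime]] = defaultdict(list)
    for line in log.splitlines():
        m = LINE_RE.match(line)
        if not m or not m["msg"].startswith("WRITE(10). CDB: "):
            continue
        cdb = m["msg"].split("CDB: ", 1)[1]
        # syslog omits the year; 2022 is taken from the zpool status output.
        ts = datetime.strptime(f"2022 {m['ts']}", "%Y %b %d %H:%M:%S")
        attempts[(m["periph"], cdb)].append(ts)
    return dict(attempts)


def info_values(log: str) -> set[int]:
    """Collect the hex values reported on 'Info:' lines."""
    values: set[int] = set()
    for line in log.splitlines():
        m = LINE_RE.match(line)
        if m and m["msg"].startswith("Info: "):
            values.add(int(m["msg"].split()[1], 16))
    return values


if __name__ == "__main__":
    infos = info_values(LOG)
    for (dev, cdb), times in write_attempts(LOG).items():
        lba, blocks = decode_write10(cdb)
        gaps = [(b - a).total_seconds() for a, b in zip(times, times[1:])]
        print(f"{dev}: LBA {lba:#x} x {blocks} blocks, {len(times)} attempts, "
              f"gaps {gaps} s, matches Info: {lba in infos}")
```

Each attempt already reports a drive-side retry count of 23, so a single stuck write can hold up I/O to that mirror for a while; on FreeBSD, how long CAM keeps retrying is governed by the `kern.cam.da.retry_count` and `kern.cam.da.default_timeout` sysctls, which may be worth checking.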

My setup:

- X10DRU-i+

- Dual Xeon E5-2620 v3

- 128GB DDR4-2166 ECC

- LSI 3008 HBA

- SAS 6 Gbps backplane (I know, it's a PITA)

- 12x ES.3 4TB SAS HDDs as a stripe of 6 mirrored-pair vdevs

- 16GB Optane SLOG

- 2TB NVMe cache (L2ARC)

- TrueNAS Core 12.0-U8
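With 12 disks arranged as six two-way mirrors, the pool keeps running as long as every mirror still has one healthy member, and any single failure leaves that mirror with no redundancy until the resilver finishes (which is why several same-batch disks failing close together is the real worry). A small Python model of that rule; the disk names are hypothetical, and it only covers the data vdevs, not the SLOG or cache.

```python
from __future__ import annotations

# Hypothetical layout: six two-way mirrors built from disk0..disk11.
POOL: dict[str, tuple[str, ...]] = {
    f"mirror-{i}": (f"disk{2 * i}", f"disk{2 * i + 1}") for i in range(6)
}
DISK_TB = 4  # 4TB drives, as in the setup above


def pool_available(pool: dict[str, tuple[str, ...]], failed: set[str]) -> bool:
    """A stripe survives only if every mirror vdev keeps at least one healthy disk."""
    return all(any(d not in failed for d in members) for members in pool.values())


def exposed_vdevs(pool: dict[str, tuple[str, ...]], failed: set[str]) -> list[str]:
    """Mirrors running on a single healthy disk, i.e. with no redundancy left."""
    return [
        name for name, members in pool.items()
        if sum(d not in failed for d in members) == 1
    ]


def usable_tb(pool: dict[str, tuple[str, ...]], disk_tb: int = DISK_TB) -> int:
    """Capacity before ZFS overhead: one disk's worth per two-way mirror."""
    return len(pool) * disk_tb


if __name__ == "__main__":
    print(usable_tb(POOL))
    # One failed disk: pool still up, but its mirror has no redundancy left.
    print(pool_available(POOL, {"disk2"}), exposed_vdevs(POOL, {"disk2"}))
    # Both halves of mirror-1 gone: the whole pool is lost.
    print(pool_available(POOL, {"disk2", "disk3"}))
```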

zpool status output during the resilver:

- pool: Warm_HDD_Pool
- state: ONLINE
- status: One or more devices is currently being resilvered. The pool will continue to function, possibly in a degraded state.
- action: Wait for the resilver to complete.
- scan: resilver in progress since Wed May 25 10:04:20 2022; 11.8T scanned at 1.43G/s, 9.78T issued at 1.19G/s, 11.8T total; 15.4G resilvered, 83.16% done, 00:28:22 to go

config:

| NAME | STATE | READ | WRITE | CKSUM |
|---|---|---|---|---|
| Warm_HDD_Pool | ONLINE | 0 | 0 | 0 |
| mirror-0 | ONLINE | 0 | 0 | 0 |
| gptid/005c7367-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| gptid/01411de8-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| mirror-1 | ONLINE | 0 | 0 | 0 |
| gptid/00176797-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| gptid/a83c83bb-dc09-11ec-8b33-0cc47a63b90c (resilvering) | ONLINE | 0 | 0 | 0 |
| mirror-2 | ONLINE | 0 | 0 | 0 |
| gptid/01abc09b-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| gptid/01d48b31-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| mirror-3 | ONLINE | 0 | 0 | 0 |
| gptid/7bf2ef84-d05d-11ec-8b33-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| gptid/017356ce-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| mirror-4 | ONLINE | 0 | 0 | 0 |
| gptid/017ddc83-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| gptid/01a302f7-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| mirror-5 | ONLINE | 0 | 0 | 0 |
| gptid/02634146-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| gptid/9690208f-478c-11ec-9686-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| logs | | | | |
| gptid/0152abbd-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |
| cache | | | | |
| gptid/026cf090-72c1-11eb-8b6f-0cc47a63b90c | ONLINE | 0 | 0 | 0 |

errors: No known data errors
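As a sanity check on the scan line, the time remaining can be estimated as (total - issued) / issue rate, assuming zpool's T and G suffixes are binary (1T = 1024G) and that its estimate is based on the issue rate rather than the scan rate. This minimal sketch only reproduces that arithmetic; a small mismatch with the reported 00:28:22 and 83.16% is expected because the displayed figures are rounded.

```python
def resilver_eta_seconds(total_t: float, issued_t: float, issue_rate_g_s: float) -> float:
    """Seconds left if the remaining data is issued at the current rate."""
    remaining_g = (total_t - issued_t) * 1024  # binary suffixes: 1T = 1024G
    return remaining_g / issue_rate_g_s


def percent_done(total_t: float, issued_t: float) -> float:
    """Share of the total that has been issued so far."""
    return 100 * issued_t / total_t


if __name__ == "__main__":
    # Figures from the scan line above.
    eta = resilver_eta_seconds(11.8, 9.78, 1.19)
    mins, secs = divmod(round(eta), 60)
    print(f"~{mins} min {secs} s left, {percent_done(11.8, 9.78):.1f}% done")
```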

To summarise all of the above:

Why does OFFLINE-ing a failed/degraded disk cause the iSCSI service to stop responding, and in turn cause problems for our VMware server? This time it corrupted a VM. Thankfully no changes had been made since the 4 am backup, so I was able to restore it without any service disruption, but it's still highly concerning. It could be that I'm asking the wrong question and OFFLINE-ing the disk was not the cause, just one more symptom of the disk array going unhealthy, but it seems too coincidental that on two occasions a disk degrades, faults, I OFFLINE it, and then iSCSI (and the TrueNAS box as well) goes unresponsive and causes issues for the virtual host.

Hopefully I've managed to provide enough information to help with analysis! :)

Many thanks,

Emile