beaster


I have a working Truenas 12.0U3 installation.

The installation is set up as follows:

(note I used this reference documentation https://www.truenas.com/community/threads/how-to-setup-vlans-within-freenas-11-3.81633/ )

Switch => LAG (gig24 + gig 25) => Trunk vlan 1-1000 => TRUENAS Server

VLAN 1 is the native VLAN on the trunk (untagged)

VLAN 1 has no IP information on the switch side

VLAN 1 has no IP information on the TRUENAS server side

VLAN 3 is tagged on the TRUNK

VLAN 3 has a /24 IP interface on the switch side

VLAN 3 carries the management interface for the TRUENAS Server, the default gateway, and a summary route for 10/8 pointing to the same gateway

I have a Firewall VM operating with 3 NIC interfaces mapped to 3 Bridges on the TRUENAS SERVER

The PFSense firewall has Bridge 400 (WAN), BRIDGE 2 (LAN) and BRIDGE 12 (DMZ)

The firewall works perfectly well in this setup

The TRUENAS Server has a TRUNK LAG with 6 VLANs on it.

The TRUENAS Server has Bridge 400 mapped to VLAN 400 on the LAG

VLAN Tag: 400 VLAN Parent Interface: lagg0

The TRUENAS Server has Bridge 2 mapped to VLAN 2 on the LAG

VLAN Tag: 2 VLAN Parent Interface: lagg0

The TRUENAS Server has Bridge 12 mapped to VLAN 12 on the LAG

VLAN Tag: 12 VLAN Parent Interface: lagg0

In addition, the TRUENAS Server has Bridge 14 mapped to VLAN 14 on the LAG

VLAN Tag: 14 VLAN Parent Interface: lagg0
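To make the layout easier to check at a glance, here is a small Python sketch that restates the VLAN-to-bridge mapping above as data. This is only a summary for discussion, not something TRUENAS itself uses. The bridge names (`bridge400` and so on) and the assumption that VLAN 3 also sits on `lagg0` are mine, and the native VLAN 1 is left out because it carries no IP configuration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Vlan:
    tag: int
    parent: str            # VLAN parent interface
    bridge: Optional[str]  # bridge the VLAN is a member of, if any (names are hypothetical)
    role: str


# Layout as described in the post. The parent of VLAN 3 is assumed to be lagg0.
LAYOUT = [
    Vlan(3, "lagg0", None, "TRUENAS management"),
    Vlan(400, "lagg0", "bridge400", "firewall WAN"),
    Vlan(2, "lagg0", "bridge2", "firewall LAN"),
    Vlan(12, "lagg0", "bridge12", "firewall DMZ"),
    Vlan(14, "lagg0", "bridge14", "local subnet, no IP yet"),
]


def bridge_for(tag: int, layout: List[Vlan] = LAYOUT) -> Optional[str]:
    """Return the bridge a VLAN tag is mapped to, or None if it has no bridge."""
    for vlan in layout:
        if vlan.tag == tag:
            return vlan.bridge
    raise KeyError(f"VLAN {tag} is not in the layout")


def duplicate_tags(layout: List[Vlan] = LAYOUT) -> List[int]:
    """Return VLAN tags that appear more than once on the same parent interface."""
    seen = set()
    dupes = []
    for vlan in layout:
        key = (vlan.parent, vlan.tag)
        if key in seen:
            dupes.append(vlan.tag)
        seen.add(key)
    return dupes
```

Calling `bridge_for(14)` gives the bridge for VLAN 14, while `bridge_for(3)` returns `None` because the management VLAN carries its IP directly on the VLAN interface.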

VLAN 14 has no IP address initially; however, I want to add a local one to that VLAN for local ARP and IP traffic on that subnet.
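To illustrate what I expect a local address on VLAN 14 to achieve, here is a minimal Python sketch of longest-prefix route selection. It is not how TRUENAS implements routing, only the general rule that the most specific matching route wins. The /24 mask on VLAN 14 and the next-hop labels are assumptions for illustration.

```python
import ipaddress

# Routes as described in the post, plus the connected route that a local
# address on VLAN 14 would add. The /24 on VLAN 14 is an assumption.
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "gateway on VLAN 3"),
    (ipaddress.ip_network("10.0.0.0/8"), "gateway on VLAN 3"),
    (ipaddress.ip_network("10.10.3.0/24"), "directly connected, VLAN 3"),
    (ipaddress.ip_network("10.10.14.0/24"), "directly connected, VLAN 14"),
]


def lookup(destination, routes=ROUTES):
    """Return the next hop of the most specific route that contains destination."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in routes if addr in net]
    # The default route always matches, so matches is never empty here.
    _net, hop = max(matches, key=lambda item: item[0].prefixlen)
    return hop
```

With a connected /24 on VLAN 14, a host such as 10.10.14.50 would be reached directly on that VLAN, while any other 10.x address would still go through the gateway on VLAN 3 via the 10/8 summary route.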

If I try to add an IP address on the TRUENAS server to more than one VLAN (VLAN 3 holds the first IP address, and in this case I tried to add one to the VLAN 14 interface), then after committing the changes the switch/server connection destabilizes.

I lose all connections other than the one to the TRUENAS Server on VLAN 3, and I suspect this is because the ARP entry is still cached on the switch.

The IP switch, in this case a Meraki device, loses all other connectivity to the firewall and other hosts on all other VLANs

The only thing that I can see that is immediately obvious is that all the VLAN IP interfaces on the TRUENAS server have the same MAC address.

However the Bridge Interfaces have unique MAC addresses for each Bridge Interface.

What I noted when I added the IP address for VLAN 14 was that the MAC address shown for VLAN 14, with the new IP address for the TRUENAS, was the same as the one for VLAN 3

However the whole setup failed shortly afterwards.

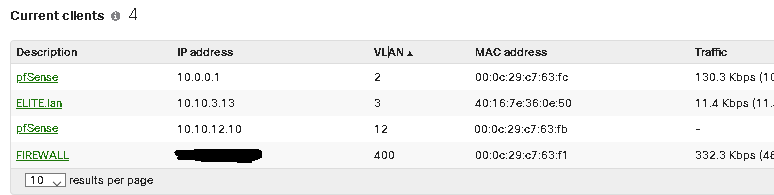

The clients table for the port shows the following for a short time.

IP address 10.10.3.13 on VLAN 3 mac address 40:16:7e:36:0e:50

IP address 10.10.14.2 on VLAN 14 mac address 40:16:7e:36:0e:50
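As a quick way to spot this pattern in a larger clients table, here is a small Python sketch that groups entries by MAC address and reports any MAC seen on more than one VLAN. The table format (VLAN, IP, MAC tuples) is my own assumption; the Meraki dashboard does not necessarily export data in this shape. It only surfaces the shared MAC and does not establish whether that is the cause of the outage.

```python
from collections import defaultdict

# Entries copied from the clients table above as (vlan, ip, mac).
CLIENTS = [
    (3, "10.10.3.13", "40:16:7e:36:0e:50"),
    (14, "10.10.14.2", "40:16:7e:36:0e:50"),
]


def macs_on_multiple_vlans(clients):
    """Map each MAC seen on more than one VLAN to the sorted list of those VLANs."""
    vlans_by_mac = defaultdict(set)
    for vlan, _ip, mac in clients:
        # Normalise case so the same MAC is not counted twice.
        vlans_by_mac[mac.lower()].add(vlan)
    return {
        mac: sorted(vlans)
        for mac, vlans in vlans_by_mac.items()
        if len(vlans) > 1
    }
```

For the two entries above, the function reports the single MAC together with VLANs 3 and 14.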

I am wondering if the TRUENAS Server is the issue here.

My question is as follows:

I want to set up an IP on other VLANs to talk locally to that subnet (i.e. without using the default gateway/route)

When adding a second local IP address for a VLAN, should I be adding that IP address to

a) The Bridge 14 Interface (which has a unique MAC)

or

b) The VLAN 14 Interface. (which has a shared MAC)

or

c) I should be investigating Jails for this requirement

I am trying to figure out how TRUENAS deals with switching between the VLAN interface and the bridge, and whether that is even possible with the default packages/build in 12.0U3

My next option is to pull out a packet sniffer; however, if there is a quicker solution here I would be keen to test it out.
