Apparently, I'm the jackass who sees the entire class waiting to walk in & tries to open the door anyway.

I thought I understood how to read hardware requirements & determine compatibility. I've connected 7 NVMe devices, all x4, to an i7-8700K (which has only 16 PCIe lanes on the CPU). Granted, 4 of them were on an x16 RAID card (HighPoint SSD7120). Ultimately I rejected a consumer system, an i7-9800X (44 PCIe lanes!) on a Gigabyte X299 Designare motherboard (many x16 slots, integrated 10GBase-T & TB3), because it lacked ECC.

But then I decided on an R730xd (80 PCIe lanes from its two E5-2600 v3 CPUs, 40 per socket), which seemed like a good deal with more than enough lanes. I thought it would at least be adequate for ~12 NVMe drives. 80 lanes?! How could it not be?

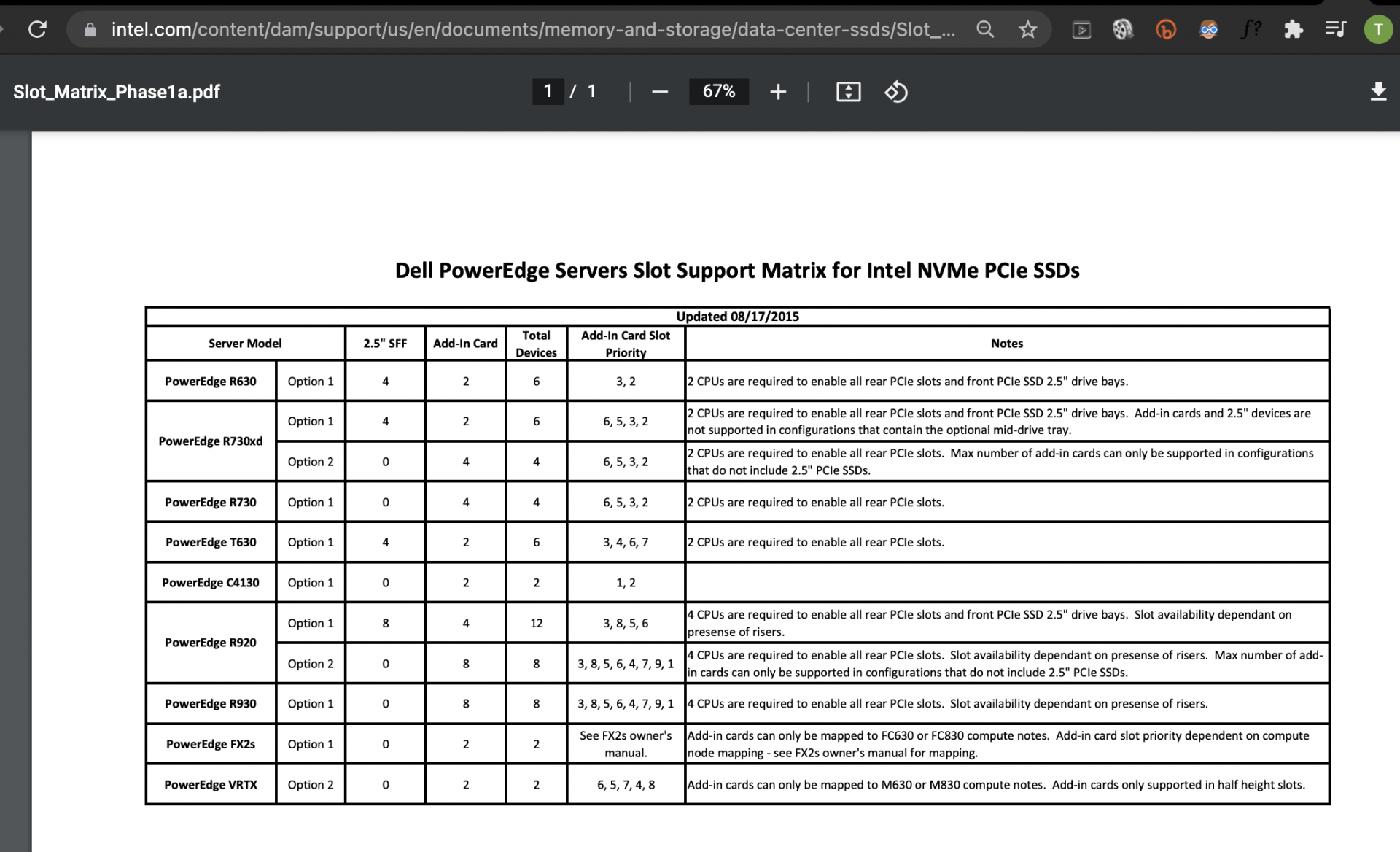

But Dell won't even sell you more than 4 NVMe drives (at their RIDICULOUSLY overpriced NVMe prices), and Intel says the same thing: that you can only use 4 NVMe drives (the image above is Intel's statement). I'm willing to accept that I'm wrong, but is that really the case? Or is it just that they don't provide NVMe connectivity to the remaining drive bays?

Ironically, after almost a YEAR of trying to sell an R930, I finally sold it, only to see it on this list in the exact role I wanted!

If not, why aren't 80 CPU PCIe lanes adequate for 12 NVMe drives?

I thought I'd need ~48 lanes (for at most 12 NVMe devices at x4 each, possibly fewer devices).
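A quick sanity check on that lane math, as a minimal Python sketch. It assumes 40 CPU lanes per E5-2600 v3 socket and a dedicated x4 link per drive with no PCIe switch in between; `lanes_needed` and `lanes_left` are just illustrative names. On paper, 12 drives fit with lanes to spare, which suggests the 4-drive limit is more likely about how the chassis routes lanes to slots and drive bays than about the raw lane total.

```python
# Rough PCIe lane budget for a dual-socket Xeon E5-2600 v3 server.
# Assumptions (illustrative, not Dell's actual wiring): 40 CPU lanes per
# socket, and every NVMe drive gets its own x4 link with no PCIe switch.
LANES_PER_SOCKET = 40
SOCKETS = 2
LANES_PER_NVME = 4


def lanes_needed(drives: int, lanes_per_drive: int = LANES_PER_NVME) -> int:
    """Lanes consumed if every drive gets a dedicated link."""
    return drives * lanes_per_drive


def lanes_left(drives: int) -> int:
    """CPU lanes remaining for NICs, HBAs, etc. after the drives."""
    total = LANES_PER_SOCKET * SOCKETS
    return total - lanes_needed(drives)


if __name__ == "__main__":
    for n in (4, 8, 12):
        print(f"{n:2d} drives -> {lanes_needed(n):2d} lanes, "
              f"{lanes_left(n):2d} left of {LANES_PER_SOCKET * SOCKETS}")
```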


| Task / Role | Devices | Notes |
|---|---|---|
| RAIDZ2 array | 8x NVMe | |
| Fusion Pool | 2x Optane (mirrored) | Small files + metadata |
| SLOG | Persistent NVDIMMs | Previously used an AIC, but to save space... |
| Add RAM | | It's everyone's advice anyway (& faster?) |
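For planning, here's a rough capacity estimate for the 8-drive RAIDZ2 vdev. This is a minimal sketch: the 2 TB drive size is a hypothetical placeholder, and it ignores ZFS overhead (RAIDZ allocation padding, metadata, slop space), so real usable space will come out somewhat lower.

```python
def raidz_usable_tb(drives: int, drive_tb: float, parity: int = 2) -> float:
    """Approximate data capacity of one RAIDZ vdev, in TB.

    Ignores ZFS overhead (allocation padding, metadata, slop space),
    so real usable space will be somewhat lower.
    """
    if drives <= parity:
        raise ValueError("need more drives than parity devices")
    return (drives - parity) * drive_tb


def raidz_efficiency(drives: int, parity: int = 2) -> float:
    """Fraction of raw capacity left for data, before ZFS overhead."""
    return (drives - parity) / drives


if __name__ == "__main__":
    # Hypothetical drive size; swap in the real NVMe capacity.
    size_tb = 2.0
    print(f"8x {size_tb} TB in RAIDZ2: ~{raidz_usable_tb(8, size_tb):.1f} TB "
          f"({raidz_efficiency(8):.0%} of raw)")
```

Swapping `parity=2` for `parity=1` or `3` gives the RAIDZ1 / RAIDZ3 equivalents for comparison.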

I intend to upgrade to a dRAID array when TrueNAS supports it, as it rebuilds much faster.

Fusion Pool (for small files + metadata): I still need to calculate how large it would need to be for my existing zpool.
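One way to start that calculation is to total up the small files in the existing data. This is a minimal sketch under loose assumptions: files at or below a cutoff (here 32 KiB, a hypothetical stand-in for whatever `special_small_blocks` value gets chosen) are treated as landing on the special vdev as single blocks. It ignores compression, block rounding, and the metadata itself, which would have to be added on top (e.g. from the existing pool's `zdb` block statistics). `small_file_bytes` is an illustrative name, and the mount path in the usage line is hypothetical.

```python
from __future__ import annotations

import os


def small_file_bytes(root: str, threshold: int = 32 * 1024) -> tuple[int, int]:
    """Count regular files at or below `threshold` bytes under `root`.

    Returns (file_count, total_bytes). Rough approximation of the data a
    special vdev would absorb: ignores compression, block rounding, and
    all metadata. Symlinks are skipped.
    """
    count = 0
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path) or not os.path.isfile(path):
                continue
            size = os.path.getsize(path)
            if size <= threshold:
                count += 1
                total += size
    return count, total


if __name__ == "__main__":
    import sys

    # Hypothetical usage: python small_files.py /mnt/tank
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    n, size = small_file_bytes(root)
    print(f"{n} files <= 32 KiB, {size / 2**30:.2f} GiB total")
```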

If nothing else, hopefully I at least got a decent deal on the R730XD (still in transit).

Hardware I have, am buying, or want (as deals come up):

Dell PowerEdge R730XD, 2P

- Dual Xeon E5-2600 v3 (upgradeable to v4)
  - INSTALLED: E5-2640 v3 (base 2.60 GHz, turbo 3.40 GHz)
  - Not bad, but if SMB performance depends on single-core performance, I can at least upgrade to a higher-clocked v3, or possibly a v4:
    - v3 options:
      - E5-2643 v3 | SR204 (6c, base 3.40 GHz, turbo 3.70 GHz) for $120 ea
      - E5-2667 v3 | SR203 (8c, base 3.20 GHz, turbo 3.60 GHz) for $130 ea
    - or v4 options:
      - E5-2643 v4 | SR2P4 (6c, base 3.40 GHz, turbo 3.70 GHz) for $280 ea
      - E5-2667 v4 | SR2P5 (8c, base 3.20 GHz, turbo 3.60 GHz) for $220 ea

- RAM (ARC) / SLOG / Fusion Pool (ANOTHER MISTAKE):
  - To reduce the number of NVMe devices, I'd hoped to use Optane persistent-memory DIMMs (the cheapest Optane per GB), but those require a Xeon Scalable (SP) / LGA 3647 platform.
  - So the next option, more expensive (but faster?), would be NVDIMMs (about $5 per GB, I think).
  - Using NVDIMMs as my SLOG would save up to 2 AICs (needed if a single 8 GB Radian RMS-200 is too little), and the RMS-200 may ultimately be slower anyway.
  - But a Fusion Pool needs its special vdev mirrored (to protect the zpool), and I'd want Optanes for it: a 905P, P4800X, or 900P, at ~380 GB each.
    - Picking up any of those models for about $1 per GB seems like a real accomplishment, especially in the smaller sizes.
  - For conventional DDR4 ECC, I plan to start with ~64 GB (in 8 GB modules) for ~$200, which would leave 16 memory slots free.

- HBA controller:
  - Supermicro retimer: AOC-SLG3-4E4T

- Networking:
  - Chelsio T520-SO-CR (SFP+)
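On whether a single 8 GB Radian RMS-200 is too little for the SLOG: a commonly quoted rule of thumb is that a SLOG only has to hold a couple of transaction groups' worth of sync writes arriving at full network speed. Here's a minimal sketch of that arithmetic, assuming OpenZFS's default 5 s `zfs_txg_timeout`, two txgs, and 10 Gbit/s per SFP+ port; `slog_size_gb` is an illustrative name. Transaction groups can also close early when dirty-data limits are hit, so treat the result as an upper-end estimate rather than a hard requirement.

```python
def slog_size_gb(link_gbit: float, ports: int = 1,
                 txg_timeout_s: float = 5.0, txgs: int = 2) -> float:
    """Rough worst-case SLOG capacity in GB (10**9 bytes).

    Assumes sync writes arrive at full line rate for `txgs` transaction-group
    intervals of `txg_timeout_s` seconds each (5 s is OpenZFS's default
    zfs_txg_timeout). Ignores protocol overhead, which lowers real throughput.
    """
    bytes_per_s = link_gbit * ports * 1e9 / 8
    return bytes_per_s * txg_timeout_s * txgs / 1e9


if __name__ == "__main__":
    rms200_gb = 8  # capacity of the single Radian RMS-200 mentioned above
    for ports in (1, 2):
        need = slog_size_gb(10, ports=ports)
        verdict = "fits" if need <= rms200_gb else "exceeds"
        print(f"{ports} x 10GbE: ~{need:.1f} GB ({verdict} {rms200_gb} GB)")
```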

FINALLY! Would you believe this took a while?