TrueNAS Apps: Tutorials

Application maintenance is independent from TrueNAS version release cycles.

App versions, features, options, and installation behavior at time of access might vary from documented tutorials and UI reference.

Installing Custom Applications

TrueNAS can install third-party apps as Docker containers, which lets users deploy apps that are not included in the catalog. Generally, any container that follows the Open Container Initiative specifications can be deployed.

To deploy a custom application, go to Apps and click Discover Apps. Click Custom App to open the Install iX App screen with a guided installation wizard. Alternatively, click the more options icon (⋮), then Install via YAML, to open the Custom App screen with an advanced YAML editor for deploying apps using Docker Compose.

Custom Docker applications typically follow Open Container specifications and deploy in TrueNAS following the Custom Application deployment process described below.

Carefully review the documentation for the app you plan to install before attempting to install a custom app. Take note of any required environment variables, optional variables you want to define, start-up commands or arguments, networking requirements, such as port numbers, and required storage configuration.

If your application requires specific directory paths, datasets, or other storage arrangements, configure them before you start the Install Custom App wizard.

You cannot save settings and exit the configuration wizard to create data storage or directories in the middle of the process. If you are unsure about any configuration settings, review the Install Custom App Screen UI reference article before creating a new container image.

To create directories in a dataset on TrueNAS before you begin installing the container, open the TrueNAS CLI and enter:

storage filesystem mkdir path="/PATH/TO/DIRECTORY"

We recommend setting up your storage, user, or other configuration requirements before beginning deployment. You should have access to information such as:

- The path to the image repository

- Any container entrypoint commands or arguments

- Container environment variables

- Network settings

- IP addresses and DNS nameservers

- Container and node port settings

- Storage volume locations

- Certificates for image security

To set up a new container image, first, determine if you want the container to use additional TrueNAS datasets. If yes, create the dataset(s) before you begin the app installation.

The custom app installation wizard provides four options for mounting app storage, described below. When deploying a custom app via YAML, refer to the Docker Storage documentation for guidance on mount options.

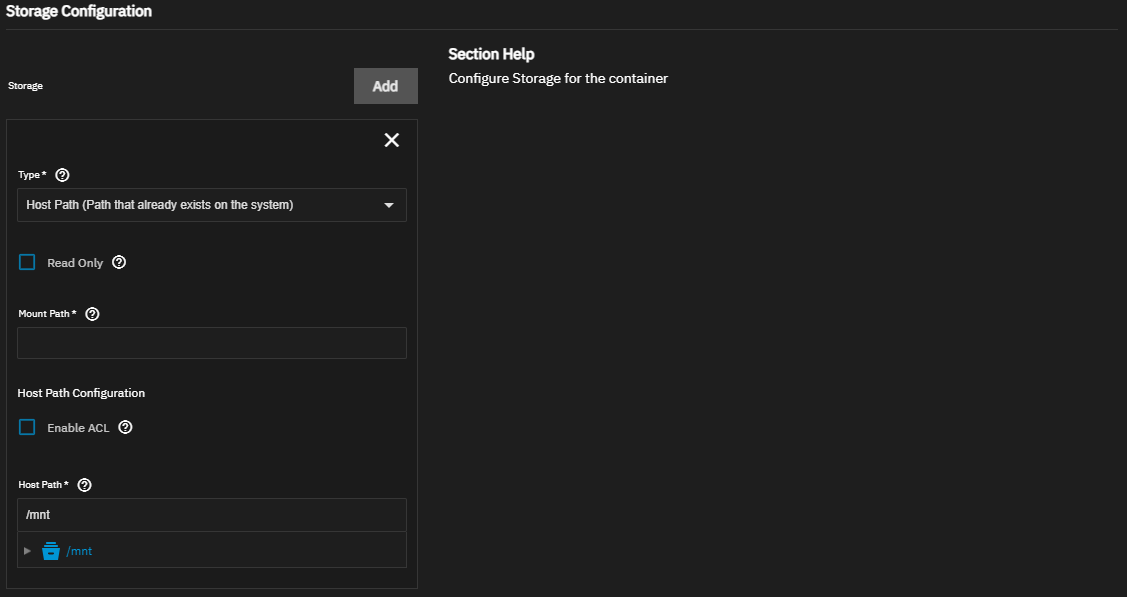

You can mount TrueNAS storage locations inside the container as host paths. A host path mounts a file or dataset into the container using Docker bind mounts. To mount a host path, define the path to the storage on the TrueNAS system and the path inside the container where the storage location appears. You can also mount the storage as read-only to prevent the container from changing any stored data.

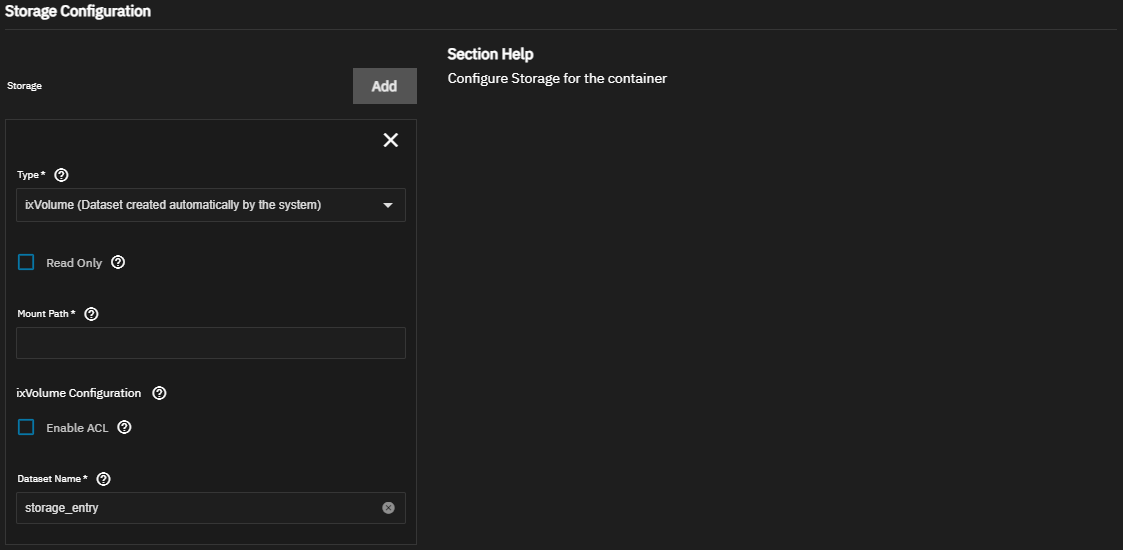
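If you deploy via YAML instead of the wizard, a host path corresponds to a Compose bind mount. The following is a minimal sketch under stated assumptions, not the configuration the wizard generates: the service name, the image, and the /mnt/tank/media dataset path are hypothetical placeholders.

```yaml
services:
  media-app:                       # hypothetical service name
    image: debian                  # placeholder image
    volumes:
      - type: bind
        source: /mnt/tank/media    # assumed dataset path on the TrueNAS host
        target: /media             # path where the storage appears in the container
        read_only: true            # the container cannot change the stored data
```

Remove read_only or set it to false if the application needs to write to the dataset.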

Use ixVolumes to allow TrueNAS to create a dataset on the apps storage pool. Like host paths, ixVolumes are mounted as Docker bind mounts and can be mounted read-only. In most use cases, ixVolume storage is better suited to app testing, while host paths are better for production deployments.

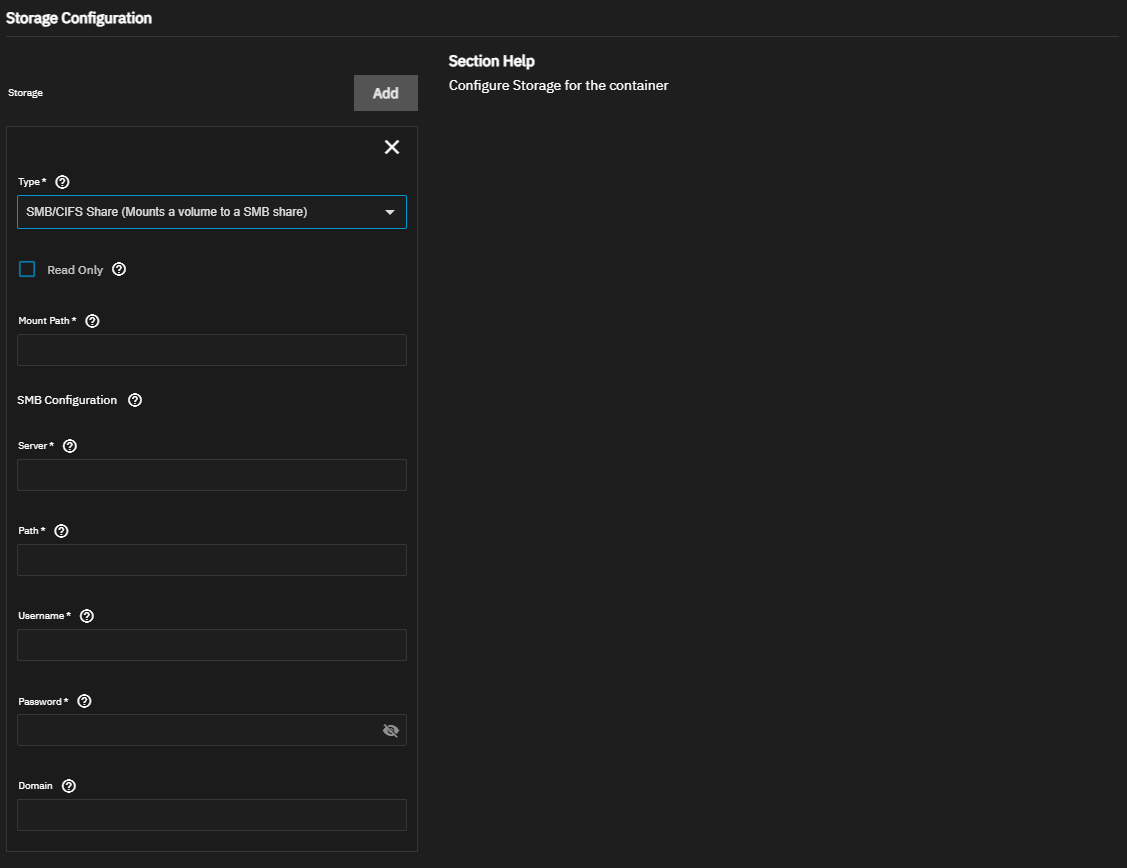

Users can mount an SMB share for storage within the container using Docker volumes. Volumes consume space from the pool chosen for application management. You need to define a path where the volume appears inside the container.

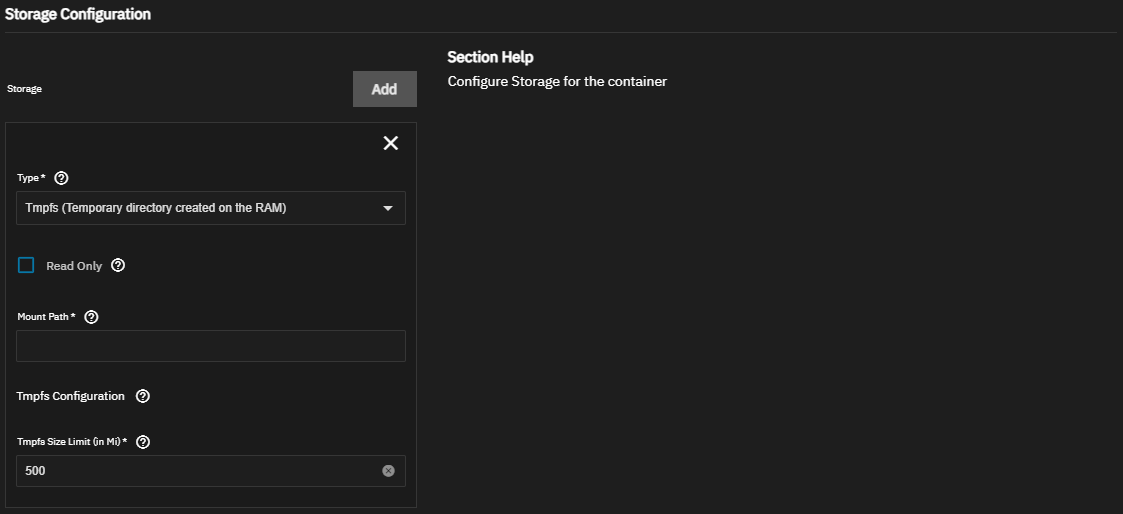

Memory-backed tmpfs storage provides a temporary, in-memory file system for storing data that only needs to persist for the lifetime of the container. It is mounted directly in RAM and automatically cleared when the container stops.

Memory-backed storage suits ephemeral data, especially when speed is a concern, such as caches, intermediate processing data that does not need persistent storage, or session data. For security purposes, you can also keep sensitive data such as temporary credentials in tmpfs instead of writing it to disk.
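For a YAML deployment, a tmpfs mount might be declared roughly as follows. This is a sketch only; the service name, image, target path, and size cap are assumed values chosen for illustration.

```yaml
services:
  cache-app:                 # hypothetical service name
    image: debian            # placeholder image
    volumes:
      - type: tmpfs
        target: /run/cache   # in-container path backed by RAM
        tmpfs:
          size: 67108864     # optional cap in bytes (64 MiB), an assumed value
```

Anything written to /run/cache in this sketch is lost when the container stops.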

To deploy a third-party application using the Install iX App wizard, go to Apps, click Discover Apps, then click Custom App.

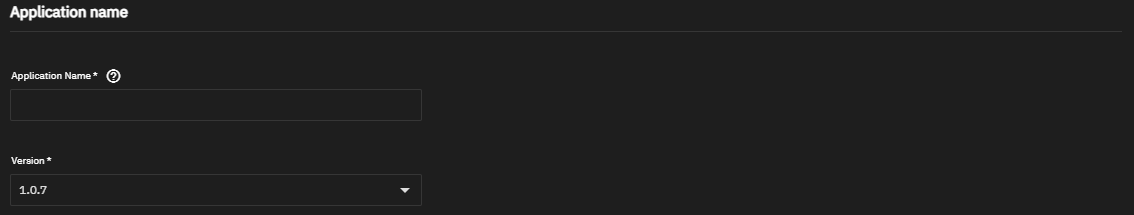

Enter a name for the container in Application Name. Accept the default number in Version.

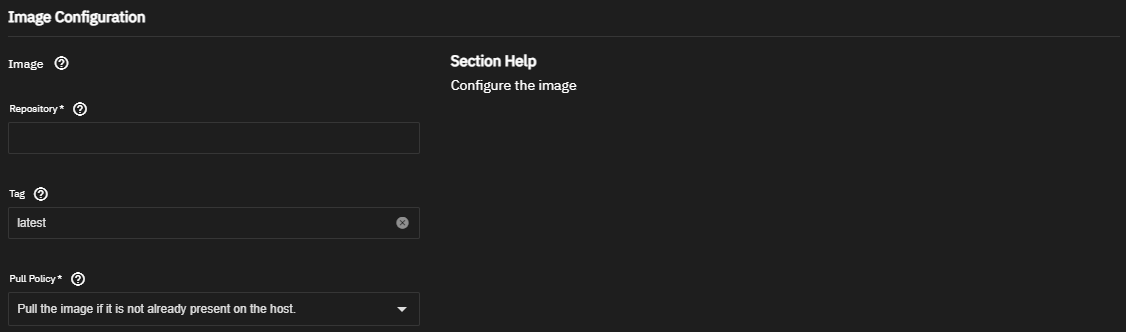

Enter the Docker Hub repository for the application you want to install in Image Configuration. Use the format maintainer/image, for example storjlabs/storagenode, or image, such as debian, for Docker Official Images.

If the application requires it, enter the correct value in Tag and select the Pull Policy to use.

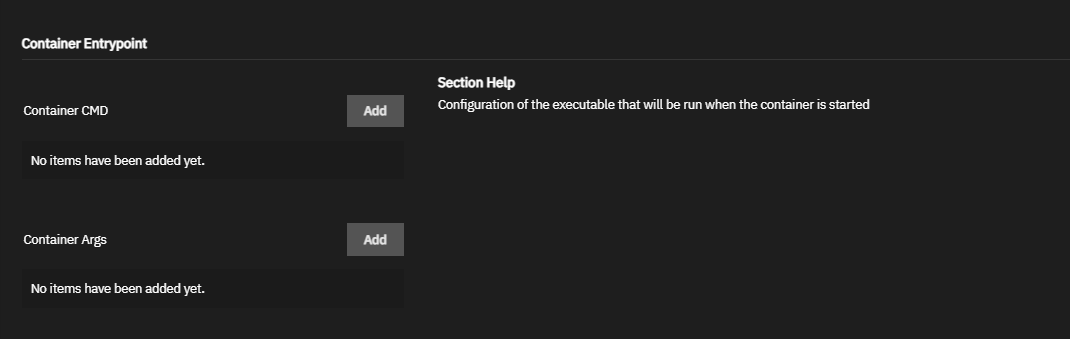
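In Compose terms, the repository, tag, and pull policy map to the image and pull_policy keys. The sketch below assumes the latest tag and the missing pull policy purely as examples; check the application documentation for the values it actually needs.

```yaml
services:
  storagenode:
    image: storjlabs/storagenode:latest   # maintainer/image:tag (tag is an assumed value)
    pull_policy: missing                  # pull only if the image is not present locally
  shell:
    image: debian                         # Docker Official Image, no maintainer prefix
    pull_policy: always                   # pull on every deployment
```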

If the application requires it, define any Container Configuration settings to specify the entrypoint, commands, timezone, environment variables, and restart policy to use for the image. These can override any existing commands stored in the image.

Click Add to the right of Entrypoint to display an entrypoint field. Each field is an item in the ENTRYPOINT list in exec format. For example, to enter ENTRYPOINT ["top", "-b"], enter top in the first Entrypoint field. Click Add again. Enter -b in the second field.

Click Add to the right of Command to display a container command field. Each field is an item in the CMD list in exec format. For example, to enter CMD ["echo", "hello world"], enter echo in the first Command field. Click Add again. Enter hello world in the second field.

Select the appropriate Timezone or begin typing the timezone to see a narrowed list of options to select from.

Click Add for Environment Variables to display a block of variable fields. Enter the environment variable name or key in Name, for example, MY_NAME. Enter the value for the variable in Value, for example, "John Doe", John\ Doe, or John. Click Add again to enter another set of environment variables.

Use the dropdown to select a Restart Policy to use for the container.

If needed, select the options to disable the health check built into the container, enable a pseudo-TTY (or pseudo-terminal) for the container, or keep the standard input (stdin) stream for the container open, for example for an interactive application that needs to remain ready to accept input.

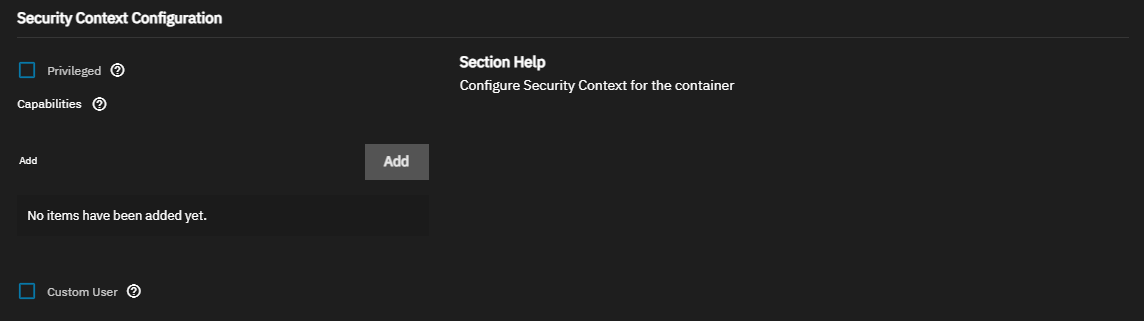
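The same container settings can be written in a Compose file. The following sketch reuses the entrypoint, command, and variable examples from the steps above; the service names, the ubuntu image, the TZ variable as a way to pass a timezone, and the chosen restart policy are assumptions for illustration.

```yaml
services:
  monitor:                          # hypothetical service name
    image: ubuntu                   # placeholder image that ships the top command
    entrypoint: ["top", "-b"]       # one list item per Entrypoint field
    environment:
      MY_NAME: "John Doe"
      TZ: "America/New_York"        # assumed timezone variable; support depends on the image
    restart: unless-stopped         # one of the standard Docker restart policies
    tty: true                       # allocate a pseudo-TTY
    stdin_open: true                # keep stdin open for interactive use
    healthcheck:
      disable: true                 # turn off the health check built into the image
  greeter:                          # hypothetical service name
    image: ubuntu
    command: ["echo", "hello world"]   # one list item per Command field
```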

Enter any required settings in Security Context Configuration.

Select Privileged to run the container in privileged mode. By default, a container cannot access any devices on the host. With Privileged enabled, the container has access to all devices on the host, which allows the container nearly all the same access as processes running on the host. Be cautious if enabling privileged mode. A more secure solution is to use Capabilities to grant limited access to system processes as needed.

To grant access to specific processes, click Add under Capabilities to display a container capability field. Enter a Linux capability to enable, for example, CHOWN. Click Add again to enter another capability. See Docker documentation for more information on Linux capabilities in Docker.

If you need to define a user to run the container, select Custom User to display the User ID and Group ID fields. Enter the numeric UID and GID of the user that runs the container. The default UID/GID is 568/568 (apps/apps).
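As a rough Compose equivalent of these security settings, the sketch below grants a single capability instead of enabling privileged mode and runs the container as the default apps user. The service name and image are placeholders.

```yaml
services:
  app:                    # hypothetical service name
    image: debian         # placeholder image
    privileged: false     # keep privileged mode off unless the app requires it
    cap_add:
      - CHOWN             # grant only the capability the app needs
    user: "568:568"       # UID:GID of the apps user and group
```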

Enter the Network Configuration settings.

Select Host Network to bind the container to the TrueNAS network. When bound to the host network, the container does not have a unique IP address, so port mapping is disabled. See the Docker documentation for more details on host networking.

Use Ports to reroute container ports that default to the same port number used by another system service or container. See Default Ports for a list of assigned ports in TrueNAS. See the Docker Container Discovery documentation for more on overlaying ports.

Click Add to display a block of port configuration fields. Click again to add additional port mappings. Enter a Container Port number. Refer to the application documentation for default port values. Enter a Host Port number that is open on the TrueNAS system. Select either TCP or UDP for the transmission Protocol.
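A port mapping of this kind can be sketched in Compose as shown below. The container port 80 and host port 8080 are assumed values; substitute the ports from the application documentation and confirm the host port is free on the TrueNAS system.

```yaml
services:
  web:                      # hypothetical service name
    image: nginx:stable     # placeholder image that listens on port 80
    ports:
      - target: 80          # Container Port
        published: "8080"   # Host Port, assumed to be open on TrueNAS
        protocol: tcp       # tcp or udp
    # Alternative to port mapping: bind the container to the host network.
    # Remove the ports section if you enable this.
    # network_mode: host
```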

Use Custom DNS Setup to enter any DNS configuration settings required for your application. By default, containers use the DNS settings from the host system. You can change the DNS policy and define separate nameservers and search domains. See the Docker DNS services documentation for more details.

Use Nameservers to add one or more IP addresses to use as DNS servers for the container. Click Add to display a Nameserver entry field and enter the IP address. Click again to add another name server.

Use Search Domains to add one or more DNS domains to search non-fully qualified host names. Click Add to display a Search Domain field and enter the domain you want to configure, for example, mydomain.com. Click again to add another search domain. See the Linux search documentation for more information.

Use DNS Options to add one or more key-value pairs to control various aspects of query behavior and DNS resolution. Click Add to display an Option field and enter a key-value pair representing a DNS option and its value, for example, ndots:2. Click again to add another option. See the Linux options documentation for more information.
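For YAML deployments, Compose exposes matching keys for nameservers, search domains, and DNS options. In this sketch the nameserver address 192.0.2.53 is a documentation-range placeholder, and the service name and image are hypothetical.

```yaml
services:
  app:                  # hypothetical service name
    image: debian       # placeholder image
    dns:
      - 192.0.2.53      # placeholder nameserver IP address
    dns_search:
      - mydomain.com    # search domain for non-fully qualified host names
    dns_opt:
      - "ndots:2"       # resolver option as a key:value pair
```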

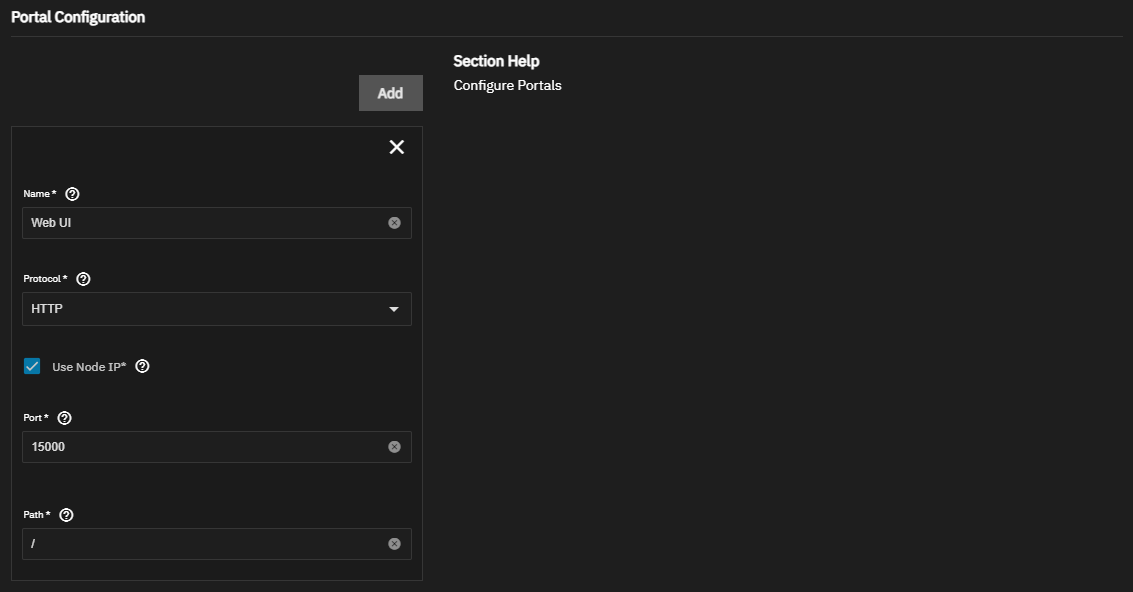

Use the Portal Configuration settings to set up a web UI portal if the application includes a GUI.

Click Add to display a block of web portal configuration settings.

Enter a Name for the portal, for example, MyAppPortal.

Select the web Protocol to use for the portal from the dropdown list. Options are HTTP or HTTPS.

Select Use Node IP to use the TrueNAS node or host IP address to access the portal, or deselect it to display the Host field. In Host, enter a host name or an internal IP address within your local network, for example, my-app-service.local.

Enter the Port number to use for portal access. Refer to the documentation provided by the application provider/developer for the port number the app uses. Check the port number against the list of Default Ports to make sure TrueNAS is not using it for some other purpose. If needed, you can map the default container port to an open host port under Network Configuration (see above).

If needed, enter a custom Path for portal access, for example, /admin. Defaults to /. The path is appended to the host IP and port, as in truenas.local:15000/admin.

Add the Storage settings. Review the image documentation for required storage volumes. See Setting up Storage above for more information.

Click Add to display a block of configuration settings for each storage mount. You can edit to add additional storage to the container later if needed.

Select the storage option you need to mount on the Type dropdown list and then configure the appropriate settings.

Accept the default values in Resources Configuration or enter new CPU and memory values. By default, this application is limited to no more than 2 CPU cores and 4096 megabytes of memory. The application might use considerably fewer system resources.

To customize the CPU limit for the container the app uses, enter the number of CPU cores as a plain integer (no letter suffix). The default is 2.

Accept the default memory limit of 4096 megabytes (4 GB) or enter a new limit in megabytes. Enter a plain integer without a measurement suffix, for example, 129, not 129M or 129MiB.
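When deploying via YAML, comparable limits can be expressed with the Compose deploy.resources.limits keys. The sketch below mirrors the wizard defaults; note that Compose expects a unit suffix on the memory value, unlike the wizard field. The service name and image are placeholders.

```yaml
services:
  app:                      # hypothetical service name
    image: debian           # placeholder image
    deploy:
      resources:
        limits:
          cpus: "2"         # at most 2 CPU cores
          memory: 4096M     # at most 4096 megabytes of memory
```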

Use GPU Configuration to allocate GPU resources if available and required for the application.

Click Install to deploy the container. If you correctly configured the app, the widget displays on the Installed Applications screen.

When complete, the container becomes active. If the container does not automatically start, click Start on the widget.

Click on the App card to reveal details.

Installing custom applications via YAML requires advanced knowledge of Docker Compose and YAML Syntax. Users should be prepared to troubleshoot and debug their own installations. The TrueNAS forums are available for community support.

TrueNAS applies basic YAML syntax validation to custom applications, but does not apply additional validation of configuration parameters before executing the file as written.

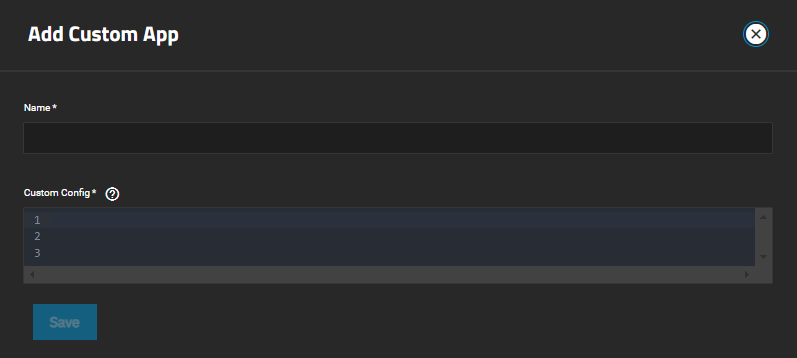

To install a third-party application using a Docker Compose YAML file, go to Apps and click Discover Apps. Click the more options icon (⋮), then select Install via YAML to open the Add Custom App screen.

Enter a name for the application using lowercase, alphanumeric characters.

Enter the Compose YAML file in Custom Config.

Begin the Compose file with a top-level element, such as name: or services:.

See the Docker Compose file reference for more information.

As Compose files can be complex and YAML relies on indentation and whitespace to define structure and hierarchy, we recommend writing the file in a stand-alone code editor before pasting the completed content into the Custom Config field.
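A minimal Compose file that could be pasted into Custom Config might look like the sketch below. The service name, image tag, and host port are assumed values; the indentation uses two spaces per level throughout.

```yaml
services:
  web:                        # hypothetical service name
    image: nginx:stable       # placeholder image
    restart: unless-stopped
    ports:
      - "8080:80"             # host port 8080 mapped to container port 80
```

With this sketch deployed, the web server would answer on port 8080 of the TrueNAS host.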

Click Save to begin deploying the app.

The following examples represent some of the capabilities of Docker Compose and how it can be used to configure applications in TrueNAS. This is not an exhaustive collection of all capabilities, nor are the files below intended as production-ready deployment templates.

Note that app storage in these examples is configured using volumes, which are managed by the Docker engine. In a production deployment, configure bind mounts to TrueNAS storage locations.
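The difference between the two approaches comes down to the volume source. In the sketch below, the named volume app_data is managed by Docker, while the commented line shows a bind mount to a hypothetical dataset path that could replace it in production.

```yaml
services:
  web:                                              # hypothetical service name
    image: nginx:stable                             # placeholder image
    volumes:
      - app_data:/usr/share/nginx/html              # named volume managed by Docker
      # - /mnt/tank/apps/web:/usr/share/nginx/html  # bind mount to an assumed dataset path
volumes:
  app_data:                                         # declares the named volume
```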

This example deploys Immich with multiple containers, including pgvecto and redis.

It also enables GPU access for an NVIDIA GPU device.

| |
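As a minimal sketch of the GPU portion only, not the full multi-container Immich deployment, a Compose service can reserve an NVIDIA device as shown below. The service name and the image tag are assumptions; confirm both against the Immich documentation.

```yaml
services:
  immich-machine-learning:        # assumed service name
    image: ghcr.io/immich-app/immich-machine-learning:release-cuda   # assumed image tag
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia      # request an NVIDIA GPU
              count: 1            # number of GPU devices to reserve
              capabilities: [gpu]
```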

This example deploys Nextcloud with multiple containers, including redis and postgres.

A Dockerfile builds the Nextcloud image locally, rather than pulling from a remote repository.

You can use an existing Dockerfile or, as in the example, use dockerfile_inline to define the build commands as an inline string within the Compose file.

| |

Alternatively, you can use dockerfile to set an existing Dockerfile stored on your TrueNAS system.

To specify the absolute path to a Dockerfile, remove this dockerfile_inline section of the build element:

| |

Replace it with the absolute path to the Dockerfile, for example:

| |

Note: an application with an absolute path to a Dockerfile is not portable without modification.
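The two build variants can be sketched as follows. The base image tag, the package installed in the inline Dockerfile, and the /mnt/tank/apps/nextcloud path are hypothetical; only one of dockerfile_inline or dockerfile can be set on a build element.

```yaml
services:
  nextcloud:
    build:
      context: .
      # Variant 1: build commands defined inline in the Compose file.
      dockerfile_inline: |
        FROM nextcloud:stable
        RUN apt-get update \
            && apt-get install -y --no-install-recommends ffmpeg \
            && rm -rf /var/lib/apt/lists/*
      # Variant 2: remove dockerfile_inline above and point to a stored Dockerfile.
      # dockerfile: /mnt/tank/apps/nextcloud/Dockerfile
```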

These examples deploy two applications, Plex and Tautulli, as part of the same custom Docker network. Grouping Docker applications offers advantages for organizing, securing, and optimizing communication between containers that interact. You can use Docker networks to isolate network segments, simplify communication between apps, configure networking properties, and more.

Plex

| |

Tautulli

| |
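One way to share a network between two separately installed apps is to create it in the first Compose file and reference it as external in the second. The sketch below shows only the networking portion of each app; the network name media_net is hypothetical, and the image names are assumptions to verify against each project.

For the Plex app, which creates the network:

```yaml
services:
  plex:
    image: plexinc/pms-docker          # assumed image name
    networks:
      - media_net
networks:
  media_net:
    name: media_net                    # fixed name so other apps can reference it
```

For the Tautulli app, which joins the existing network:

```yaml
services:
  tautulli:
    image: ghcr.io/tautulli/tautulli   # assumed image name
    networks:
      - media_net
networks:
  media_net:
    external: true                     # created by the Plex app, not by this file
```

In this arrangement the app that creates the network needs to be deployed first.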

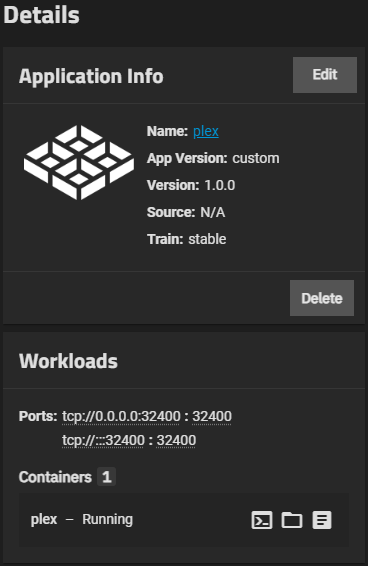

Installed custom applications show on the Installed Applications screen. Many of the management options available for catalog applications are also available for custom apps.

TrueNAS monitors upstream images and alerts when an updated version is available. Update custom applications using the same Upgrading Apps procedure as catalog applications.

Custom applications installed via YAML do not include the Web Portal button on the Application Info widget. To access the web UI for a custom app, navigate to the port on the TrueNAS system, for example, hostname.domain:8080.

Click Edit to edit and redeploy the application.

Click Delete to remove the application. See Deleting Apps for more information.

The Workloads widget shows ports and container information. Each container includes buttons to access a container shell, view volume mounts, and view logs.

Click Shell and enter an option in the Choose Shell Details window to access a container shell.

Click the folder icon to view configured storage mounts for the container.

Click Logs to open the Container Logs window. Select options in Logs Details and click Connect to view logs for the container.