TrueNAS Apps: Tutorials

Application maintenance is independent from TrueNAS version release cycles.

App versions, features, options, and installation behavior at time of access might vary from documented tutorials and UI reference.

Setting Up MinIO Clustering


This article applies to the public release of the TrueNAS S3 MinIO stable train application configured in a distributed mode cluster.

TrueNAS 23.10 and later allow users to create a MinIO S3 distributed instance to scale out TrueNAS and handle individual node failures. A node is a single TrueNAS storage system in a cluster.

The stable train version of MinIO supports distributed mode. Distributed mode allows pooling multiple drives, even on different systems, into a single object storage server. The rest of this article describes how to configure a distributed mode cluster in TrueNAS using MinIO.

The enterprise train version of MinIO provides two options for clustering, Single Node Multi-Disk (SNMD) and Multi-Node Multi-Disk (MNMD) configurations. See MinIO Enterprise for more information.

The examples below use four TrueNAS systems to create a distributed cluster. For more information on MinIO distributed setups, refer to the MinIO documentation.
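When planning a four-node layout, it can help to list every node address up front. MinIO's documentation writes sequential hosts with a `{x...y}` range, and the sketch below expands such a pattern into explicit URLs. The host name `minio{1...4}.example.com` and port are placeholder assumptions, and `expand_range` is a hypothetical helper written for this illustration, not part of MinIO or TrueNAS.

```python
import re

# Matches a numeric range written as {start...end}, for example {1...4}.
_RANGE = re.compile(r"\{(\d+)\.\.\.(\d+)\}")


def expand_range(pattern: str) -> list[str]:
    """Expand every {start...end} range in pattern into explicit strings."""
    match = _RANGE.search(pattern)
    if match is None:
        return [pattern]
    start, end = int(match.group(1)), int(match.group(2))
    expanded = []
    for number in range(start, end + 1):
        # Substitute one value, then recurse in case more ranges remain.
        candidate = pattern[:match.start()] + str(number) + pattern[match.end():]
        expanded.extend(expand_range(candidate))
    return expanded


if __name__ == "__main__":
    # Placeholder host names; substitute the addresses of your own nodes.
    for url in expand_range("https://minio{1...4}.example.com:9000/data"):
        print(url)
```

Writing the list out this way makes it easier to confirm that each of the four systems has a resolvable address before starting the installation.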

Before you install the stable version of the MinIO app:

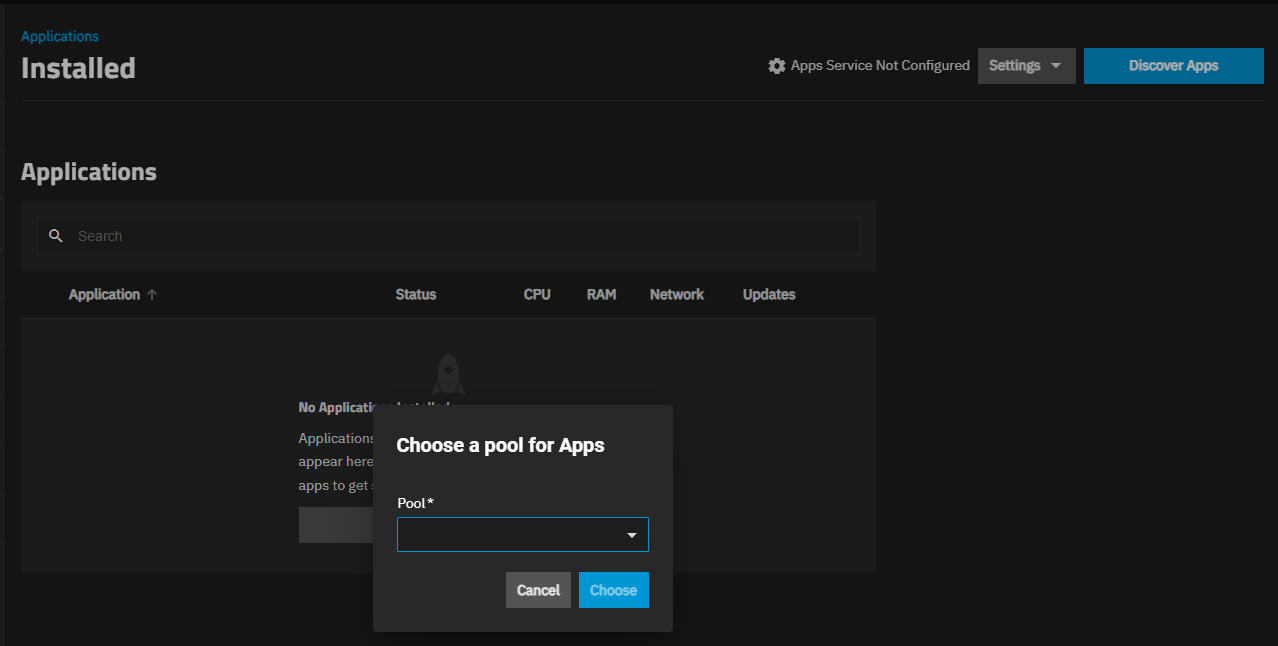

Set a pool for applications to use if not already assigned.

You can use either an existing pool or create a new one. TrueNAS creates the hidden ix-apps dataset in the pool set as the application pool. TrueNAS manages this dataset internally, so you cannot use it as the parent when you create the required application datasets.

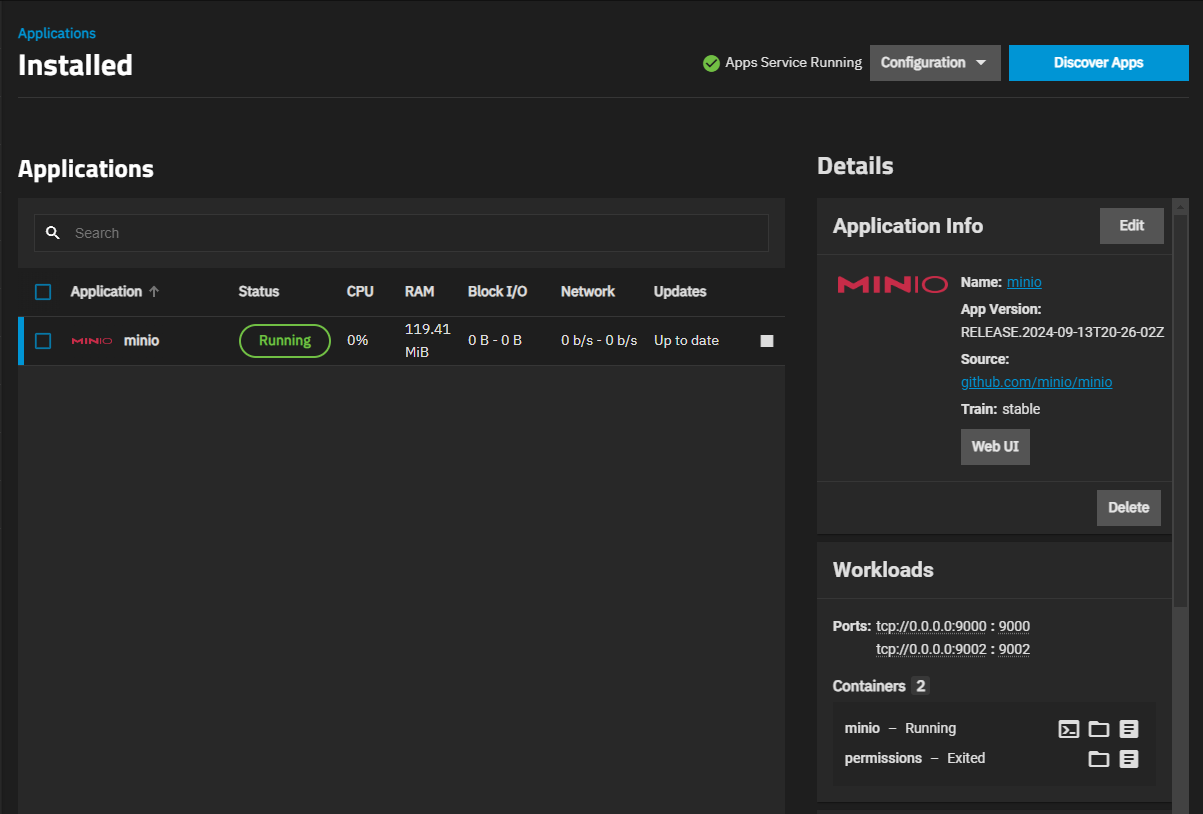

After setting the pool, the Installed Applications screen displays Apps Service Running on the top screen banner.

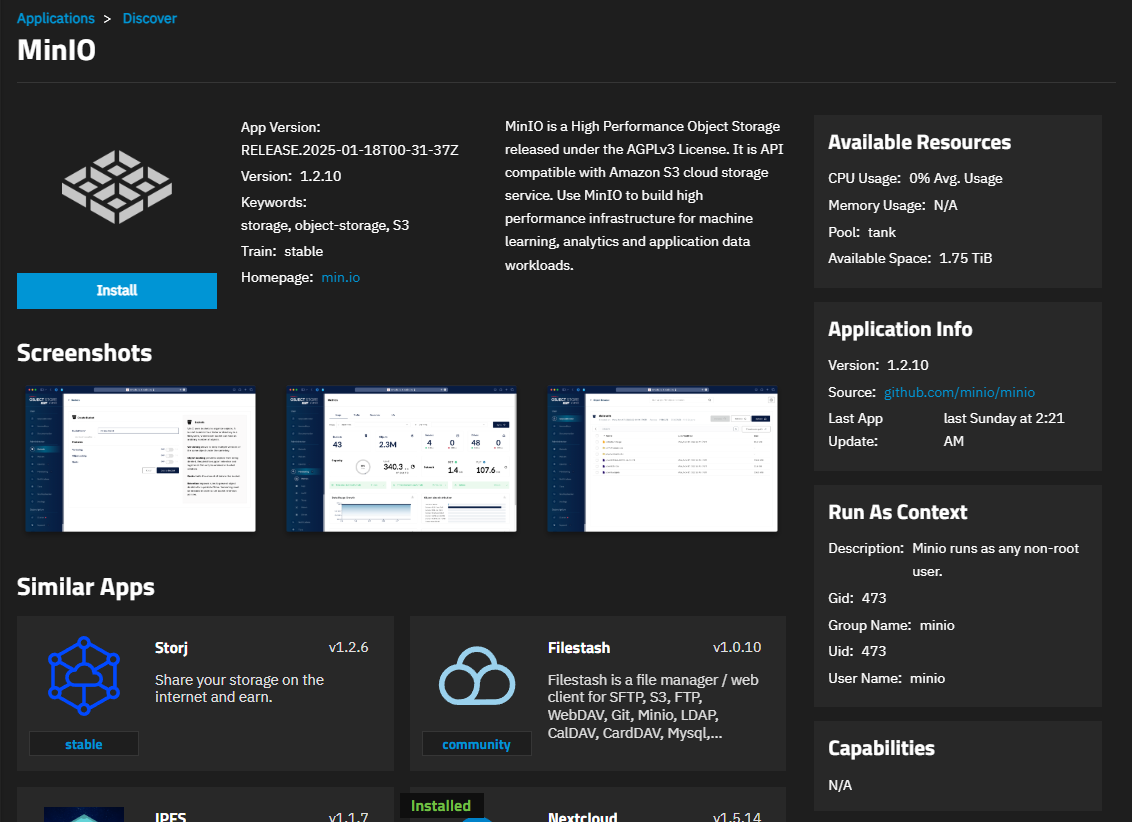

Locate the run-as user for the app.

Take note of the run-as user for the app, shown on the app information screen in the Run As Context widget and in the Application Metadata widget on the Installed applications screen after the app fully deploys. The run-as user(s) get added to the ACL permissions for each dataset used as a host path storage volume.

(Optional) Create datasets for the storage volumes for the app.

Do not create encrypted datasets for apps if not required! Using an encrypted dataset can result in undesired behaviors after upgrading TrueNAS when pools and datasets are locked. When datasets for the containers are locked, the container does not mount, and the apps do not start. To resolve issues, unlock the dataset(s) by entering the passphrase/key to allow datasets to mount and apps to start.

You can create required datasets before or after launching the installation wizard. The install wizard includes the Create Dataset option for host path storage volumes, but if you are organizing required datasets under a parent, you must create that parent dataset before launching the app installation wizard.

Go to Datasets and select the pool or dataset where you want to place the dataset(s) for the app. For example, /tank/apps/appName.

If your system has active sharing configurations (SMB, NFS, iSCSI), disable them in System > Services before adding and configuring the MinIO application. Start any sharing services after MinIO completes the installation and starts.

Create a self-signed certificate for the app (if required).

Begin on the first node (system) in your cluster.

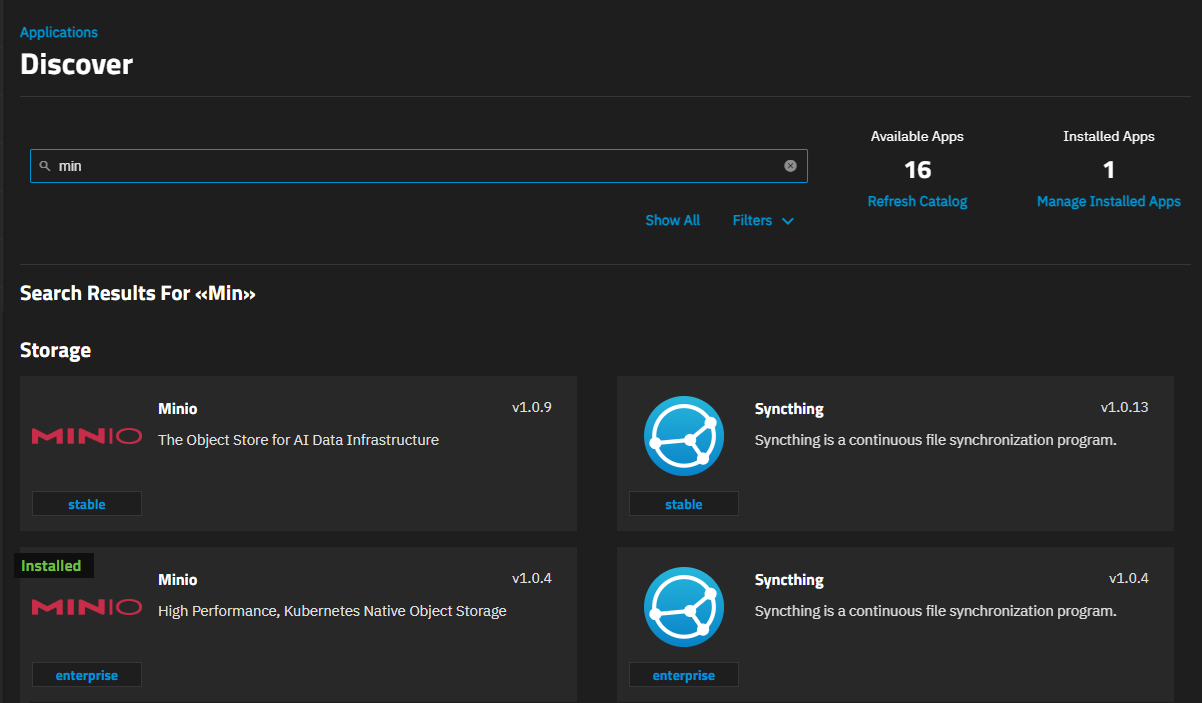

Go to Apps, click on Discover Apps, and locate the app widget by either scrolling down to it or typing the name into the search field. For example, to locate the MinIO app widget, begin typing minIO into the search field to show app widgets matching the search input.

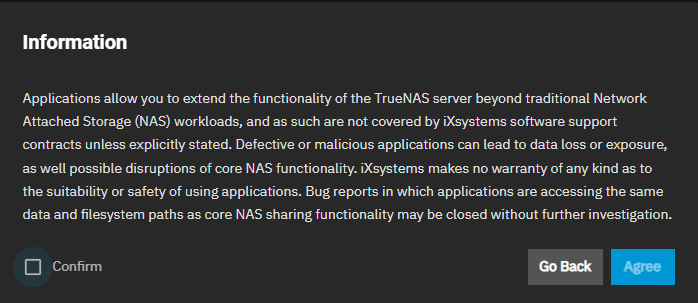

If this is the first application installed, TrueNAS displays a dialog about configuring apps.

If this is not the first time installing apps, the dialog does not show. Click on the widget to open the app information screen.

Click Install to open the app installation wizard.

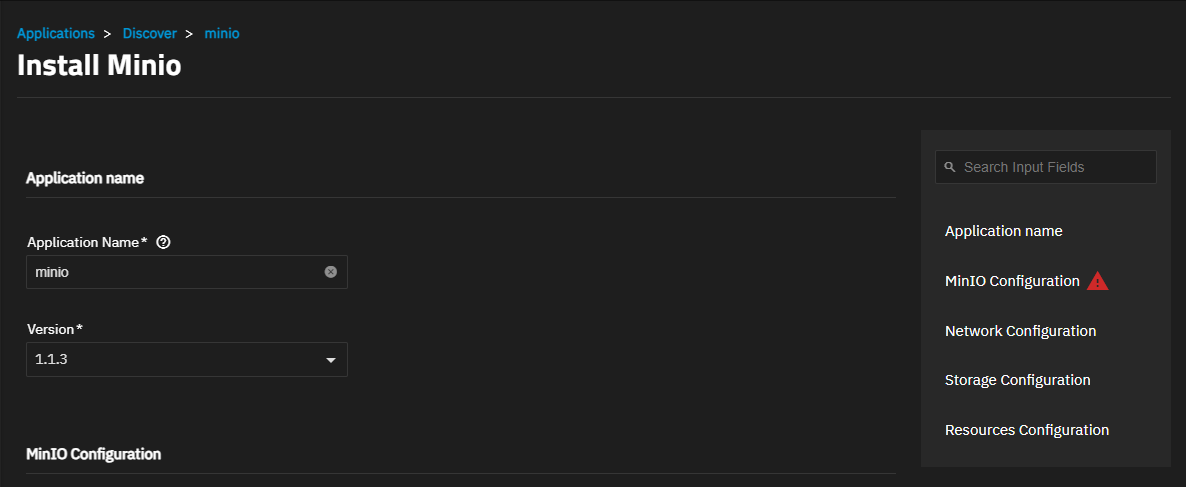

Application configuration settings are grouped into several sections, each explained below in Understanding App Installation Wizard Settings. To find specific fields, begin typing in the Search Input Fields search field to show the section or field, scroll down to a particular section, or click on the section heading in the list of sections on the upper-right of the wizard screen.

Accept the default value or enter a name in Application Name. In most cases, use the default name, but if adding a second deployment of the application, you must change this name.

Accept the default version number in Version. When a new version becomes available, the application shows an update badge and the Application Info widget on the Installed applications screen shows the Update button.

You can have multiple deployments of the same app (for example, two or more from the stable or enterprise trains, or a combination of the stable and enterprise trains).

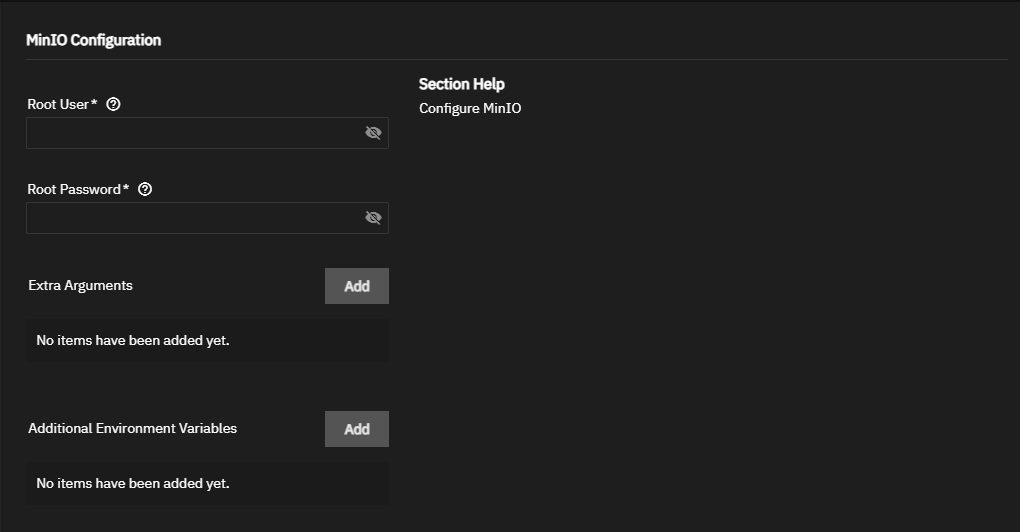

Next, enter the MinIO Configuration settings.

The MinIO wizard defaults include all the arguments you need to deploy a container for the application.

Enter a name in Root User to use as the MinIO access key. Enter a name of five to 20 characters in length, for example admin or admin1. Next enter the Root Password to use as the MinIO secret key. Enter eight to 40 random characters, for example MySecr3tPa$$w0d4Min10.

Refer to MinIO User Management for more information.

Keep all passwords and credentials secured and backed up.
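Because every node must use the same credentials, it can save time to check the length limits before typing the values into each wizard. The following is a minimal sketch that checks only the lengths stated above (five to 20 characters for Root User, eight to 40 for Root Password). The function name `check_minio_credentials` is hypothetical, and the sketch does not reproduce any other validation the wizard or MinIO might apply.

```python
def check_minio_credentials(root_user: str, root_password: str) -> list[str]:
    """Return a list of problems; an empty list means both lengths are in range."""
    problems = []
    if not 5 <= len(root_user) <= 20:
        problems.append(
            f"Root User must be 5 to 20 characters, got {len(root_user)}"
        )
    if not 8 <= len(root_password) <= 40:
        problems.append(
            f"Root Password must be 8 to 40 characters, got {len(root_password)}"
        )
    return problems


if __name__ == "__main__":
    # Example values from this article; do not reuse them in production.
    for problem in check_minio_credentials("admin1", "MySecr3tPa$$w0d4Min10"):
        print(problem)
```

An empty result means the values fit the documented limits. Avoid storing real credentials in scripts or shell history.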

MinIO containers use server port 9000. The MinIO Console communicates using port 9001.

You can configure the API and UI access node ports and the MinIO domain name if you have TLS configured for MinIO.
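After the app deploys, one quick way to confirm that both ports answer is a plain TCP connection test from another machine. This is a sketch using only the Python standard library. It assumes the default ports 9000 and 9001 described above, and the host name in the usage example is a placeholder. A successful connection shows only that something is listening, not that MinIO is healthy.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def check_minio_ports(
    host: str, api_port: int = 9000, console_port: int = 9001
) -> dict[str, bool]:
    """Check the MinIO server (API) port and the MinIO Console port on one node."""
    return {
        "api": port_is_open(host, api_port),
        "console": port_is_open(host, console_port),
    }


if __name__ == "__main__":
    # Placeholder host name; replace with the address of a TrueNAS node.
    print(check_minio_ports("nas1.example.com"))
```

If you changed the API or UI node ports in the wizard, pass those values instead of the defaults.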

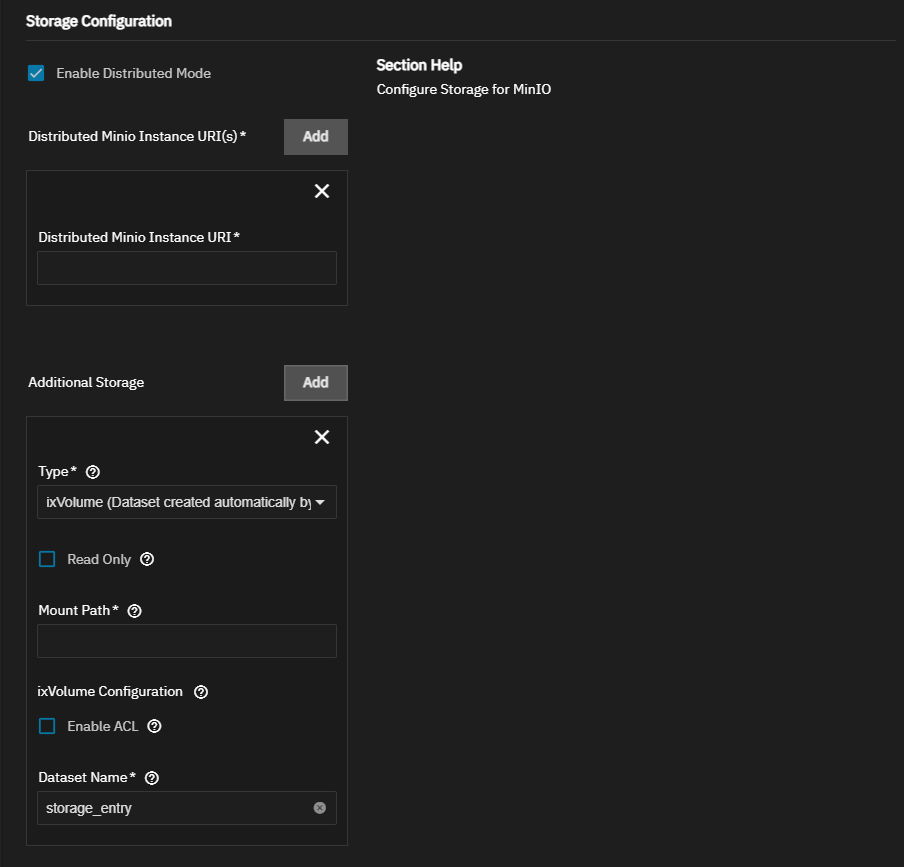

Add your Storage Configuration settings.

For a distributed cluster, ensure the values are identical between server nodes and that they have the same credentials.
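One way to catch a mismatch before installing is to write down the values planned for each node and compare them. The sketch below is illustrative only: the setting names (`root_user`, `mount_path`, and so on) and node names are hypothetical labels chosen for this example, not keys read from TrueNAS or MinIO.

```python
def find_mismatched_settings(
    nodes: dict[str, dict[str, str]]
) -> dict[str, dict[str, str]]:
    """Return each setting whose value differs between nodes, with per-node values."""
    all_keys: set[str] = set()
    for settings in nodes.values():
        all_keys.update(settings)

    mismatches = {}
    for key in sorted(all_keys):
        # A setting missing on a node counts as a mismatch.
        values = {name: cfg.get(key, "<missing>") for name, cfg in nodes.items()}
        if len(set(values.values())) > 1:
            mismatches[key] = values
    return mismatches


if __name__ == "__main__":
    # Hypothetical planned values for two of the four nodes.
    planned = {
        "node1": {"root_user": "admin1", "api_port": "9000", "mount_path": "/data"},
        "node2": {"root_user": "admin1", "api_port": "9000", "mount_path": "/data1"},
    }
    for setting, values in find_mismatched_settings(planned).items():
        print(setting, values)
```

An empty result means every listed setting matches across the nodes. The example omits the password on purpose, since printing secrets to a terminal is best avoided.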

MinIO uses one dataset, one ixVolume, and two mount paths. Leave the MinIO Export Storage (Data) set to the defaults, with Type set to ixVolume and the mount path /export. You can create a dataset for this and use the host path option, but it is not necessary for this storage volume.

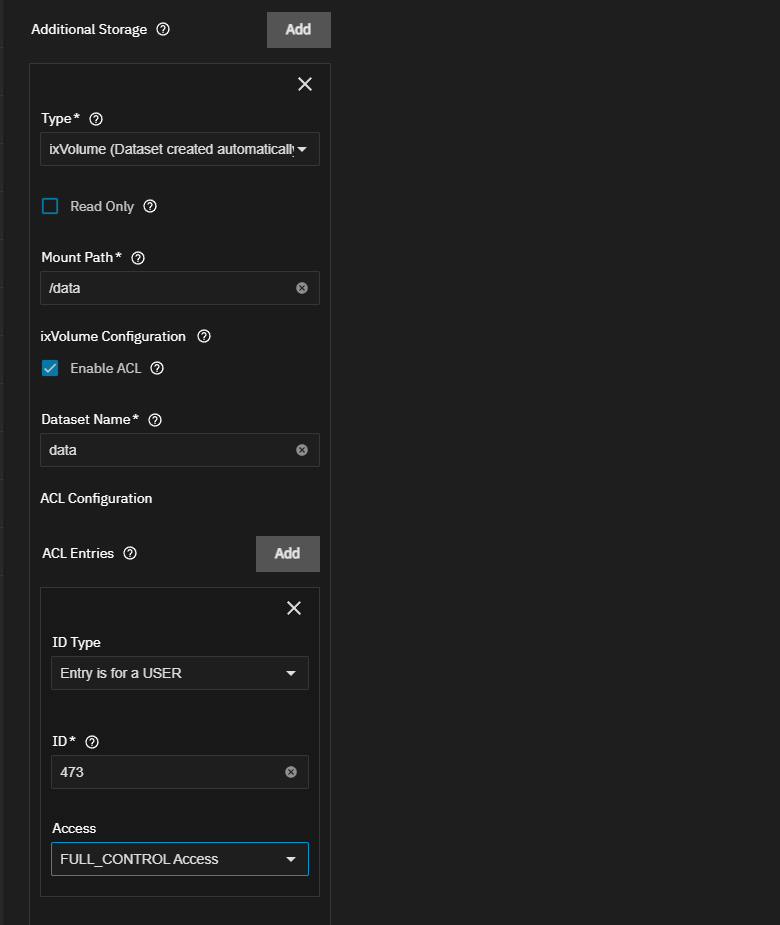

Add the storage volume for MinIO data storage. Click Add to the right of Additional Storage. Set Type to Host Path (Path that already exists on the system). Enter /data in Mount Path, select Enable ACL, then enter data in Dataset Name.

Click Add to the right of ACL Entries to show the permissions fields. Set Id Type to Entry is for a USER, enter 473 in ID, and give it full permissions. Repeat for the /data storage volume.

Accept the default values in Resources Configuration.

Click Install.

The Installed applications screen opens showing the application in the Deploying state before it changes to Running when the application is ready to use.

Click Web Portal to open the MinIO sign-in screen.

After completing the first node, begin configuring the remaining system nodes in the cluster (including datasets and directories).

After installing MinIO on all systems (nodes) in the cluster, start the MinIO applications.
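Once the applications are started, each node can be polled to confirm it responds. The sketch below queries MinIO's liveness endpoint, `/minio/health/live`, on every node using the Python standard library. The node URLs are placeholders, `cluster_status` is a hypothetical helper, and the sketch assumes the API is reachable on port 9000 with a certificate the client trusts. With a self-signed certificate, the HTTPS request fails verification and the node is reported as not live.

```python
import http.client
import urllib.request


def health_url(base_url: str) -> str:
    """Build the liveness check URL for one node's API address."""
    return base_url.rstrip("/") + "/minio/health/live"


def node_is_live(base_url: str, timeout: float = 5.0) -> bool:
    """Return True if the node answers the liveness check with HTTP 200."""
    try:
        with urllib.request.urlopen(health_url(base_url), timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # Unreachable host, refused connection, timeout, TLS or HTTP error.
        return False


def cluster_status(node_urls, probe=node_is_live) -> dict[str, bool]:
    """Probe every node and map each URL to its liveness result."""
    return {url: probe(url) for url in node_urls}


if __name__ == "__main__":
    # Placeholder addresses for the four nodes in this example cluster.
    nodes = [f"http://nas{n}.example.com:9000" for n in range(1, 5)]
    for url, live in cluster_status(nodes).items():
        print(url, "live" if live else "not responding")
```

The `probe` parameter exists so the check can be swapped out, for example with a stub during testing or with a client that trusts your own certificate authority.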