TrueNAS SCALE Nightly Development Documentation

This content follows experimental early release software.

Setting Up MinIO Clustering


Last Modified 2024-03-15 13:07 EDT

This article applies to the public release of the S3 MinIO charts application in the TRUENAS catalog.

Community applications are created and maintained by members of the TrueNAS community. Similarly, community members actively maintain application articles in this section.

On TrueNAS SCALE 23.10 and later, users can create a MinIO S3 distributed instance to scale out and handle individual node failures. A node is a single TrueNAS storage system in a cluster.

The examples below use four TrueNAS systems to create a distributed cluster. For more information on MinIO distributed setups, refer to the MinIO documentation.

Before configuring MinIO, create a dataset and shared directory for the persistent MinIO data.

Go to Datasets and select the pool or dataset where you want to place the MinIO dataset, for example /tank/apps/minio or /tank/minio. You can use either an existing pool or create a new one.

After creating the dataset, create the directory where MinIO stores information the application uses. There are two ways to do this:

In the TrueNAS SCALE CLI, use storage filesystem mkdir path="/PATH/TO/minio/data" to create the /data directory in the MinIO dataset.

In the web UI, create a share (e.g. an SMB share), then log into that share and create the directory.

MinIO uses /data but allows users to replace this with the directory of their choice.

For a distributed configuration, repeat this on all system nodes in advance.
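Because the same directory has to exist on every node, it can help to generate the CLI command once and reuse it. The following is a minimal sketch that only builds the command string shown above; it does not run anything on TrueNAS. The function name mkdir_command and the dataset location /mnt/tank/minio are assumptions for illustration.

```python
from pathlib import PurePosixPath


def mkdir_command(dataset_path: str, data_dir: str = "data") -> str:
    """Build the TrueNAS SCALE CLI command that creates the MinIO data directory."""
    # Strip leading slashes so "/data" and "data" both join under the dataset.
    target = PurePosixPath(dataset_path) / data_dir.lstrip("/")
    if not target.is_absolute():
        raise ValueError(f"dataset path must be absolute: {dataset_path!r}")
    return f'storage filesystem mkdir path="{target}"'


# Hypothetical dataset location; enter the printed command on every node.
print(mkdir_command("/mnt/tank/minio"))
```

Pass a different data_dir if you replace /data with a directory of your choice.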

Take note of the system (node) IP addresses or host names and have them ready for configuration. Also, have your S3 user name and password ready for later.

Configure the MinIO application using the full version MinIO charts widget. Go to Apps, click Discover Apps, then locate the charts version of the MinIO widget.

We recommend using the Install option on the MinIO application widget.

If your system has sharing (SMB, NFS, iSCSI) configured, disable the share service before adding and configuring a new MinIO deployment. After completing the installation and starting MinIO, enable the share service.

If the dataset for the MinIO share has the same path as the MinIO application, disable host path validation before starting MinIO. To use host path validation, set up a new dataset for the application with a completely different path. For example, for the share /pool/shares/minio and for the application /pool/apps/minio.
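A quick way to spot a path conflict before installing is to compare the share path with the application path. This is a minimal sketch, not the check TrueNAS itself performs: the article only describes the case where both paths are the same, so treating a nested path as a conflict is an assumption, and paths_overlap is a hypothetical helper name.

```python
from pathlib import PurePosixPath


def paths_overlap(share_path: str, app_path: str) -> bool:
    """Return True if the two paths are identical or one contains the other."""
    share = PurePosixPath(share_path)
    app = PurePosixPath(app_path)
    # Assumption: a path nested inside the other is treated as a conflict too.
    return share == app or share in app.parents or app in share.parents


# The example paths from this article do not overlap.
print(paths_overlap("/pool/shares/minio", "/pool/apps/minio"))
```

If the function returns True for your paths, either disable host path validation or move the application to a separate dataset as described above.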

Begin on the first node (system) in your cluster.

To install the S3 MinIO (community app), go to Apps, click on Discover Apps, then either begin typing MinIO into the search field or scroll down to locate the charts version of the MinIO widget.

Click on the widget to open the MinIO application information screen.

Click Install to open the Install MinIO screen.

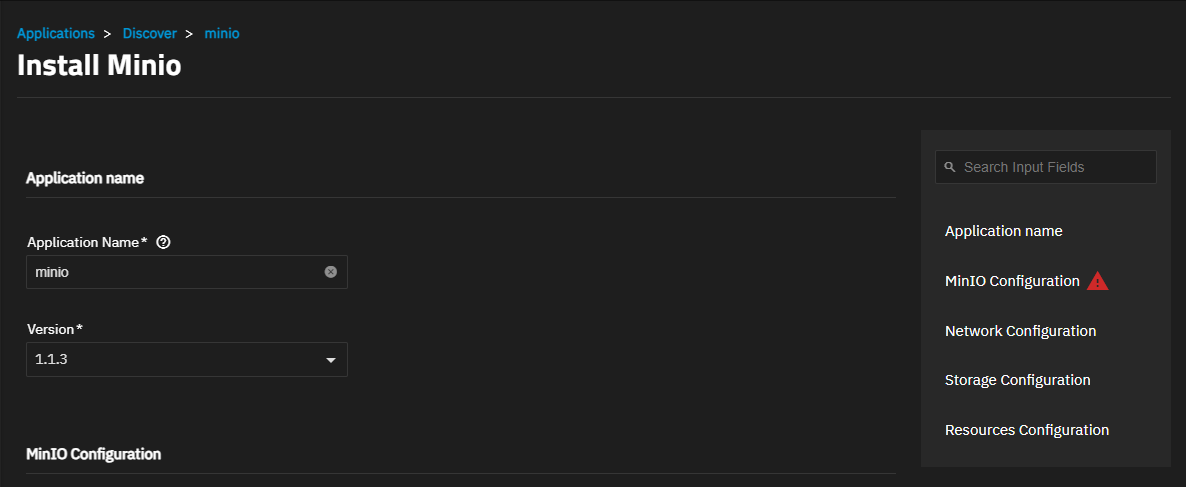

Accept the default values for Application Name and Version. The best practice is to keep the default Create new pods and then kill old ones in the MinIO update strategy. This implements a rolling upgrade strategy.

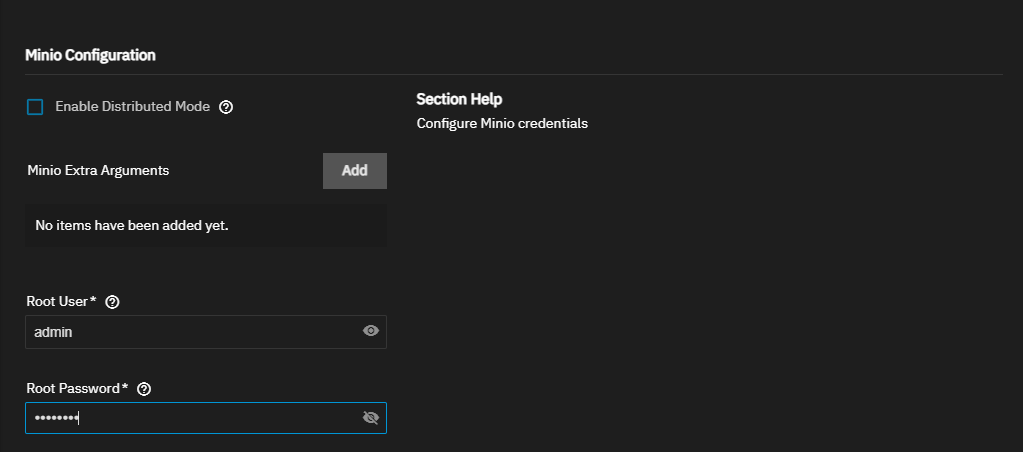

Next, enter the MinIO Configuration settings.

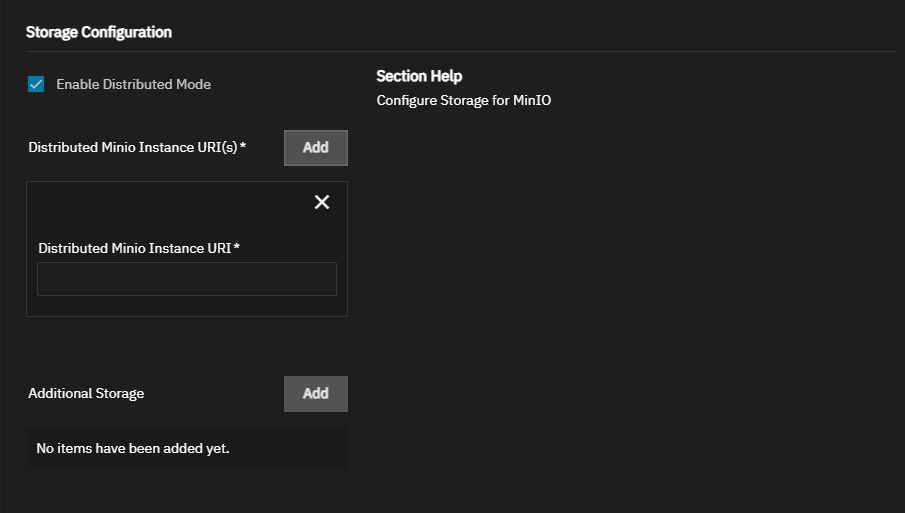

Select Enable Distributed Mode when setting up a distributed cluster of SCALE systems.

In distributed mode, MinIO pools multiple drives or TrueNAS SCALE systems (even if they are different machines) into a single object storage server. Because MinIO distributes the drives across several nodes, this gives better data protection if one or more nodes fail. For more information, see the Distributed MinIO Quickstart Guide.

To create a distributed cluster, click Add to show a Distributed MinIO Instance URI(s) field for each TrueNAS system (node) to include in the cluster, then enter the IP address or host name of each node. Use the same order across all the nodes.
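Since the order must match on every node, one option is to generate the list once and paste the same values into each system. The sketch below assumes a URI of the form scheme://host:port/path, using server port 9000 and the /data directory; this format, the instance_uris helper, and the 192.0.2.x addresses are illustrative assumptions, so check the values against what your install screen expects.

```python
def instance_uris(hosts, port=9000, data_path="/data", scheme="http"):
    """Build one URI per node, keeping the order of the hosts list."""
    seen = set()
    uris = []
    for host in hosts:
        if host in seen:
            raise ValueError(f"duplicate node address: {host}")
        seen.add(host)
        uris.append(f"{scheme}://{host}:{port}{data_path}")
    return uris


# Hypothetical addresses for a four-node cluster (documentation IP range).
nodes = ["192.0.2.11", "192.0.2.12", "192.0.2.13", "192.0.2.14"]
for uri in instance_uris(nodes):
    print(uri)
```

Keeping the node list in one place and reusing it for every system avoids accidental reordering between nodes.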

The MinIO application defaults include all the arguments you need to deploy a container for the application.

Enter a name in Root User to use as the MinIO access key. Enter a name of five to 20 characters in length, for example admin or admin1. Next enter the Root Password to use as the MinIO secret key. Enter eight to 40 random characters, for example MySecr3tPa$$w0d4Min10.
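The length limits above are easy to check before typing the values into each node. This is a minimal sketch that tests only the lengths stated in this article (five to 20 characters for the user, eight to 40 for the password); validate_credentials is a hypothetical helper and does not reflect any additional rules MinIO may enforce.

```python
def validate_credentials(root_user: str, root_password: str) -> list[str]:
    """Return a list of problems; an empty list means both lengths are in range."""
    problems = []
    if not 5 <= len(root_user) <= 20:
        problems.append("Root User must be 5 to 20 characters long")
    if not 8 <= len(root_password) <= 40:
        problems.append("Root Password must be 8 to 40 characters long")
    return problems


# The example values from this article pass both checks.
print(validate_credentials("admin", "MySecr3tPa$$w0d4Min10"))
```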

Refer to MinIO User Management for more information.

Keep all passwords and credentials secured and backed up.

For a distributed cluster, ensure the configuration values, including the credentials, are identical on every server node.
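If you keep a record of what you entered on each node, a short comparison can catch a mismatch before you start the applications. The sketch below assumes you have written the settings down yourself as dictionaries; the keys uris, root_user, and root_password and the node names are hypothetical and are not read from TrueNAS.

```python
def find_mismatches(node_settings: dict[str, dict]) -> list[str]:
    """Compare every node's recorded settings against the first node."""
    names = list(node_settings)
    if not names:
        return []
    reference_name = names[0]
    reference = node_settings[reference_name]
    mismatches = []
    for name in names[1:]:
        # List comparison is order-sensitive, so a reordered URI list is reported.
        for key in ("uris", "root_user", "root_password"):
            if node_settings[name].get(key) != reference.get(key):
                mismatches.append(f"{name}: {key} differs from {reference_name}")
    return mismatches


# Hypothetical records for two nodes; node2 lists the URIs in a different order.
uris = ["http://192.0.2.11:9000/data", "http://192.0.2.12:9000/data"]
settings = {
    "node1": {"uris": uris, "root_user": "admin", "root_password": "example-secret"},
    "node2": {"uris": uris[::-1], "root_user": "admin", "root_password": "example-secret"},
}
print(find_mismatches(settings))
```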

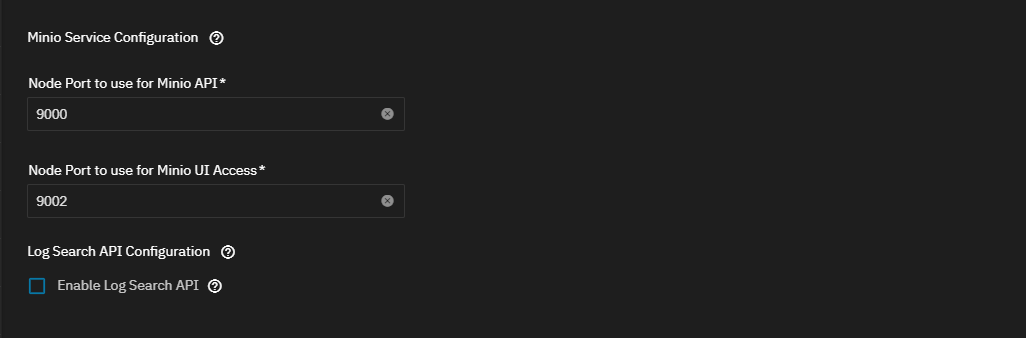

MinIO containers use server port 9000. The MinIO Console communicates using port 9001.
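Once the applications are running, a simple TCP probe can confirm that both ports answer on each node. This is a minimal sketch using only the Python standard library; port_is_open is a hypothetical helper, and the addresses in the commented usage are placeholders. A successful connection shows only that something is listening, not that MinIO is healthy.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Example usage with placeholder addresses (uncomment and adjust for your nodes):
# for node in ["192.0.2.11", "192.0.2.12"]:
#     for port in (9000, 9001):
#         print(node, port, port_is_open(node, port))
```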

You can configure the API and UI access node ports and the MinIO domain name if you have TLS configured for MinIO.

To store your MinIO container audit logs, select Enable Log Search API and enter the amount of storage you want to allocate to logging. The default is 5 disks.

You can also configure a MinIO certificate.

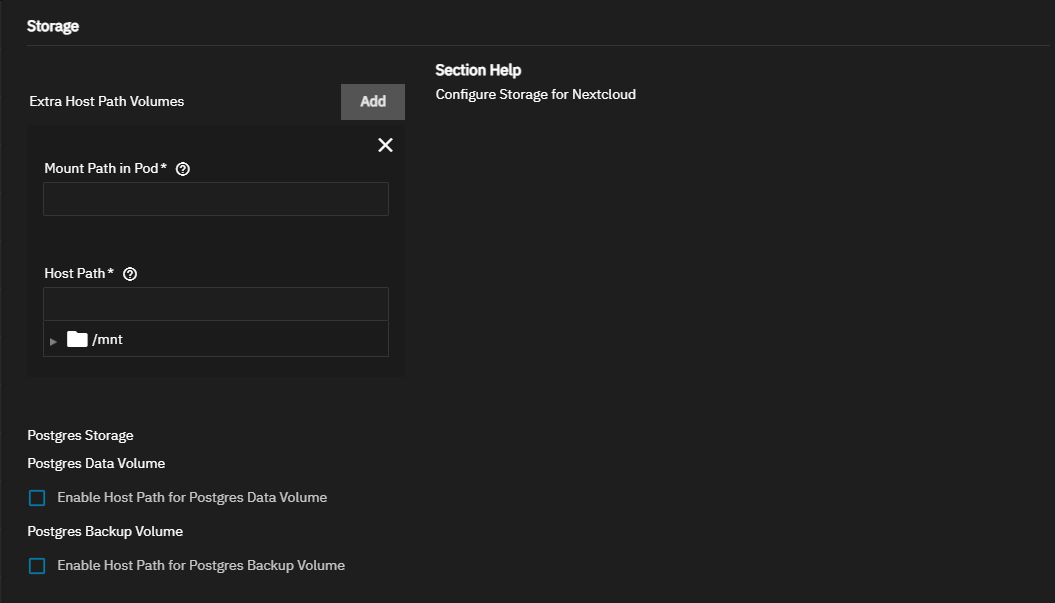

Configure the storage volumes. Accept the default /export value in Mount Path. Click Add to the right of Extra Host Path Volumes to add a data volume for the dataset and directory you created above. Enter the /data directory in Mount Path in Pod and the dataset you created in the First Steps section in Host Path.

Accept the defaults in Advanced DNS Settings.

If you want to limit the CPU and memory resources available to the container, select Enable Pod resource limits then enter the new values for CPU and/or memory.

Click Install when finished entering the configuration settings.

Now that the first node is complete, configure any remaining nodes (including datasets and directories).

After installing MinIO on all systems (nodes) in the cluster, start the MinIO applications.

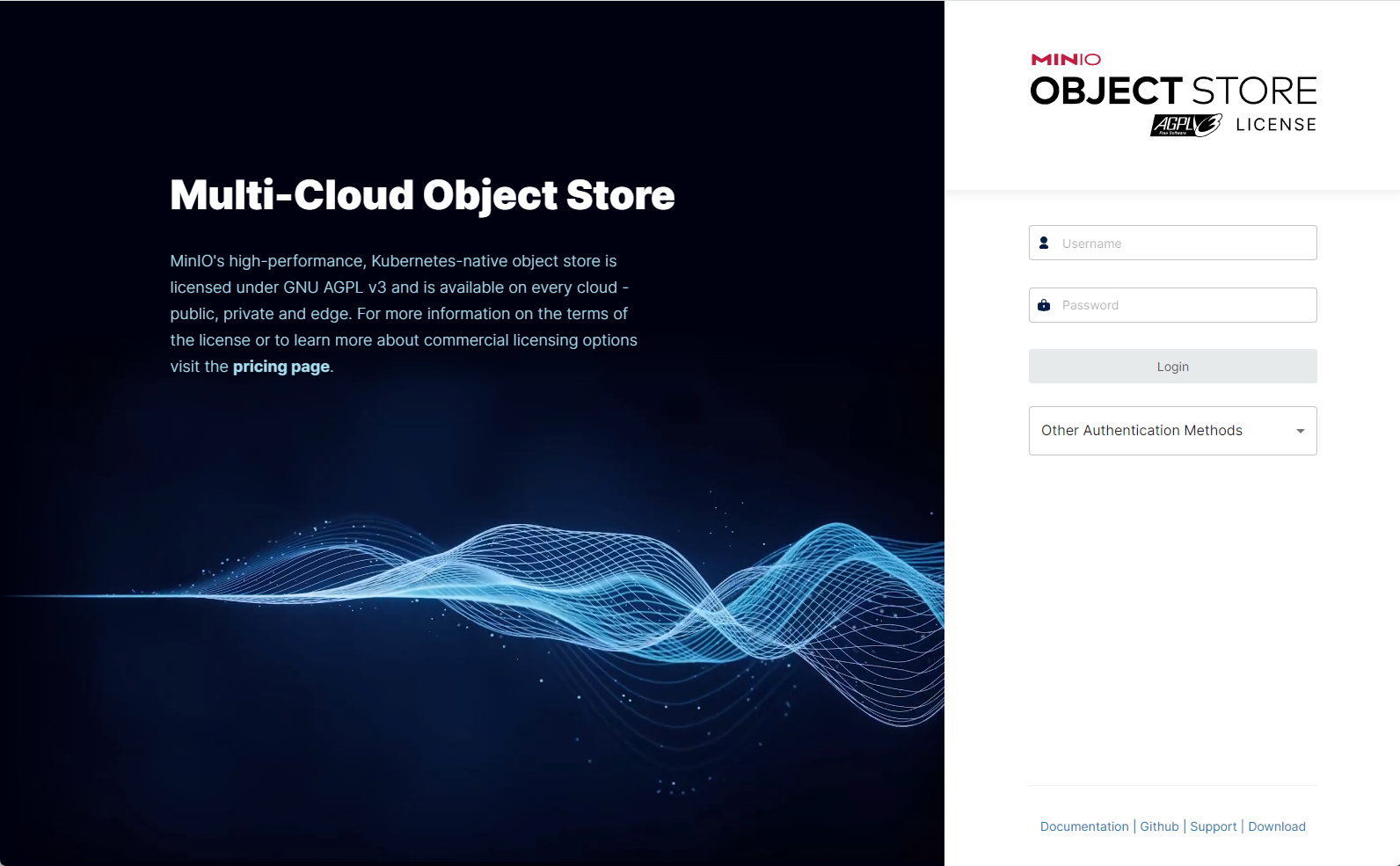

After you create datasets, you can navigate to the TrueNAS address at port :9000 to see the MinIO UI. After creating a distributed setup, you can see all your TrueNAS addresses.
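Besides opening the UI in a browser, you can ask each node whether its MinIO server is responding. The sketch below calls MinIO's liveness endpoint, /minio/health/live, on server port 9000 using only the standard library. The helper names, the plain http scheme, and the placeholder addresses are assumptions; adjust the scheme if you configured a certificate.

```python
import urllib.error
import urllib.request


def liveness_url(host: str, port: int = 9000, scheme: str = "http") -> str:
    """Build the URL of the MinIO liveness check for one node."""
    return f"{scheme}://{host}:{port}/minio/health/live"


def node_is_live(host: str, port: int = 9000, timeout: float = 3.0) -> bool:
    """Return True if the node answers the liveness check with HTTP 200."""
    try:
        with urllib.request.urlopen(liveness_url(host, port), timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection errors, timeouts, and HTTP error statuses all count as not live.
        return False


# Example usage with placeholder addresses (uncomment and adjust for your nodes):
# for node in ["192.0.2.11", "192.0.2.12", "192.0.2.13", "192.0.2.14"]:
#     print(node, node_is_live(node))
```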

Log in with the MINIO_ROOT_USER and MINIO_ROOT_PASSWORD keys you created as environment variables.

Click Web Portal to open the MinIO sign-in screen.